vozka

Posts posted by vozka

-

1 hour ago, Oktokolo said:

The only catch is that you can't store non-AI-generated images that way - yet (surely there could also be an AI that takes an image and generates a detailed description from it, but some human-visible details would likely be lost in the process).

This is already possible to a degree. It takes two steps: first you use the AI's language model to "interrogate" the image and find a text description that would generate something close to it. Then you run the diffusion algorithm, which normally turns noise into an image, in reverse, to gradually turn the image back into noise. This gives you the starting noise and the description, and regenerating from them often gives a result very close to the original image.
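To make the "diffusion in reverse" step a bit more concrete, here is a minimal sketch of one common way to do it, deterministic DDIM inversion. I'm not claiming this is exactly what the Stable Diffusion test implementation does. The noise schedule and `predict_noise` are hypothetical stand-ins for the real network, the image is a single number to keep it short, and the text-interrogation half is not modeled at all.

```python
import math

# Toy "alpha-bar" noise schedule: near 1 = almost clean image, near 0 = almost pure noise.
# The values are made up for illustration.
ALPHA_BARS = [0.99, 0.9, 0.7, 0.4, 0.1]

def predict_noise(x, alpha_bar):
    """Hypothetical stand-in for the neural network that estimates the noise in x.

    It returns a constant, which makes the inversion below exact; a real network's
    estimate depends on x, so real inversion is only approximate.
    """
    return 0.2

def ddim_move(x, alpha_from, alpha_to):
    """One deterministic DDIM step (eta = 0) from noise level alpha_from to alpha_to."""
    eps = predict_noise(x, alpha_from)
    x0_estimate = (x - math.sqrt(1 - alpha_from) * eps) / math.sqrt(alpha_from)
    return math.sqrt(alpha_to) * x0_estimate + math.sqrt(1 - alpha_to) * eps

def generate(noise):
    """Normal direction: walk from the noisiest level to the cleanest one."""
    levels = ALPHA_BARS[::-1]
    x = noise
    for a_from, a_to in zip(levels, levels[1:]):
        x = ddim_move(x, a_from, a_to)
    return x

def invert(image):
    """Reverse direction: walk from the cleanest level back to the noisiest one."""
    x = image
    for a_from, a_to in zip(ALPHA_BARS, ALPHA_BARS[1:]):
        x = ddim_move(x, a_from, a_to)
    return x

noise = 1.234               # a one-pixel "image", to keep the sketch short
image = generate(noise)
recovered = invert(image)   # equals `noise` up to floating-point rounding
```

With a real network the noise estimate changes from step to step, so the recovered noise is only close to the true one, which fits with the imperfect results of the early implementation.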

I was playing with it yesterday because it then lets you change some details without changing the whole image. That allows you to take portraits of your female friends and transform them into old and unattractive versions of themselves, which, I found, all of them universally appreciate and find kind and funny.

The early implementation in Stable Diffusion is just a test and it's quite imperfect (it produces noisy images with overblown colours among other things), plus it's limited by the quality of normal SD image generation, but as a proof of concept it obviously works and will probably get better soon.

1 hour ago, Oktokolo said:

Storing images like that also means, that there finally isn't a distinction between vector and bitmap images anymore. Both would be generated by an AI and are therefore sorta infinitely scalable (but ultimately the contained detail in the resulting image keeps being limited by the size of the AI of course).

However, I don't see this happening any time soon. The AIs work with bitmaps and are trained on a specific image size (with Stable Diffusion it's 512 x 512 px at the moment), and making anything significantly smaller or larger completely breaks the process. Various AI upsampling algorithms exist, but they never work as well as generating the image straight away at the resolution the neural net was optimized for. And I don't know of any practical solution to this yet.

-

2 hours ago, jaxa said:

This is off-topic, but you just reminded me of a great memory.

I was always into underground music culture, mostly metal as a teenager, but later also electronic music. A few months after I moved to a different city for university I discovered a tiny underground (literally) private bar, basically a rave speakeasy. No license, a camera at the entrance, located in what used to be a wine cellar and later a PC gaming arcade (which left behind very strong air conditioning, so the weed only smelled outside, not inside). On weekdays it was open from about 6-7 pm to about 4 am, and almost every time there were one or more DJs playing more or less underground electronic music, for free, just for fun. Sometimes they did live broadcasts over the internet, before that became a common thing.

I still remember the first time I came there, walked down a narrow set of stairs and entered the dark main room with a couple of tables with benches and about 10 different CRT monitors placed at random spots (including under the benches), all playing Electric Sheep animations. At the time it felt like something from The Matrix.

The place is still sort of operating many years later, but it got handed over to guys who care more about getting drunk every day than about music or technology, so the magic is long gone.

-

3 hours ago, chakkman said:

A computer can neither just forget things, nor can it have a sudden flash of insight.

Neural networks can and do forget things during training, and their memory is very imprecise. As far as I know, we're not entirely sure why that is, but it's one of the basic principles without which the whole concept wouldn't work.

It is true that a neural network cannot have a sudden flash of insight, or more precisely, it cannot realize that it just had one. Artificial general intelligence does not exist yet and is probably a couple of decades away, and specialized AI is not self-aware. It can certainly act as a flash of insight for a human who uses it to create something unexpected, though.

-

2 hours ago, chakkman said:

No, it isn't. That's just utter nonsense. The human brain is neither fully understood, nor is there any degree of complexity which could map the whole brain in software. Whoever claims that is either a complete brainfart, or deliberately lying.

I know, people are in science fiction these days, but, that's what it is really. Science fiction.

Again, tell a machine to invent a computer (or something similar). Or to come up with Einstein's theory of relativity.

I'm not sure why people think that would be possible from a computer. A computer can do things which you command him to do, and even do some kind of "learning" based on the parameters you put into it. But, expecting any kind of human behavior is just ridiculous, because a machine doesn't think like a human being, or any other living being.

A computer can forget things? Yes, maybe when you program it to forget things, based on a predefined pattern. And that's the thing. The pattern is predefined. It's not random. Not analog. It's digital. A computer can neither just forget things, nor can it have a sudden flash of insight. It just can act in predefined routines, put into it by its programmer. Even if it allegedly acts human, like in machine "learning", which is nothing other than, again, predefined behavior.

You make some good points, but I personally think it's similarly naive to believe that we cannot eventually get to a point where an artificial intelligence produces results so similar to a human's in some areas that the fact that it functions differently becomes irrelevant.

In fact, I'd say that current neural networks are a big step forward, both in terms of the results they produce and in terms of how closely they resemble the way a brain works. We know that artificial "neurons" are a distant abstraction of real ones, but a neural net is not just a normal mathematical algorithm. Yes, it's "just" matrix multiplications, but the complexity of the computation is so far beyond what any human could analyze or design that it's a black box. That in particular is similar to a real brain.

The output of a neural network is deterministic, just like with a "normal" algorithm: two identical inputs give you two identical outputs. In the case of image generation algorithms, part of the input is noise (in the shape of the final image), which dictates how the final image will look. Change the noise very slightly and the output can be completely different. Since we want the results to be reproducible, we use a pseudorandom noise generator initialized by a seed number. The same seed will produce the same noise pattern and therefore the same resulting image.

What if we instead used a noise generator that is truly stochastic? We are able to do that (using specialized hardware). The algorithm itself would still be deterministic, but its output would be unpredictable because part of the input would be unpredictable. Would that be "organic" enough?
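The difference between the seeded case and the truly stochastic case can be shown with Python's standard library. This is a sketch under obvious assumptions: `toy_generator` is a hypothetical deterministic stand-in for the image network, and `random.SystemRandom` (which draws from the operating system's entropy source) stands in for dedicated noise hardware.

```python
import random

def make_noise(rng, size):
    """Draw `size` Gaussian noise values from the given random source."""
    return [rng.gauss(0.0, 1.0) for _ in range(size)]

def toy_generator(noise):
    """Hypothetical deterministic stand-in for the image network."""
    return [2.0 * n + 1.0 for n in noise]

# Seeded pseudorandom noise: the same seed always gives the same noise, hence the same "image".
image_a = toy_generator(make_noise(random.Random(42), 8))
image_b = toy_generator(make_noise(random.Random(42), 8))

# Noise from the operating system's entropy source: the generator is still deterministic,
# but its input, and therefore its output, cannot be predicted or reproduced.
image_c = toy_generator(make_noise(random.SystemRandom(), 8))
```

`image_a` and `image_b` are identical, while `image_c` will differ on every run even though `toy_generator` itself never changed.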

And what if we stopped computing the neural network on a GPU and instead used a chip that does analog matrix multiplication (these already exist, although they are not yet powerful enough for this particular use case), which brings a stochastic element into the computation itself? The computation is then no longer deterministic. You know roughly what will happen, but you cannot predict the exact result even if you know the input.
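A toy model of what "stochastic computation" means here: the same matrix-vector product computed exactly, and with a small random error on every multiplication. The 1 % Gaussian relative error is purely an assumption for illustration, not a description of any real analog chip.

```python
import random

def matvec(weights, x):
    """Exact digital matrix-vector product."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def analog_matvec(weights, x, rng, rel_noise=0.01):
    """Toy analog multiply: each product picks up a small random relative error.

    The Gaussian error model is an assumption for illustration only.
    """
    return [
        sum(w * xi * (1.0 + rng.gauss(0.0, rel_noise)) for w, xi in zip(row, x))
        for row in weights
    ]

W = [[0.5, -1.0], [2.0, 0.25]]
x = [1.0, 2.0]
exact = matvec(W, x)                          # [-1.5, 2.5]
noisy = analog_matvec(W, x, random.Random())  # close to exact, different on every run
```

Even with identical inputs, `noisy` lands near `exact` but not on it, which is the "you know roughly what will happen" behavior described above.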

Would that be organic enough? It is certainly very different from how any normal computation works.

-

10 hours ago, Xolvix said:

A part of me is a bit uncomfortable with such AI because I tend to feel that art is the domain of the human rather than the machine, and I've heard the idea that AI will be able to basically replace all humans in the art-making process eventually. Maybe that will still happen, but if I am aware that a bot made some artwork instead of a human then there's something lost. Art is one of the few things that still has some soul attached to it (excluding mass-market stuff), so I don't know if I'm being old-fashioned but I'd feel better if I knew at least the base of the work was still mostly under the creation of a human.

This will undoubtedly happen with large parts of digital art. But there's a ton of art in the physical world that is not in danger. Even oil paintings: you can print digital art on canvas, but it won't be able to mimic specific painting techniques that create a physical structure on the canvas, or specific types of pigment. Or various kinds of mixed media. Then there are even more physical media like sculpture, which is an infinite world of its own.

Honestly, none of the various art school graduates and teachers I know feel threatened by this, because none of them ever did the kind of ordinary digital art that an AI can produce.

-

37 minutes ago, AluminumHaste said:

Also:

That's the beauty of it being open-source. An unofficial AMD port already exists (although I have no idea how well it works), and people have made several optimizations that cut the memory needed to run it to about half with almost the same speed, or to less than half at reduced speed.

14 minutes ago, jaxa said:

Actually, Nvidia has had image models for generating photorealistic faces for years now. They have gotten better over the years.

Applying 2D text-to-image algorithms to modern games seems unlikely, with the exception of making lots of textures and maybe 2D portraits for UI, but there are many other algorithms being worked on. Maybe a similar approach could be used to replace procedural generation techniques. Like making a cave/dungeon in Skyrim, or that thing Tels was working on a decade ago. Rather than making a raster image, it could make geometry, place textures, and design a whole city.

Bring on the negative societal implications of bots invading art, I'm all for it. But one thing to watch out for is the copyright question. These image models can be trained on a superset of copyrighted images or a smaller focused subset (to mimic an artist's style) and produce images that could lead to novel legal questions and expensive copyright lawsuits. This is not a problem for people making memes and shitposting online, but it could be a massive problem for game developers, big or indie.

Sounds like vozka has made some textures with it? These can be scaled up from small 512x512 sizes to higher resolution with a separate upscaling algorithm, that's what people have been doing to make stuff presentable along with touchups in Photoshop/GIMP. Whether the results are any good is another story, maybe vozka should post their results.

My results are just a proof of concept and not very useful for game assets, but I was pretty happy with them. I made a very simple 3D render with unnaturally clean PBR materials, and then added some dust and dirt to it using Stable Diffusion.

I had to overlay a black noise texture over the clean materials because the AI needs something dirt-like to work with. It will not generate dirt on something that is completely clean unless you let it deviate from the base image a lot, and in that case it tends to change the shape of objects and distort things. I also had to render the bench and the garbage bin separately (but keep their shadows in), because the AI tended to change them too much.
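The post doesn't say exactly how the noise texture was combined with the render. A multiply blend at partial opacity is one plausible way, sketched here on grayscale values in [0, 1]; the function name and the default opacity are my own assumptions.

```python
def overlay_dark_noise(image, noise, opacity=0.3):
    """Darken `image` with a noise texture using a multiply blend.

    Both inputs are 2D lists of grayscale values in [0, 1]. A noise value of 0
    (black) darkens the pixel the most; a value of 1 leaves it unchanged.
    """
    out = []
    for img_row, noise_row in zip(image, noise):
        row = []
        for p, n in zip(img_row, noise_row):
            multiplied = p * n
            # Interpolate between the original pixel and the multiplied one.
            row.append(p + opacity * (multiplied - p))
        out.append(row)
    return out
```

For example, `overlay_dark_noise([[1.0, 0.5]], [[1.0, 0.0]], 0.5)` leaves the first pixel at 1.0 and darkens the second from 0.5 to 0.25, giving the AI some "dirt" to build on.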

The images are 832 x 832 px because that's the maximum that currently fits into my 6 GB of VRAM (I'm not using the latest optimizations). But when generating something noise-like such as dirt (which doesn't need much continuity), you can easily split a large render into smaller tiles and process it tile by tile.
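Splitting a render into tiles is simple bookkeeping. A sketch that computes the tile rectangles, assuming square tiles; the optional overlap is my addition (it can help hide seams), and with `overlap=0` you get plain tiles. Each box would then be cropped out and sent through Stable Diffusion separately.

```python
def tile_boxes(width, height, tile=512, overlap=64):
    """Split a width x height image into tiles of size tile x tile.

    Returns (left, top, right, bottom) boxes. The last row/column is shifted back
    so every tile has the full size and stays inside the image.
    Assumes width and height are at least `tile` and overlap < tile.
    """
    step = tile - overlap

    def starts(length):
        positions = list(range(0, length - tile + 1, step))
        if positions[-1] != length - tile:
            positions.append(length - tile)  # cover the remaining edge
        return positions

    return [(x, y, x + tile, y + tile) for y in starts(height) for x in starts(width)]
```

For a 1024 x 512 render with no overlap this yields two tiles, `(0, 0, 512, 512)` and `(512, 0, 1024, 512)`.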

Before AI dirt:

After AI dirt:

-

27 minutes ago, chakkman said:

I can't imagine this getting very popular in professional game development. Maybe for very low budget projects. For everyone else, it will surely not be custom enough. Sounds very generic. I know, generic world creation is a thing, but, not so much when it comes to artwork.

I do not have permission to name names, but the concept artists I mentioned work for a mid-sized company and they use Midjourney for sci-fi themed concept art. Surprisingly, the results don't look generic at all once you learn to use it, but it's true that the "text to image" workflow does not give you enough control to generate assets straight away (and the quality is not good enough either). Midjourney is a paid proprietary service that specializes in results that are slightly less photorealistic but more creative and artistic.

But the important thing is that the "image to image" workflow exists.

Normally with text to image you describe what you want, and the neural net starts with noise and gradually rearranges it into something that resembles the description. With "image to image" you instead feed the neural net an actual image to start from, and have it transformed into something that fits the description. You can set how far the program is allowed to deviate from the base image. This gives you a lot more control, and it could potentially be used to generate textures, for example.
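The "how far it may deviate" setting is usually called strength (or denoising strength). As far as I understand common implementations such as the Hugging Face diffusers img2img pipeline, it decides how much noise is added to the input image and how many of the denoising steps are actually run. A toy sketch of that idea; the linear noise schedule and the function name are assumptions, and the denoising loop itself is left out.

```python
import math
import random

def img2img_start(init_pixels, strength, num_steps, rng):
    """Prepare an image-to-image run (toy version).

    strength = 0.0 keeps the input untouched; strength = 1.0 buries it in noise
    completely, which amounts to plain text to image.
    Returns the partially noised starting image and how many denoising steps remain.
    """
    steps_to_run = min(int(num_steps * strength), num_steps)
    noise_level = steps_to_run / num_steps  # 0 = clean, 1 = pure noise
    signal_level = 1.0 - noise_level        # toy linear schedule (assumption)
    noised = [
        math.sqrt(signal_level) * p + math.sqrt(noise_level) * rng.gauss(0.0, 1.0)
        for p in init_pixels
    ]
    return noised, steps_to_run
```

With 50 steps and a strength of 0.5, only the last 25 steps run, starting from an image that still carries a lot of the original structure; that is why low strengths stay close to the base image.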

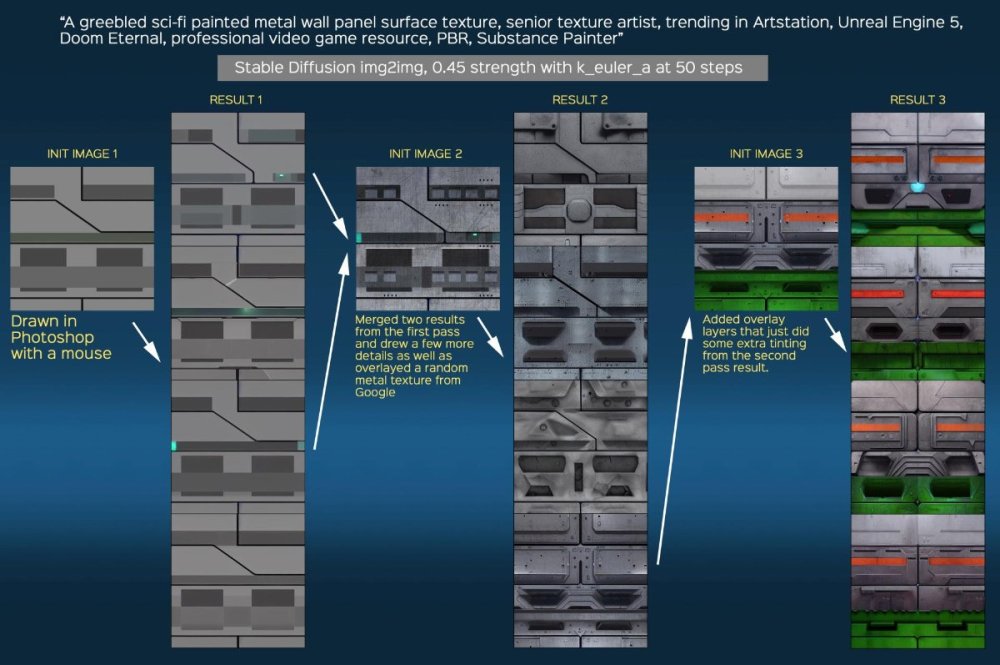

Some guy just posted an example on Reddit. He drew a rough scheme of a "scifi wall" using basic shapes, overlayed a random scratched metal texture and had SD generate several versions of a scifi wall from that. Then he edited his favorite result a bit and fed it back into SD, generating the final version. This is how it looked:

I used the same workflow when I was adding grime to a final render (so not straight to a texture) and it worked well too.

-

I assume all of you have at least heard about it already or seen some pictures. Neural networks that generate decent-looking images from a text prompt and do some other things (like modifying existing images) are becoming mainstream. They cannot generate photorealistic hi-res images yet, but development is incredibly fast, so they will probably get reasonably close within a few years.

The big thing that happened recently is that Stability AI released their implementation of such a neural network, Stable Diffusion, for free as open source. The quality is not the best, but it's good enough, while the alternatives are behind a paywall or a waitlist, or are closed-source services that aren't good enough. You need a GPU (preferably Nvidia) with at least 4 GB of VRAM to run it, so nothing special.

The potential implications for society are big (and not necessarily positive), but honestly I don't really want to start that discussion, because the internet is already full of it and so many people are surprisingly emotional about it. Go to Twitter if you want to see (you don't).

I'm more interested in what you think about using it in game development. I know that in some commercial studios it's already being used by concept artists, because firstly it's a reasonably efficient inspiration machine, and secondly the images it produces are not licensed (and that doesn't matter that much in pre-production anyway), so if you manage to create something good, you can just paint over it to fine-tune it and use it. But it can do other things too.

It can do inpainting, which can be used to remove seams or blemishes in phototextures.
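At its core, inpainting replaces only the pixels inside a mask and keeps the rest. A minimal sketch of that compositing step, assuming grayscale 2D lists and a mask in [0, 1]; real inpainting models also condition the generation itself on the mask, which is not shown here.

```python
def masked_blend(original, generated, mask):
    """Keep original pixels where mask == 0 and take generated pixels where mask == 1.

    Fractional mask values (a feathered mask) blend the two, which helps hide
    the edge of the repaired area, e.g. around a seam or blemish.
    """
    return [
        [o * (1.0 - m) + g * m for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]
```

For a phototexture, the mask would cover just the seam or blemish, so the untouched parts of the photo stay pixel-identical.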

It can also generate seamless textures (there's a switch that causes every generated image to be periodic). There are no examples of people using them in games or renders yet because that feature is less than a week old.
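As far as I know, the seamless switch in popular Stable Diffusion front ends works by making the network's convolutions wrap around the image edges (circular padding) instead of padding them with zeros. A toy illustration with a simple 3x3 blur rather than a neural network: because every pixel's neighbourhood wraps around, the filter has no special edge, so shifting the input and then filtering gives the same result as filtering and then shifting.

```python
def wrap_blur(img):
    """3x3 box blur that wraps around the edges (circular padding).

    Neighbours are read modulo the image size, so the left edge "sees" the right
    edge and the top "sees" the bottom; the output therefore tiles seamlessly.
    """
    h, w = len(img), len(img[0])
    return [
        [
            sum(img[(y + dy) % h][(x + dx) % w] for dy in (-1, 0, 1) for dx in (-1, 0, 1)) / 9.0
            for x in range(w)
        ]
        for y in range(h)
    ]

def roll(img, dx, dy):
    """Shift an image by (dx, dy) pixels with wrap-around."""
    h, w = len(img), len(img[0])
    return [[img[(y - dy) % h][(x - dx) % w] for x in range(w)] for y in range(h)]
```

The check `wrap_blur(roll(img, 1, 2)) == roll(wrap_blur(img), 1, 2)` holds for any image, which is another way of saying the result has no seam.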

Personally, I tried using it to add dirt and imperfections to a render that was too clean, and that was a success as well. Adding dirt to a render is not exactly useful in most games, but I bet the approach could be adapted to add some grime to game textures as well.

Any ideas? Have you tried it already?

-

Really nice mission! I haven't played any of your older ones, so I had no idea what to expect, and it was a very pleasant surprise. Tight and great-looking; the environment was really well made. I also appreciated some of the new (or less-used) soundscapes. I think they're a big part of the overall atmosphere, and while pretty much all of those used in TDM are good, the effect wears off when you hear them all the time.

Looking forward to your next work!

-

I encountered the bow crash again, in a different mission. This time it was in Who Watches The Watcher?, and it happened again when shooting an NPC with a broad arrow (a guard, so a different NPC than in Hazard Pay, and it's not zombie-related).

I noticed one new thing: the bow shot that crashes the game has a broken animation. Something happens when "loading" the bow: the (broad) arrow seems to vanish, and maybe the bow is at a weird angle as well. I'm not sure, since it happens pretty fast and I only saw it twice. Then I think the arrow release still works, and as soon as the arrow (I don't know if it's visible by then or not) hits the enemy, the game immediately crashes.

I can only reproduce it randomly. I have a save created shortly before the crash happened, and I managed to reproduce it once in 2 tries, but in the next 15-20 tries I was not successful again, so I gave up. I only managed to reproduce it with broad arrows, not with the water, gas or fire arrows that I had on me, but that may have been by chance.

-

Very enjoyable, thanks for this mission.

I'm currently watching the Snowpiercer series (which is pretty good, by the way), so this fits right in thematically. Great idea, and it seems to me that a train mission is the opposite of a sewer mission: everybody hates those and they're almost always bad, whereas when a game dares to do a train mission, it's almost always good.

I would have liked it to be longer, simply because I was having fun. You can't have it all, though, and compact missions are nice too.

Getting enough loot was just fine for medium difficulty, but I honestly have no idea where the rest of it could have been hidden, so good job on that too.

-

A slightly different topic, since his games are free all the time and not just during giveaways, but do you know Increpare Games? He produces a staggering number of puzzle games, some of which are really smart and really good, others just funny and stupid. Often the first challenge is to understand how the game works and what its goal is.

Gestalt OS, for example, is one of the best puzzle games I've played.

-

1 hour ago, Shadow said:

Just curious was it K-Lite codec pack? I was looking for the same way to fill in thumbnails also and that did it perfectly for me.

Nope, I don't like downloading codec packs, because I remember they used to make a mess of the system by including outdated codecs, unneeded codecs that conflicted with other apps, etc. That was about 15 years ago and it's possible the situation is entirely different now, but it stuck with me (and until now I hadn't needed to install any codecs separately for about a decade). There's a Google webp codec and a Windows Store webp codec; I used the Google one.

-

2 hours ago, OrbWeaver said:

The problem is you're defining better in a very narrow way — "more efficient at compressing data" — while ignoring other aspects which to most people are equally (if not more) important. Application support is one of those aspects. As your own experience with Windows 10 and WEBP shows, a "better" format is useless if nothing else supports it.

Similarly, Opus might be better at compressing audio than Ogg Vorbis and MP3, but if you can't play it on your device of choice, it is no better at all. For most uses, wide application and device support is a far more important concern than squeezing a bit more quality out of a slightly smaller file. Especially given that storage and network costs are decreasing and the size difference between a 96 kbps and a 160 kbps file is practically irrelevant.

Well, yeah, the problem of slow adoption is precisely what I'm complaining about. It's kind of a chicken-and-egg problem: if nobody adopts it, nobody is going to use it and it's going to have little support. I do realize that this is a difficult problem to solve, because companies basically have to volunteer to "passively" support it and hope it brings them advantages in the long term.

There are other aspects that I omitted as well, for example HE-AAC v2 being slightly more demanding to decode (which is also the reason why Bluetooth historically used the poor SBC codec), but those are largely moot nowadays as well (even integrated Bluetooth chips and the cheapest SoCs can decode pretty much any audio format with no issue).

Your point regarding storage and network costs does not cover the whole situation; I see three issues with it.

Firstly, mobile internet is still slow in many places in the world, and data is often capped. Where I live it's difficult to get more than a 5 GB monthly limit for a reasonable price.

Secondly, most websites are bloated as hell, and any way to speed up their loading helps. This was the main reason for webp's creation and adoption.

Thirdly, for services like music and video streaming, bandwidth is expensive, and the difference between using 160 kbps and 96 kbps for the same quality can save a lot of money. That was the reason why YouTube and SoundCloud switched to more modern codecs. And it's not just audio and video: in some apps Netflix has already switched to one of webp's successors for movie thumbnails, because at such a large scale even serving thumbnails in decent image quality costs a lot of money.
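To put numbers on the 96 vs 160 kbps difference and on a 5 GB data cap, a quick back-of-the-envelope calculation, assuming constant bitrate and ignoring container overhead:

```python
def stream_size_mb(kbps, seconds):
    """Size of a constant-bitrate stream in megabytes (1 kbps = 1000 bit/s, 1 MB = 10**6 bytes)."""
    return kbps * 1000 / 8 * seconds / 1_000_000

def hours_per_quota(kbps, quota_gb=5):
    """How many hours of audio fit into a monthly data cap of quota_gb gigabytes (10**9 bytes)."""
    bytes_per_second = kbps * 1000 / 8
    return quota_gb * 1_000_000_000 / bytes_per_second / 3600

song = 4 * 60                     # a four-minute song, in seconds
high = stream_size_mb(160, song)  # 4.8 MB
low = stream_size_mb(96, song)    # 2.88 MB
saving = 1 - 96 / 160             # 0.4, i.e. 40 % less data per stream
hours_160 = hours_per_quota(160)  # about 69 hours per 5 GB
hours_96 = hours_per_quota(96)    # about 116 hours per 5 GB
```

Per song the difference is under 2 MB, but a 40 % cut in traffic matters both on a capped mobile plan and on a streaming service's bandwidth bill.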

By the way, in my case webp was certainly not useless, it was just an annoying situation. I use it for my own backups (so far it seems to be about 1/5 of the png size for photo-like files), so I just downloaded a codec from Google to get Explorer thumbnails, and I don't need other people to support it. Although the fact that Facebook Messenger converts webp files to... static gifs of all things, is kind of annoying (and funny).

-

Since this thread is already silly, why not derail it a bit: the main thing I took from this whole ordeal is that it takes annoyingly long to adopt new and better formats.

There are virtually no reasons to use .gif apart from compatibility with ancient systems. JPEG from an actual image editor instead of mspaint being much better is one thing, but there's also webp, heic, avif, jpeg xl and others, all better than their lossy or lossless predecessors, and most have free implementations. Except almost nobody uses them, because adoption is glacially slow. Surprisingly, Chrome and Firefox are fast, but everyone else isn't, with Apple traditionally being the worst.

I was pleasantly surprised to find that Blender now supports webp output for rendering, which in its lossless version is straight up better than png or tiff, only to find out that Win10 doesn't support it natively and I have to search the web for a codec to get thumbnails in explorer.

It's the same with music files. We've had codecs better than mp3, standard aac or ogg vorbis for almost two decades now, yet these three are still the most used codecs for streaming music. And when a platform decides to use something else, it's often a step down in quality because they push the bitrates down too much, as with YouTube in the last couple of years.

-

However you choose to present your in-progress map is of course entirely your business, but I gotta say that "using crappy built-in software" is a strange hill to die on.

And mspaint being crappy is the only reason why this funny thread exists.

-

I believe this is just a matter of using a saner image editor.

-

4 hours ago, freyk said:

George Broussard:

"Yes, the leak looks real. No, I’m not really interested in talking about it or retreading a painful past.

You should heavily temper expectations. There is no real game to play.

Just a smattering of barely populated test levels."

Source: https://twitter.com/georgebsocial/status/1523602422437842944

He seems really bitter about the project for some reason, which is quite strange considering this all happened 20 years ago. Having seen the footage, it definitely seems to be more than "a smattering of barely populated test levels", and I can see a group of fans making a game out of it.

-

On 5/6/2022 at 8:41 AM, Xolvix said:

I would argue that a Thief game built entirely around ray tracing, would perhaps be the perfect example of ray tracing tech used for gameplay as opposed to just for looks. Assuming it's done right, it would mean zero confusion between what looks like a dark area of the map as opposed to what the engine considers a dark area of the map. Despite the Doom 3 unified lighting engine, there's still too many situations where the lighting of a region betrays its actual visibility to the AI, so you'll go there to hide but it actually registers brighter than some benign other area of the room due to.. whatever. A ray traced lighting engine should eliminate that discrepancy completely.

I thought about this as well, and on one hand I'm excited about the idea, but on the other it would bring so many changes that I'm not even sure it could work. At the very least you'd have to redesign many of the stealth tropes.

For example, being in a room with dark walls while a guard holding a torch passes through might be fine, but being in a room with white walls might not because the amount of reflected light would be high enough to make you visible. Awesome.

Having to be careful about other people seeing your cast shadow. This would raise the difficulty so much (if it's implemented in any remotely realistic way) that it might be less awesome.

Being seen through reflections in windows, mirrors or water. Possibly leading to frustrating situations where you have no idea how you were seen, unless the game specifically tells you.

I'm sure there are many other things you could think of.

-

On 4/25/2022 at 3:02 PM, AluminumHaste said:

Now, we have players complaining that guards react too slow.

Perhaps I was unclear, because that is not the case. I am complaining that the way the delay manifests in the game looks wrong, not about the delay itself.

The delay itself is probably pretty realistic, but in real life the guard would not react by completely ignoring something he's seen and going on with his life for a few seconds before suddenly turning around and attacking the character. He would probably stop and be confused before realizing what's happening, and then attack.

On 4/25/2022 at 4:03 PM, New Horizon said:

So what kind of situations are you seeing this delayed reaction? How well lit is the player and how far away is the guard roughly? Also, have you set the vision and hearing of the guards in the main menu settings any lower? Have you tried increasing the AI's vision in the settings menu to something higher?

I will have to focus on it next time I'm playing because this is just something that I realized now after reading this thread, not something that I focused on recently. I have not changed any of the AI settings and I think it happens when I'm partly lit and the guard is pretty close.

-

On the topic of guards, is anybody bothered by how they respond to noticing the player not immediately but after a short delay? It seems like it might be intentional, because most people don't have superhuman reflexes and it takes a moment of confusion to realize what's going on when somebody surprises you. But the issue is that between the moment when a guard has you in his line of sight and when he starts acting, he usually just continues what he was doing. This creates weird situations where guards walk past you on their patrol after clearly seeing you, and then suddenly turn around, yell and attack you.

From a gameplay perspective it's obviously not a big issue, it just often looks wrong. So I was wondering if that's intentional, and what the motivation was. I'm thinking that playing some sort of "confused, looking around" animation on the guard would make this situation look much better.
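A minimal sketch of the suggestion as a tiny state machine, in which the existing reaction delay is spent in a visible "confused" state instead of the guard carrying on with his patrol. Everything here (class name, states, the 1.5 s delay) is hypothetical; TDM's real AI is implemented differently.

```python
from dataclasses import dataclass

@dataclass
class Guard:
    """Hypothetical guard reaction logic; states and timings are illustrative, not TDM's actual AI."""
    state: str = "patrol"
    timer: float = 0.0
    reaction_delay: float = 1.5  # seconds between noticing the player and reacting

    def update(self, dt: float, sees_player: bool) -> str:
        if self.state == "patrol" and sees_player:
            # Stop walking and play a "confused, looking around" animation
            # instead of carrying on with the patrol.
            self.state = "confused"
            self.timer = 0.0
        elif self.state == "confused":
            self.timer += dt
            if self.timer >= self.reaction_delay:
                # Delay over: attack if the player is still visible, otherwise search.
                self.state = "attack" if sees_player else "search"
        return self.state
```

The total delay stays the same; the only change is what the player sees the guard doing during it.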

-

On 4/21/2022 at 8:47 PM, kano said:

Actually I have a fitting question for this topic. As the game ages, how hard would it be for the TDM team to spit out versions of the models with more subdivisions to make them look better? I mean, since it's all open source, can't one just take the original model files, ratchet up their subdivision, and then reimplement them into the game as hardware gets more powerful?

The other day I spawned a whole bunch of guards on my 5950x machine just to see what would happen to the frame rate, because I remember doing that same thing making a Pentium 4 beg for mercy, and it didn't even flinch.

There's no simple way to add polygons and make the model look better without a lot of work.

What might make more sense would be replacing low-res textures. In my opinion those are more painful to look at, and they are often used right next to high-resolution textures on the same surface or in the same room. After playing a few games (Thief 1 & 2 among others) with neural network upscaled textures, I believe this technique could be used with success.

It takes a while to tune the processing pipeline so that the textures aren't oversharpened, noisy etc., but the results can be good. Selecting the right textures, processing them (seamless textures often need hand retouching, for example, and alpha channels are not always supported) and testing them in-game also takes time, but it's doable.
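For the seamless-texture problem, one common trick is to pad the texture with wrapped-around copies of its opposite edges before upscaling, then crop the padding off, so the upscaler sees proper context at the borders. A sketch, with nearest-neighbour enlargement as a hypothetical stand-in for the neural upscaler; an alpha channel could presumably be handled the same way as a separate grayscale image.

```python
def upscale_nearest(img, factor):
    """Stand-in for a neural upscaler: nearest-neighbour enlargement of a 2D list."""
    return [
        [px for px in row for _ in range(factor)]
        for row in img
        for _ in range(factor)
    ]

def upscale_tileable(img, factor, pad=2, upscaler=upscale_nearest):
    """Upscale a seamless texture without breaking its seams.

    1. Pad with wrapped-around borders, so the upscaler sees the opposite edge as context.
    2. Upscale the padded image.
    3. Crop the (now enlarged) padding back off.
    """
    h, w = len(img), len(img[0])
    padded = [
        [img[(y - pad) % h][(x - pad) % w] for x in range(w + 2 * pad)]
        for y in range(h + 2 * pad)
    ]
    big = upscaler(padded, factor)
    p = pad * factor
    return [row[p : p + w * factor] for row in big[p : p + h * factor]]
```

With nearest-neighbour the padding makes no visible difference; it only matters for an upscaler that looks at neighbouring pixels, which is exactly the neural case.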

-

What a great mission.

I have no opinion about the various small changes - I enjoy them as they make the mission feel fresh, but I can't say if I prefer them or not. Streamlining the lockpicking is nice on one hand, but on the other hand I've had some tense moments where the timing of a patrolling guard was tight and having to switch between lockpicks and trying to pick some door while he's coming round the corner and nearly breathing down my neck made the situation even more exciting. But I appreciate the effort made so that we can experience something else :).

What I liked about the mission most is how polished and balanced everything was. As in your other missions that I've played, the difficulty is just right; the mission is large and nonlinear enough to be fun, but straightforward enough not to be frustrating. Very enjoyable, including the side contracts; it just flows really nicely. I got stuck at the main objective for a while, but there were several hints around, I just wasn't attentive at first.

Oh, and bonus points for replacing the mission accomplished sound, always appreciated. And the main menu is nice too.

-

An updated list of the best TDM fan missions?

in Fan Missions

Also, most missions have so few ratings that the average is kind of random. If you're just looking for some order in which to play the missions, then this is not a bad one, but it's not really a ranking from worst to best, so don't take the ratings too seriously.