
Everything posted by stgatilov

-

Connection to TDM with automation

stgatilov replied to stgatilov's topic in DarkRadiant Feedback and Development

What's the difference between a command and a statement? I mean, I have this code:

    // Construct toggles
    _camSyncToggle = GlobalEventManager().addAdvancedToggle(
        "GameConnectionToggleCameraSync",
        [this](bool v) {
            bool oldEnabled = isCameraSyncEnabled();
            setCameraSyncEnabled(v);
            return isCameraSyncEnabled() != oldEnabled;
        }
    );

    // Add one-shot commands and associated toolbar buttons
    GlobalCommandSystem().addCommand(
        "GameConnectionBackSyncCamera",
        [this](const cmd::ArgumentList&) { backSyncCamera(); }
    );
    _camSyncBackButton = GlobalEventManager().addCommand(
        "GameConnectionBackSyncCamera",
        "GameConnectionBackSyncCamera",
        false
    );

As you can see, _camSyncToggle is created from a lambda and tied directly to a toolbar button. But _camSyncBackButton is first registered as a command in the CommandSystem, then a same-named command is created in GlobalEventManager, which is finally tied to the toolbar button. Why can't we delete the last call (GlobalEventManager().addCommand)? What does it even do?

UPDATE: Both of these work in the toolbar, and both show up in Keyboard Shortcuts and work properly from shortcuts too.
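To illustrate why a second layer can be useful at all, here is a minimal, hypothetical sketch of one common design. CommandRegistry, StatementEvent and ArgumentList are invented for illustration and are not DarkRadiant's actual classes: commands are named functions that accept arguments, while a UI event (toolbar button, shortcut) only stores a statement string that is resolved through the registry when it fires.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <sstream>
#include <string>
#include <utility>
#include <vector>

// Hypothetical types, not DarkRadiant's API.
using ArgumentList = std::vector<std::string>;
using CommandFunction = std::function<void(const ArgumentList&)>;

// Layer 1: named commands that accept arguments (console, scripts, other code).
class CommandRegistry {
public:
    void addCommand(const std::string& name, CommandFunction fn) {
        _commands[name] = std::move(fn);
    }

    // Parses a statement like "Name arg1 arg2" and runs the named command.
    bool execute(const std::string& statement) const {
        std::istringstream in(statement);
        std::string name;
        if (!(in >> name)) return false;
        auto it = _commands.find(name);
        if (it == _commands.end()) return false;
        ArgumentList args;
        for (std::string arg; in >> arg;) args.push_back(arg);
        it->second(args);
        return true;
    }

private:
    std::map<std::string, CommandFunction> _commands;
};

// Layer 2: a UI event only stores a statement string, so it can bind to any
// command, with or without fixed arguments baked in.
struct StatementEvent {
    std::string statement;
    bool trigger(const CommandRegistry& registry) const {
        return registry.execute(statement);
    }
};
```

In this sketch, a button bound to "SomeCommand 1" would pass a fixed argument to a command that the console can also call with other arguments; whether DarkRadiant's event layer exists for exactly this reason is an assumption.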

Connection to TDM with automation

stgatilov replied to stgatilov's topic in DarkRadiant Feedback and Development

There is one more thing I forgot. In my opinion, it should be possible to assign hotkeys to game connection actions. Default keys are not needed, but if a mapper finds himself using something a lot, he should be able to trigger it quickly from the keyboard. Aside from speed, there is also the problem of screen size: @Wellingtoncrab has a laptop with a small screen, and even the new GUI window takes too much space on it. He should be able to use common features with the GUI window hidden. How is this usually done in DarkRadiant?

That's an interesting option, although a bit complicated. And if we do it for normal maps, we'd better do it for ordinary textures too. Basically, we can distribute tga/png textures and write dds textures straight onto the player's machine on demand. Of course, the TDM installation will increase in size... With all the normal maps, my dds directory is 2.5 GB. Now consider how the size will increase if we also replace dds with tga for ordinary textures (provided that we find most of them in SVN history). I'd say it will become a 5-6 GB download and 9 GB after install...

-

Connection to TDM with automation

stgatilov replied to stgatilov's topic in DarkRadiant Feedback and Development

I think I initially resized the window in wxFormBuilder, then got annoying problems with the activity indicator, and finally forgot that sizes should not be constant. I hope I'll remember where to look for information if I do any GUI work in the future.

UPDATE: I added SetMinSize just before calling Layout.

I tried merging the branch; it worked fine, with no conflicts. Nobody except me changed the Game Connection code, and my changes outside it consist of a tiny hack in MapExporter.

If you update an entity spawnarg every frame, then it will work very badly with "Update after every change". This mode schedules an update on the next frame after every change (delaying by one frame does not increase latency but allows moving many entities at once). Also, "update map" is blocking. If you update the spawnarg only when the mouse is released, then it should be OK.
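A minimal sketch of the "schedule an update on the next frame" idea, assuming a hypothetical DeferredMapUpdater class and a sendUpdate callback that stands in for the blocking "update map" call: each change only records the entity name, and one flush per frame sends all pending changes together.

```cpp
#include <cassert>
#include <functional>
#include <set>
#include <string>
#include <utility>

// Hypothetical sketch, not the actual Game Connection code.
class DeferredMapUpdater {
public:
    using SendFn = std::function<void(const std::set<std::string>&)>;

    explicit DeferredMapUpdater(SendFn sendUpdate) : _sendUpdate(std::move(sendUpdate)) {}

    // Called on every change (e.g. every mouse move): cheap, no I/O.
    void onEntityChanged(const std::string& entityName) { _dirty.insert(entityName); }

    // Called once per frame: at most one (blocking) update, covering all changes.
    void onFrame() {
        if (_dirty.empty()) return;
        _sendUpdate(_dirty);
        _dirty.clear();
    }

private:
    SendFn _sendUpdate;
    std::set<std::string> _dirty;  // set: repeated changes to one entity coalesce
};
```

Moving three entities (or one entity three times) within a frame then costs a single update, which is why the one-frame delay helps with multi-entity drags.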

The common opinion is exactly the opposite: compression artefacts are much more visible in normal maps than in diffuse maps, mostly due to specular lighting. It won't be easy... but I guess it is possible to detect image programs, dump a tga of the end result, compress that tga to dds, and replace the image program with the dds image path. By the way, will we simply delete the tga normal maps after everything is done?

-

linux Wayland compatible engine for Linux users

stgatilov replied to MirceaKitsune's topic in TDM Tech Support

I don't understand the rationale. There is the X Window System, and there is Wayland. Programs written for Wayland don't work under X. Programs written for X work under Wayland via a compatibility layer. Given that we cannot combine both systems in one build, relying on X sounds like the best solution.

I guess you can do this now "in theory", i.e. disable image_usePrecompressedTextures.

-

I wrote a simple script to convert all *_local.tga textures to DDS/BC5 using Compressonator. From very brief testing, the game loads them and they look normal. So I wonder about this point: The easiest solution is to run image programs using the DDS image as the source. All these compressed texture formats are trivial to decompress. The main question is about quality, I guess.
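To back up the "trivial to decompress" point, here is a sketch of decoding one BC4 block. A BC5 block is two BC4 blocks back to back (red, then green), so a normal map decoder would call this twice per 4x4 tile. decodeBC4Block is a hypothetical helper, not part of Compressonator or TDM; it uses round-to-nearest integer interpolation, and other decoders or GPUs may produce slightly different interpolated values.

```cpp
#include <cassert>
#include <cstdint>

// Decodes one 8-byte unsigned BC4 block into 16 texels (4x4, row-major).
void decodeBC4Block(const std::uint8_t block[8], std::uint8_t out[16])
{
    const unsigned r0 = block[0];
    const unsigned r1 = block[1];
    unsigned palette[8];
    palette[0] = r0;
    palette[1] = r1;
    if (r0 > r1) {
        // Six interpolated values: (6*r0 + 1*r1)/7 ... (1*r0 + 6*r1)/7
        for (unsigned i = 1; i <= 6; ++i)
            palette[i + 1] = ((7 - i) * r0 + i * r1 + 3) / 7;
    } else {
        // Four interpolated values plus explicit 0 and 255
        for (unsigned i = 1; i <= 4; ++i)
            palette[i + 1] = ((5 - i) * r0 + i * r1 + 2) / 5;
        palette[6] = 0;
        palette[7] = 255;
    }
    // Bytes 2..7 hold 48 bits of 3-bit palette indices, little-endian.
    std::uint64_t bits = 0;
    for (int b = 0; b < 6; ++b)
        bits |= static_cast<std::uint64_t>(block[2 + b]) << (8 * b);
    for (int t = 0; t < 16; ++t)
        out[t] = static_cast<std::uint8_t>(palette[(bits >> (3 * t)) & 7u]);
}
```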

-

Connection to TDM with automation

stgatilov replied to stgatilov's topic in DarkRadiant Feedback and Development

Sounds good to me. I think if something needs to be added, it should be added to the GUI window. The menu interface was temporary from the very beginning, and I hope it will never be used again. Ok, I'll write some initial tooltips then.

Connection to TDM with automation

stgatilov replied to stgatilov's topic in DarkRadiant Feedback and Development

I don't want to push it... But are there any plans to integrate my latest changes? I have finished working on game connection for now, at least on the DR side. In my opinion, several TODOs remain:

1. Now the "Connection" menu contains only one item, which spawns the new window. Is that OK? Should it be moved somewhere else?
2. There are no icons for the "pause" and "respawn" buttons. I guess I'll try to draw them myself (I use Inkscape a lot). It is obvious how to draw "pause", but what should go on the "respawn" icon?
3. It would be nice to have a more verbose description of the new GUI window. Should it be in tooltips or in the manual/help? Should I try writing it (judging from the feedback on the wiki page, I'd probably avoid it)?

Exceptions in DarkRadiant

stgatilov replied to stgatilov's topic in DarkRadiant Feedback and Development

Legacy OpenGL? I guess you should ask @cabalistic about it, but to me it's total bullshit. Of course, you need a "fat" driver with the deprecated stuff in order to use it. But I think that is only a problem if you want to use newer features (GL3 and later) at the same time. And I think even Linux drivers support the compatibility profile today. As far as I understand, you use GL 2.1 and nothing beyond it, and you rely on glBegin and lots of other legacy stuff anyway. In my opinion it is not wise to try to port everything to the Core profile. It will take a lot of time, result in complicated code, and will most likely be slower than it is now. Did you ever try to reimplement immediate-mode rendering (glBegin/glEnd) in Core GL3? It is very hard to match the speed the driver achieves.

If you manage to put everything into one display list, the performance improvement should be crazy, at least on the NVIDIA driver. Of course, the results are heavily dependent on the driver. The reason display lists are rarely used today is that they are not flexible: you cannot modify parameters or enable/disable anything. A display list only fits if you have a static sequence of OpenGL calls. Perhaps it won't help with lit preview, but the basic "draw things where they are" view is OK.

Oh, I had not enabled lit mode and profiled only the basic one. Yes, calculateLightIntersections is very slow here. With L lights and E entities, you have O(L E) intersection tests in the loop. That's a hot loop which should contain only the bare minimum of calculations. The problem is that you compute each light's bbox O(E) times per frame, and each entity's bbox O(L) times per frame. Compute the boxes once per frame for each object, and the problem should go away.

After that you will hit another problem. I understand that you want stable pointers when you put objects into a container by value. But the truth is, linked lists are very slow to traverse ("The Myth of RAM" should be read by every programmer who cares about performance). Unfortunately, DarkRadiant relies on linked lists in many places, and the same problem happens e.g. during scene traversal: going through entities/brushes is very slow when they are in a linked list. Maybe use std::vector? Or at least std::deque if addresses must not change? In this particular case, you can copy _sceneLights into a temporary std::vector and use the vector in the loop (in calculateLightIntersections). The same applies to bounding boxes: precompute them into std::vector-s before the quadratic loop.
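A sketch of the suggested restructuring, assuming hypothetical Box, SceneLight and SceneEntity types (not DarkRadiant's actual classes) whose computeBounds() stands in for an expensive bbox calculation: each linked list is walked once, every box is computed exactly once into a contiguous std::vector, and the O(L E) loop only reads plain arrays.

```cpp
#include <cassert>
#include <cstddef>
#include <list>
#include <utility>
#include <vector>

// Hypothetical axis-aligned box; DarkRadiant's own AABB type differs.
struct Box {
    float min[3];
    float max[3];
};

inline bool intersects(const Box& a, const Box& b) {
    for (int k = 0; k < 3; ++k) {
        if (a.max[k] < b.min[k] || b.max[k] < a.min[k]) return false;
    }
    return true;
}

// Hypothetical scene objects; in a real scene computeBounds() may be costly.
struct SceneLight  { Box bounds; Box computeBounds() const { return bounds; } };
struct SceneEntity { Box bounds; Box computeBounds() const { return bounds; } };

// Returns (lightIndex, entityIndex) pairs whose boxes intersect.
std::vector<std::pair<std::size_t, std::size_t>> calculateLightIntersectionsSketch(
    const std::list<SceneLight>& lights, const std::list<SceneEntity>& entities)
{
    // O(L + E): traverse each linked list once, compute every box once.
    std::vector<Box> lightBoxes;
    lightBoxes.reserve(lights.size());
    for (const SceneLight& light : lights) lightBoxes.push_back(light.computeBounds());

    std::vector<Box> entityBoxes;
    entityBoxes.reserve(entities.size());
    for (const SceneEntity& entity : entities) entityBoxes.push_back(entity.computeBounds());

    // O(L * E): the hot loop touches only contiguous, precomputed data.
    std::vector<std::pair<std::size_t, std::size_t>> result;
    for (std::size_t l = 0; l < lightBoxes.size(); ++l) {
        for (std::size_t e = 0; e < entityBoxes.size(); ++e) {
            if (intersects(lightBoxes[l], entityBoxes[e])) result.emplace_back(l, e);
        }
    }
    return result;
}
```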

Exceptions in DarkRadiant

stgatilov replied to stgatilov's topic in DarkRadiant Feedback and Development

If you don't need dynamic updates, you can compile a display list and render it whenever the camera moves. It is a very simple approach from basic OpenGL, and it works wonders (I remember seeing it work even faster than VBOs on NVIDIA). Of course, if you change some settings or filters, you need to recompile the display list, which will take some time.

Exceptions in DarkRadiant

stgatilov replied to stgatilov's topic in DarkRadiant Feedback and Development

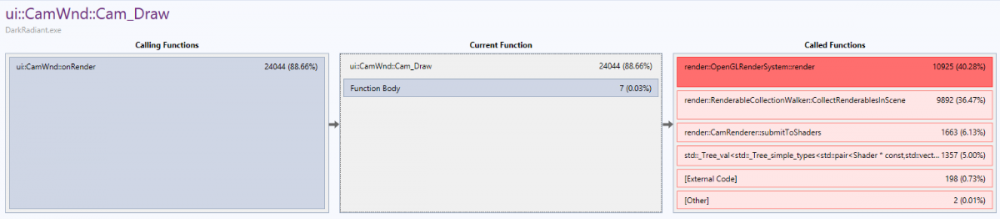

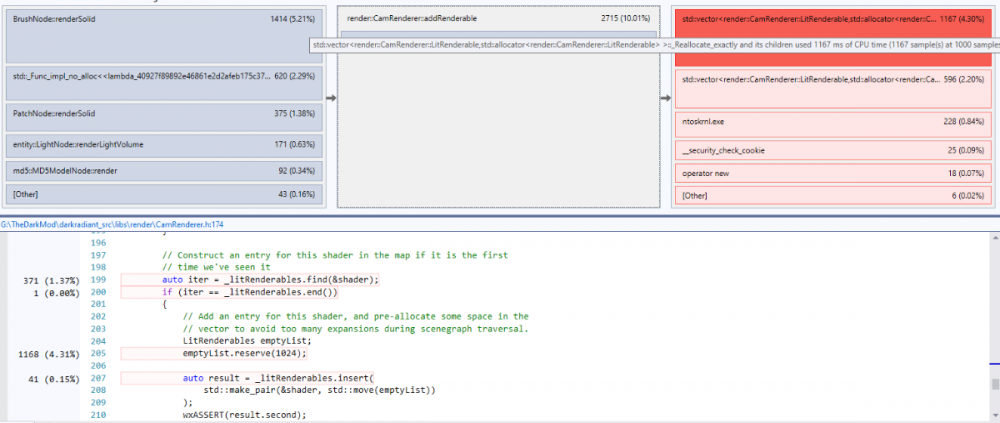

I don't think messing with OpenGL commands is a good idea. You won't win much easily, and redoing all the rendering is too complicated (and the more advanced OpenGL features you use, the more driver bugs you suffer from).

Note: I'm not sure there is much to win here. Maybe 20%... not more.

Here is profiling data from Behind Closed Doors (the worst scene). It seems that CollectRenderablesInScene does not make any OpenGL calls. It is a bit sad to see the std::map destructor eating 5% here (out of the total 88%). Here some time is spent on searching in the map (1.3%), reserving 1024 elements (4.3%), and push_back (2.2%-??3.5%??), out of the 10% spent in addRenderable. This is from within submitToShaders (6.1%), where 5% is spent on various data structures. Once again: changing this won't immediately turn 5 FPS into 20 FPS.

Connection to TDM with automation

stgatilov replied to stgatilov's topic in DarkRadiant Feedback and Development

I fixed the build on Linux CI. Basically, if wxUSE_ACTIVITYINDICATOR is not set to 1, then a wxStaticBitmap is used instead. Its png image was created from a screenshot of a working activity indicator on Windows, so it should look similar. But it won't rotate.

As a rare exception, two dev builds are published at once: dev16335-9550 and dev16342-9552. The latter includes the main menu GUI changes, which will be included in all future dev builds too.

-

Connection to TDM with automation

stgatilov replied to stgatilov's topic in DarkRadiant Feedback and Development

Yes, but I'd like DR users to have this GUI before Spring 2022. Should I provide some sort of replacement under #ifdef? For instance, show an image of a still activity indicator instead of the spinning one.

Exceptions in DarkRadiant

stgatilov replied to stgatilov's topic in DarkRadiant Feedback and Development

By the way, what about std::stof during map load? Should I refactor it too? If yes, then where should the ParseFloat wrapper go?

Exceptions in DarkRadiant

stgatilov replied to stgatilov's topic in DarkRadiant Feedback and Development

Yes. Also, you can enable breaking on all exceptions in Exception Settings at some point and see where it breaks.

Exceptions in DarkRadiant

stgatilov replied to stgatilov's topic in DarkRadiant Feedback and Development

Looking at TDM code, exceptions are only used when a hard ERROR happens. At that moment all code execution is stopped regardless of where common::Error was called (stack unwinding is the main benefit of exceptions). But if you look closer, Carmack never cared about freeing resources when an exception is thrown. Some memory that was allocated directly is leaked, and the same probably happens for some other resources. In modern C++ programming that is considered completely unacceptable, but I have never seen any real problem for a TDM user due to it.

Also, you should note that programmers do change. At the time of writing idTech4, Carmack started using C++ without any prior experience or learning. idTech4 is "C with classes" partly because its authors were C programmers without enough C++ experience. I would be happy to see how his code style evolved as he gained experience, but unfortunately no later idTech engine is open source.

P.S. By the way, Google has banned exceptions in their code, as far as I remember. And it seems gamedev companies sometimes even completely disable support for exceptions in build settings, but that's too radical for normal C++ programming.
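A small sketch of the leak described above, using a hypothetical Resource type that counts live instances in place of memory or other resources: with a raw new/delete pair, the delete is skipped when an error throws in between, while a std::unique_ptr is released during stack unwinding.

```cpp
#include <cassert>
#include <memory>
#include <stdexcept>

// Hypothetical resource that tracks how many instances are alive.
struct Resource {
    inline static int alive = 0;
    Resource() { ++alive; }
    ~Resource() { --alive; }
};

// "C with classes" style: if the error fires, delete is never reached
// and the Resource leaks.
void rawStyle(bool fail) {
    Resource* r = new Resource();
    if (fail) throw std::runtime_error("hard ERROR");
    delete r;
}

// RAII style: the unique_ptr destructor runs during stack unwinding,
// so the Resource is freed on both paths.
void raiiStyle(bool fail) {
    auto r = std::make_unique<Resource>();
    if (fail) throw std::runtime_error("hard ERROR");
}
```

Catching the error from rawStyle(true) would leave Resource::alive at 1, which is the kind of leak an idTech4-style error path leaves behind.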

The topic of build time was left in a somewhat unfinished state (here). I have measured the time for a clean single-threaded build; here are the results:

* Debug x64: 534 sec -> 782 sec (+46%)
* Release x64: 544 sec -> 778 sec (+43%)

The results with a fully parallel build are closer to each other: 161 sec -> 195 sec (+21%). However, I think the single-threaded results are the most important to consider. It seems there was some sort of bubble in the DarkRadiant build, and when Eigen was added, some of its cost filled the bubble, so the wall-time increase was smaller than the increase in computation. However:

* We can improve parallelism (e.g. remove dependencies between projects) and remove the bubble; then the after/before Eigen ratio will become closer to the single-threaded results.
* The project will grow, and more code will be added (including Eigen-using code). The bubble can fill up by itself, and at that point the ratio will be closer to the single-threaded one too.

-

Decided to compare performance before and after Eigen:

* Before: 356dfb4e05f1ff6ea7f570376e6a2b4692ad581a "#5581: Fix lights not interacting due to a left-over comment disabling the RENDER_BLEND flag"
* After: 806f8d2aad9ef7a3d354e2681b71a7417be75e34 "Merge remote-tracking branch 'remotes/orbweaver/master'"

I built Release x64 and started DarkRadiant and TheDarkMod without a debugger attached. Then I opened the Behind Closed Doors map. I measured three things:

1. Map load time, as reported in the DarkRadiant console.
2. FPS while slightly moving the camera in the DR preview near the start.
3. Time for an empty "Update entities now" when connected to TDM (I added a ScopeTimer in the exportMap scope).

Results:

* Before: 13.05-13.09 map load, 41-43 FPS, 0.85-0.87 update map
* After: 13.35-13.41 map load, 38-40 FPS, 0.88-0.90 update map

I had to run everything many times and look at the last results. I also made sure TDM was not playing when I measured the first two numbers. To me it looks like a measurable difference, but a small one. It would be great if someone measured this on a different machine. Independent measurement is even more important because I sort of expected such results. It would be very surprising to see a big difference, since most of the tasks done by DR are not limited by math. And I was not surprised to see that Eigen is slower: while it can sometimes do 2 operations in 1 instruction, it has to perform a lot of shuffles all the time, which easily cancels out the potential benefits. Plus, the increased pressure on the optimizer should result in less inlining and fewer optimizations.
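For anyone repeating the measurement, here is a minimal RAII timer in the spirit of the ScopeTimer mentioned above. ScopeTimerSketch is a hypothetical stand-in, not DarkRadiant's ScopeTimer class; it measures wall time with std::chrono::steady_clock from construction to the end of the enclosing scope, stores it, and prints it.

```cpp
#include <cassert>
#include <chrono>
#include <cstdio>
#include <string>
#include <utility>

// Hypothetical RAII timer, not DarkRadiant's ScopeTimer class.
class ScopeTimerSketch {
public:
    ScopeTimerSketch(std::string label, double& elapsedMs)
        : _label(std::move(label)),
          _elapsedMs(elapsedMs),
          _start(std::chrono::steady_clock::now()) {}

    // Runs when the enclosing scope ends: stores and prints the elapsed time.
    ~ScopeTimerSketch() {
        const auto end = std::chrono::steady_clock::now();
        _elapsedMs = std::chrono::duration<double, std::milli>(end - _start).count();
        std::printf("%s: %.3f ms\n", _label.c_str(), _elapsedMs);
    }

    ScopeTimerSketch(const ScopeTimerSketch&) = delete;
    ScopeTimerSketch& operator=(const ScopeTimerSketch&) = delete;

private:
    std::string _label;
    double& _elapsedMs;
    std::chrono::steady_clock::time_point _start;
};
```

Usage: open a scope around the code of interest, e.g. `{ ScopeTimerSketch timer("exportMap", ms); /* export here */ }`, and the time is reported when the scope closes.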

-

Exceptions in DarkRadiant

stgatilov replied to stgatilov's topic in DarkRadiant Feedback and Development

Regarding actual rendering performance in the Release build, the following is probably not a good idea: std::map<Shader*, LitRenderables> _litRenderables; It is better to have one large std::vector and push_back records to it. When collection is over, std::sort it by shader, then extract intervals with the same shader into something like std::vector<std::pair<int, int>>. And do not shrink these vectors between frames, of course. Most likely it will help... but I can't be sure, of course.
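A sketch of that suggestion, assuming hypothetical Shader, Renderable and LitRenderableCollector types (not DarkRadiant's classes), and using std::size_t rather than int for the interval bounds: records are appended to one vector, sorted by shader pointer at the end of collection, and split into [begin, end) intervals. clear() keeps the capacity, so the vectors are not shrunk between frames.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical stand-ins for DarkRadiant's shader and renderable types.
struct Shader { int id; };
struct Renderable { int id; };

struct LitRecord {
    const Shader* shader;
    const Renderable* renderable;
};

class LitRenderableCollector {
public:
    using Interval = std::pair<std::size_t, std::size_t>;  // [begin, end) into records()

    // clear() keeps the allocated capacity, so steady-state frames do not reallocate.
    void beginFrame() {
        _records.clear();
        _intervals.clear();
    }

    void add(const Shader* shader, const Renderable* renderable) {
        _records.push_back({shader, renderable});
    }

    // Sorts by shader and records one interval per distinct shader.
    void finish() {
        std::sort(_records.begin(), _records.end(),
                  [](const LitRecord& a, const LitRecord& b) {
                      return std::less<const Shader*>()(a.shader, b.shader);
                  });
        std::size_t begin = 0;
        for (std::size_t i = 1; i <= _records.size(); ++i) {
            if (i == _records.size() || _records[i].shader != _records[begin].shader) {
                _intervals.emplace_back(begin, i);
                begin = i;
            }
        }
    }

    const std::vector<LitRecord>& records() const { return _records; }
    const std::vector<Interval>& intervals() const { return _intervals; }

private:
    std::vector<LitRecord> _records;
    std::vector<Interval> _intervals;
};
```

A renderer would then loop over intervals(), set up each shader once, and draw records() from begin to end.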

I was trying to understand why the DarkRadiant camera is so damn slow even in Release, and it turned out that exceptions are the problem. The Visual Studio debugger breaks program execution on EVERY exception thrown, even if the exception is caught immediately. Then it checks Exception Settings: did the user want to stop on this particular type of exception? If yes, execution stays broken; if not, the debugger continues execution. The "break on exceptions of type" feature of the debugger can be quite useful. However, having lots of exceptions thrown all the time makes it useless. Breaking and resuming the debuggee all the time is VERY slow. So when something throws std::bad_cast every frame, rendering becomes 20 times slower. Here is the pull request: https://github.com/codereader/DarkRadiant/pull/16

I think the same problem happens on map load: std::invalid_argument is thrown in huge quantities. And it is easy to fix: just add a utility function "bool ParseFloat(const std::string& text, float& result);" implemented via std::strtof, and call it everywhere instead of calling std::stof and catching the exception. I'm afraid C++ is not like Python, and exceptions should be used only in exceptional situations.
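A sketch of both non-throwing alternatives. ParseFloat follows the signature suggested in the post, but its body is an assumption: it parses with std::strtof, rejects empty input and trailing characters (stricter than std::stof, which ignores trailing text), and treats ERANGE as failure. Node and LightNode are hypothetical types showing the usual way to avoid std::bad_cast (pointer dynamic_cast instead of reference dynamic_cast), which is not necessarily what the pull request does.

```cpp
#include <cassert>
#include <cerrno>
#include <cstdlib>
#include <string>
#include <typeinfo>

// Hypothetical helper: parses the whole string as a float without throwing.
bool ParseFloat(const std::string& text, float& result)
{
    const char* begin = text.c_str();
    char* end = nullptr;
    errno = 0;
    const float value = std::strtof(begin, &end);
    if (end == begin) return false;     // no number at all
    if (errno == ERANGE) return false;  // out of float range
    if (*end != '\0') return false;     // garbage after the number
    result = value;
    return true;
}

// Hypothetical node types for the std::bad_cast case.
struct Node { virtual ~Node() = default; };
struct LightNode : Node {};

// Reference dynamic_cast throws std::bad_cast on every mismatch.
bool isLightThrowing(const Node& node)
{
    try {
        (void)dynamic_cast<const LightNode&>(node);
        return true;
    } catch (const std::bad_cast&) {
        return false;
    }
}

// Pointer dynamic_cast returns nullptr instead, so nothing is thrown.
bool isLight(const Node& node)
{
    return dynamic_cast<const LightNode*>(&node) != nullptr;
}
```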

-

Connection to TDM with automation

stgatilov replied to stgatilov's topic in DarkRadiant Feedback and Development

Linux build on GitHub CI fails on my fork:

    [ 35%] Building CXX object plugins/dm.gameconnection/CMakeFiles/dm_gameconnection.dir/GameConnectionDialog.cpp.o
    /home/runner/work/DarkRadiant/DarkRadiant/plugins/dm.gameconnection/GameConnectionDialog.cpp:8:10: fatal error: wx/activityindicator.h: No such file or directory
        8 | #include <wx/activityindicator.h>

It seems that the activity indicator is simply missing in the version of wxWidgets installed on the CI machine, i.e. wxWidgets is rather old there. Is it a big problem?

UPDATE: CMake says 3.0.4 is installed, but the activity indicator was added in 3.1.0.

First find a map with 1000 LOD entities, then bother. Indeed, if entities all think about LOD at the same time, it can cause a stutter. However, the code which checks LOD does not look heavy, and I'm not even sure 1000 entities would be enough. Moreover, the checks are actually spread randomly across frames:

    // add some phase diversity to the checks so that they don't all run in one frame
    // make sure they all run on the first frame though, by initializing m_TimeStamp to
    // be at least one interval early.
    // old code, only using half the interval:
    m_DistCheckTimeStamp = gameLocal.time - (int) (m_LOD->DistCheckInterval * (1.0f + gameLocal.random.RandomFloat()) );
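A hypothetical simulation of why phase diversity matters. LodChecker, makeCheckers and maxChecksPerFrame are invented for illustration and are not TDM code; unlike the snippet above, this sketch does not force a check on the very first frame. Entities that share one phase all run their distance check in the same frame, while random phases within the interval spread the checks across many frames.

```cpp
#include <algorithm>
#include <cassert>
#include <random>
#include <vector>

// Hypothetical periodic checker with its own phase.
struct LodChecker {
    int intervalMs;
    int nextCheckMs;  // absolute game time of the next check

    // Returns true if the (expensive) distance check should run this frame.
    bool shouldCheck(int nowMs) {
        if (nowMs < nextCheckMs) return false;
        nextCheckMs += intervalMs;  // keep the phase instead of resetting to nowMs
        return true;
    }
};

// Gives each checker a random phase in [0, intervalMs).
std::vector<LodChecker> makeCheckers(int count, int intervalMs, unsigned seed) {
    std::mt19937 rng(seed);
    std::uniform_int_distribution<int> phase(0, intervalMs - 1);
    std::vector<LodChecker> checkers;
    checkers.reserve(count);
    for (int i = 0; i < count; ++i)
        checkers.push_back({intervalMs, phase(rng)});
    return checkers;
}

// Simulates fixed-length frames and returns the most checks run in one frame.
int maxChecksPerFrame(std::vector<LodChecker>& checkers, int frameMs, int totalMs) {
    int worst = 0;
    for (int now = 0; now < totalMs; now += frameMs) {
        int checks = 0;
        for (auto& c : checkers) checks += c.shouldCheck(now) ? 1 : 0;
        worst = std::max(worst, checks);
    }
    return worst;
}
```

With 1000 synchronized checkers, every check lands in the same frame; with random phases and a 1000 ms interval at roughly 60 FPS, each frame sees only a small fraction of them.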