Posts posted by Frost_Salamander

-

Hi all! I've just built my first FM and would like to invite some people to beta test it. It's a small, traditional city/mansion style mission.

Once I have a few replies I'll start a thread in the beta testing forums.

Note: I am also on Discord, but my username there is Frost_Salamander. Admins: is it possible to get my username here changed to that? @greebo @taaaki ?

-

On 1/11/2013 at 5:55 PM, TheUnbeholden said:

Slight problem, the compass is point the wrong way (according to the map of the office, Gerrards office should be in the south east corner, but it shows up as the north east corner according to the compass.) its very unprofessional for our smuggler friends to draw a map without having your bearings.

I think mission authors do this on purpose, and I was going to ask why. Is it to make it more challenging? I personally have a terrible sense of direction and get lost easily, and stuff like this puts me off. Depending on the map, some places are more easily navigated than others. If a particular environment is very symmetrical (like a lot of office buildings) and the map is disorientating it's not fun IMHO. I know having terrible maps is part of Thief culture, but I wish wrong orientation wasn't part of it.

Just finished this with 2991 loot (short of 3000) on medium difficulty. I'm not the best at ferreting out all loot, but I'm not terrible either. Frustrating not to meet the loot goal.

Having said all that, it's very well done and challenging. I didn't feel like giving up on the mission objectives and enjoyed dealing with the AI, but felt the loot goal wasn't worth carrying on for. I did however finish the rest of the Corbin series without any difficulty, so this is just some (hopefully) constructive feedback.

-

I wonder if it's something to do with the resolution scaling. I have another strange issue where on some maps if I go underwater everything zooms in, and I lose all perspective. Was going to open a new issue but the screenshots were too big to upload. I mentioned it in the Air Pocket fan mission thread.

-

4 hours ago, Destined said:

Do you use any mods/patches? If I remember correctly, someone made a patch for a Thief 3 like compass and this sounds a bit like that.

No, none at all.

-

Just finished it.

Spoiler: I didn't encounter the gold bar or whatever everyone was talking about, I guess because I didn't make it into all the cabins on the sunken ship.

The underwater zoom issue makes the sunken ship unplayable for me (which is looking like it's something to do with my setup, and not the mission itself). I may try to put together some screenshots and ask about it in the tech support section.

-

Hey @Geep thanks for the detailed reply. It helps to know what's going on behind the scene for sure. And thanks for the hint - will be trying again, not giving up on this one!

-

I got out of the ship, but:

Spoiler: I found Emily in the boat, and it says 'just follow her ship'. But it doesn't move. What am I missing here?

-

OK my main monitor does have speakers, but I never use that. And yeah, although I said I keep my drivers up to date, I only really do it every couple months or so - will do that.

Also I put the multi-core back on, with no problems there it seems...

-

OK so I've tried to play this a couple of times and had to give up. I like that it's unique and the sense of claustrophobia at the start is real, so although I didn't really enjoy it I think it's intentional and achieved its design goal.

However the tight spaces and all the junk floating around just make it painful to navigate. For whatever reason every time I picked something up it would get thrown instead of being held (maybe because I'm in the water?). Also when I submerge it's difficult to see anything, even if I increase the gamma/brightness. I've also got a weird issue: when I got to the mess cabin, whenever I submerge my perspective totally changes - it's like when I go underwater everything seems like it's zoomed in like 4x. I lose all sense of perspective and can't orientate myself. It's hard to explain unless you see it. It was fine before that point - not sure what's happening. I experienced this in another mission as well (I think it was William Steele: The Barrens, in the canals).

Anyways - not sure if it's Dark Mod issues or something with the mission itself. I don't mean to criticize the mission design, I would love to play it as intended, and would like to try and finish this if anyone has any tips.

-

Played a few missions today and no issues with OpenAL EFX turned off. Perhaps that was the issue?

@nbohr1more it looks like from that log that it found OpenAL on all devices (including headphones, which would be the mainboard audio, which is what I always use) - what makes you think it only found it on Nvidia? BTW this audio support on video cards annoys me to no end - I can never get rid of them. At the risk of sounding ignorant, why does this exist? Do people plug their headphones into their monitors? How do you take advantage of this?

Had another problem though which I've not noticed before. When I activate the compass, it shows up in the bottom center just underneath the light gem, so it's half-hidden under that. Anyone seen this before?

-

6 minutes ago, Springheel said:

That feature has been known to create random crashes.

Yep, which is why I included that setting in my post. However it still happens with that turned off.

I am going to try some missions with OpenAL EFX turned off to see if that helps. Will be a few days though before I have feedback.

-

I'll try disabling EFX. I've been trying to get it to crash again with no success, although I did get the stuttering a couple of times when pressing escape to go to the menu. Also I can confirm the problem exists with multi-core turned off.

If I type com_allowAVX in the console I get:

Unknown command 'com_allowAVX'

Also, I didn't say in my system setup that I have a Dell Precision T7500 with stock motherboard. No idea what that supports in terms of sound...

-

Hi @Amadeus, no, it's not just your map, so don't worry.

It played really well to be honest, I didn't have any performance issues or anything.

BTW I did actually finish the mission, with

Spoiler: 2000 loot right on the nose! I was a bit relieved as I have no idea where the rest of it is!

-

Great mission - well done

Is it possible to

Spoiler: get to the area beyond the gates (the part where you can hear horses and see a guard walking around)? If so could use a hint...

-

Since I upgraded to 2.07 I keep getting crashes. It can happen at any time, but frequently it occurs when I press escape to pause and go to the main menu. Also sometimes when I quick-save. The music starts getting choppy, then I get the Windows spinny wheel, and then the 'this program is not responding' dialogue box before it exits. The most recent example is today when playing 'A Good Neighbor'. I have the resolution scaled to about 1/2 (as my graphics card can't hack 4K with its 3 GB RAM). Shadows are set to 'Maps' and Multi-core enhancement is 'On' (although I'm pretty sure I get the same problem with this turned off). Also I noticed that sometimes when I'm idle (like waiting for an AI to move), everything will start chugging, then recover.

It's not so bad that I can't play, but it's often enough that something is obviously wrong.

I've been keeping my video drivers up to date and all that. Not sure what it is. Also I couldn't quite figure out how to generate a crash log - happy to provide that if someone can tell me how to do it.

My system is:

- Windows 10

- Nvidia GTX 1060 3GB

- Xeon X5675 3.07 GHz

- 24GB RAM

- 4K monitor

-

So I just tried it in Windows and....it worked

The only things to be aware of are a couple of settings you need to tweak (both in the Docker settings UI):

- You need to 'share' a drive with Docker to allow the bind-mounting of directories - so just share the drive (or directory) containing your dark mod source

- Bump up the resources dedicated to Docker. The default for me was 2 GB RAM and 2 CPU cores. Increasing this allowed me to run a multi-threaded build. This is necessary in Windows because it's using Hyper-V.

Without the 2nd tweak, it failed for me in a similar manner as it did for you (i.e. cc1plus crash), so I suspect that was because you didn't dedicate enough cores to your VM.
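Since the amount of parallelism you can use depends on what Docker has been given, one option is to ask the environment for its CPU count instead of hard-coding a -j value. A minimal sketch follows; the build_jobs helper is hypothetical and not part of any build image, and the optional cap is just an illustration:

```shell
# Sketch: choose a parallel job count from the CPUs visible to this environment.
# build_jobs is a hypothetical helper, not something shipped with the image.
build_jobs() {
    max="${1:-0}"   # optional upper limit; 0 means "no limit"
    # nproc reports the CPUs available to the current process;
    # fall back to getconf, then to 1, if it is missing.
    n="$(nproc 2>/dev/null || getconf _NPROCESSORS_ONLN 2>/dev/null || echo 1)"
    if [ "$max" -gt 0 ] && [ "$n" -gt "$max" ]; then
        n="$max"
    fi
    echo "$n"
}

# Example use inside the container (build command is an assumption):
#   scons "-j$(build_jobs 12)"
```

If the build still falls over with the compiler crashing, lowering the cap is a cheap thing to try before changing anything else.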

-

OK - I managed with -j12 on my Ubuntu desktop, so the crash might have something to do with Virtualbox.

The 'proper' installation docs are here: https://docs.docker.com/engine/installation/linux/docker-ce/ubuntu/

Docker for Windows:

https://docs.docker.com/docker-for-windows/install/

You need to be running either Windows 10 Professional or Windows Server 2016 I think (or else you have to use the Docker Toolbox version, in which case you might as well just use Virtualbox). I don't know how many people are running either of those. I have a dual-boot system with Ubuntu Gnome / Windows 10 Pro, but I don't know what most of you guys are using.

So for Docker for Windows the default setting is to use Linux containers, but you can 'switch' it to use Windows containers. So to answer your question it should work in the same manner as running it on Linux. I can try it out later and see.

-

Just had a quick look at some current hosted CI services. There is a lot of good stuff out there that is free for open source projects. Most of them are Linux/Docker/Github-centric which would be fine if not for the Github thing, but I found one (AppVeyor) that seems to tick all the boxes for Windows:

- free for open source

- Windows / Visual studio build environments

- supports public Subversion repositories

Some more about their build environments: https://www.appveyor.com/docs/build-environment/

Only thing I'm not sure about is would the environment have everything required for building TDM? They have DirectX SDK and Boost - is there anything else it needs?

Another option for Windows might be Team Services, but it's hard to discern what the limitations are - looks like it's free for up to 5 people and might have to use Git (or TFS) as well: https://www.visualstudio.com/team-services/

-

OK - I've pushed my build image to dockerhub: https://hub.docker.com/r/timwebster9/darkmod-build/

There is a short blurb on there of how to run it. All you need to do is install docker and run that command. It only covers the simple case of a release build - no sense trying to cover every single use case at the moment.

Don't worry if you're pessimistic - I don't blame you. If it makes any difference, I do this sort of thing for a living and I wouldn't dream of doing any sort of Linux build any other way than this.

"Is it possible to build binary inside Docker and than to run it outside Docker on arbitrary Linux environment?"

Yes absolutely - this is what I'm in fact proposing. If you look at that command to run the darkmod build, it 'bind-mounts' (the -v argument) the source code directory from the host inside the container and then runs the build. When the build stops, the container exits and the newly built binaries will be there on your host filesystem, with no trace left of the container.
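As a rough sketch of what such a bind-mounted build looks like: the /darkmod mount point and the scons -jN build command below are assumptions for illustration, not necessarily what the published image expects (the blurb on Dockerhub has the real command). The DOCKER variable is just a hypothetical hook so the command can be previewed without running it.

```shell
# Sketch: run a build inside a container against source that lives on the host.
# Assumptions: the container path /darkmod and the 'scons -jN' command are
# placeholders; check the image's own instructions for the real values.
darkmod_build() {
    src_dir="$1"      # host directory containing the source checkout
    jobs="${2:-1}"    # number of parallel build jobs
    # --rm  removes the container once the build exits
    # -v    bind-mounts the host source directory into the container
    # -w    sets the working directory inside the container
    "${DOCKER:-docker}" run --rm \
        -v "${src_dir}:/darkmod" \
        -w /darkmod \
        timwebster9/darkmod-build \
        scons "-j${jobs}"
}

# Preview the command without running anything:
#   DOCKER=echo darkmod_build "$HOME/darkmod" 12
```

Because the source directory is bind-mounted rather than copied, anything the build writes under it ends up on the host and stays there after the container is gone.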

"The build environments are different, and TDM can build on one machine and fail to build on another one"

But why does this happen in the first place? It's because of inconsistent environments - the very thing that Docker fixes. Correct me if I'm wrong, but you can build for x86-64 on Ubuntu and the resulting binaries will run on Fedora right? If so, it doesn't matter what the build image uses for its OS. Now if the game runs on one machine and not the other, this is a different story (the runtime environment). Even if you did want different Linux build environments, all you would need to do is create a Docker image for each one. Again - this is where an automated CI system would help...

Also to be clear, containers are NOT VMs. I only used that term because it's the best way to describe it to someone who is not familiar with them. They run a single process only (not an entire OS), and their resource usage is limited to whatever resource the process running inside the container is using. There is lots of stuff on Google describing the differences. Basically a running container will just use whatever resources on the host that the process needs. Yes, there is overhead, but it's almost negligible. A key thing to understand about how they can do all this without being a true VM is that they also share the host operating system's kernel. Another way to think of it is a running container uses only the binaries from the image, but the resources and kernel of the host. A traditional VM allocates and reserves fixed CPU, memory and disk resources, as well as having a much higher overhead for the hypervisor.

Regarding running Dark Mod in Docker:

Let's keep this to just builds for now - I don't want to promise something that I can't do. I haven't looked into doing this and I don't know if it will work. Using a VM such as Virtualbox isn't a bad idea at all - and if it works then great. However I don't have any visibility of what you guys have been doing so I'm not aware of any problems you might have. If you need a way to share a VM image then I would use something like Vagrant. Again, if Docker is an option here - it uses less resources and startup time is way faster than using a traditional VM.

Having said all that, some preliminary searching looks sort of promising. One thing you can do with Docker is share hardware resources with the host. For example graphics cards, sound cards, the X11 display, and I/O devices. This is all done via bind-mounts of the /dev/xxx devices or UNIX sockets. Nvidia has got into the game for GPU processing tasks in datacenters: http://www.nvidia.com/object/docker-container.html (think cryptocurrency or AI computing).
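To give an idea of what sharing host resources looks like on the command line, here is an untested sketch. The image name and command are left as parameters because no such image exists yet, and run_with_host_devices and the DOCKER variable are hypothetical names. It uses docker's --device flag for the device nodes rather than a plain bind mount. /dev/dri is the usual path with open-source GPU drivers; proprietary Nvidia setups use different device nodes, and the X server may also need to be told to accept the connection.

```shell
# Sketch: start a container that can reach the host's X11 display, GPU and sound.
# Untested assumption: these paths match a typical Linux desktop.
run_with_host_devices() {
    image="$1"    # image to run (caller-supplied, none is published for this)
    shift         # remaining arguments are the command to run inside it
    # -e DISPLAY=...          pass the host's X11 display name through
    # -v /tmp/.X11-unix:...   bind-mount the directory holding the X11 UNIX socket
    # --device /dev/dri       expose the graphics device nodes
    # --device /dev/snd       expose the sound device nodes
    "${DOCKER:-docker}" run --rm \
        -e "DISPLAY=${DISPLAY:-:0}" \
        -v /tmp/.X11-unix:/tmp/.X11-unix \
        --device /dev/dri \
        --device /dev/snd \
        "$image" "$@"
}

# Preview the command without running anything:
#   DOCKER=echo run_with_host_devices some/image some-command
```

Every one of those flags ties the container to something on the host, which is exactly the trade-off with host dependencies.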

However once we start sharing host resources then you lose some of the benefits of using a container - that is we start introducing host dependencies. But it could end up being an easy way to share a current build for testing purposes - it would save having to send a ZIP file around - the current build would exist in Dockerhub which is a central location, and all one would have to do is pull and run the latest image.

-

You're right in that the Dockerfile itself doesn't do anything particularly clever, but the output of it is a docker 'image'. This image can then be run as a container (the 'VM') and it contains everything you need to build your project. What this means is nobody then has to worry about setting up their PC with all the required dependencies, etc. They don't have to even think about it.

I included the Dockerfile/docker image build to illustrate the entire process. Normally a 'user' (whether it's a real person or an automated CI process) doesn't have to worry about that part. Usually someone will create this Dockerfile and build the image. They then publish the image to Dockerhub. Then you, as a user, pull (download) the image to use. I haven't published an image, but if I had it would look like so:

docker pull darkmod/darkmod-build

Then you build the project using that image using 'docker run':

docker run <a bunch of arguments> darkmod/darkmod-build <some build command>

The 'advantage' is that the build environment is isolated from anyone's PC and is immutable. It will never change, ever, and will work every single time. For everybody. No matter what Linux distro they have running, or what state their OS is in.

Also - you don't even have to do the 'docker pull' - if the image in the 'docker run' command doesn't exist on your local filesystem docker will pull it automatically.

Github:

I'm sure you've all discussed the pros and cons of switching and I don't mean to rehash all of it here - just if code repo size was a blocker for it I would be happy to help look into possible solutions is all... :-)

I've used SVN longer than I've used Git, and I've been involved in a few real-world projects where they were migrated, and it's never a problem. Yes, it can be complicated but it doesn't have to be. I am no expert myself - I basically stick to push and pull, and you can merge via the Github UI, etc.

Docker:

So primarily I was suggesting using Docker as a build tool - not for running the game. Containers are primarily used for server applications or command-line tools. Having said that, it is possible to run UI apps in containers (I've run browsers in containers before - e.g. Selenium). I think you could use the X Window system in the container or use something like VNC server. I would have to look into this, but would be happy to do it if you think it would be useful - by the sounds of it it would be.

The scenario you mention (running the container on any distro) is indeed what you can do with Docker - and why it's so useful. You can even do this with Windows or Mac (although only running Linux containers). Windows containers exist now, but I have no experience at all with it so can't really say what's possible there.

Automated builds:

If we can use a free CI system then the 'administration efforts' would be minimal - especially if we use Docker. That's one of the reasons I'm suggesting it - to pave the way for stuff like this.

One final note in case it's not obvious - using Docker for builds does NOT replace the current build system (scons or whatever). That all stays put. It just runs the current build command in the container. It's the environment that changes, not the process.

-

To better illustrate, let's contrast the different ways of doing this:

The Old Way

1. Obtain a build machine (PC, VM, or server)

2. Install required OS (Ubuntu, Debian, Windows, Mac, etc)

3. Update OS

4. Download and install required OS dependencies (e.g. apt install mesa-common-dev, scons, subversion, etc).

5. Download and install required project dependencies (e.g. Boost). May require building from source, etc

6. Download project source

7. Run the build script, and hope (or pray) that it 'just works'. Which it won't.

8. Spend the next 3 hours figuring out what's wrong with your setup.

9. Finally get it working

10. Update some OS dependency, and find that your project build no longer works.

11. Someone updates the build script or adds a new dependency, and the build no longer works because they haven't updated the Wiki

12. etc, etc

Note that instead of step 5. people may opt to include libraries in the source tree. This leads to repository bloat.

The New Way (with Docker installed)

1. Download project source

2. Run single docker command. This always works because the 'build image' will have been updated with any required changes as well - if it hadn't the code wouldn't build for the person who made changes to the source code.

Note the 'build image' doesn't have to be built by the user - it can be shared via dockerhub and 'pulled' locally. This is done implicitly if it doesn't exist locally when you do a 'docker run'. The user doesn't have to know anything about it at all, in fact.

-

I'm not suggesting CMake. I'm not suggesting replacing scons. All I'm suggesting is a way to create and use a build environment that is instantly available to everyone and will work every single time.

It would be useful for those interested in Linux builds, or those who work on Mac or Linux. You can even run Docker on Windows, and build for Linux from your Windows environment. Or run it on Mac and also build for Linux. That's kind of the point.

You can think of Docker as an extremely lightweight Virtual Machine that you can spin up instantly (literally milliseconds) with any OS in order to run a particular task (in this case, to build Darkmod), and then it disappears. The OS (or image) you can build yourself and put anything you want in it (see the Dockerfile in the Github link I provided). Again, this is just one use case for Docker - the others aren't really applicable here.

If you don't have any interest or experience with Linux builds, then it won't be of interest - totally understandable. But for someone like me, who wants to build the project in the quickest and easiest way possible, and have it work every single time, it is very useful.

Regarding Windows - it's a different story and not really part of this Docker thing. But I was also looking at this whole thing from an automation angle like Tels mentioned in the first post in this thread. This is also doable with VS but like I said it would require a different approach.

You would get a better idea of how it's helpful if you worked through the Github example I provided - but if it's not your area of interest then by all means give it a miss.

Also - I would be interested in reading whatever article you said ridiculed Docker. The only people that I have heard ridicule Docker are those who have never used it and don't really understand the benefits of it. I've worked in software development for 18 years, and container technology is the single most useful thing to emerge in the mainstream in the past few years (along with cloud computing).

-

Grayman: believe me I understand where you are coming from....

This wouldn't require replacing scons or MSVS (I'm assuming this means Visual Studio). Rather it still uses them and complements them. Think of it as abstracting the build process up into another layer - one that is far easier to manage (both for newbies and experienced devs), as well as paving the way for other possible improvements like those mentioned earlier.

Also I'm not interested in suggesting anything goes into SVN - this is just a demonstration on how things can be improved.

For those interested, I've put what I have up on Github, with a readme: https://github.com/timwebster9/darkmod-build

-

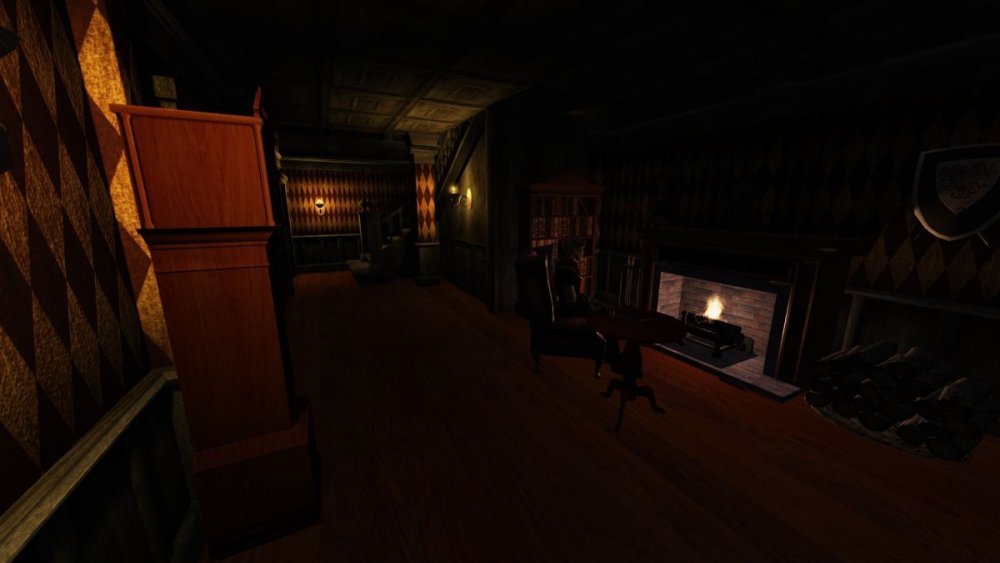

Beta testers wanted: The Hare in the Snare

in Fan Missions

Posted

some more screenshots: