Everything posted by Arcturus

-

Deform expand doesn't work with diffusemaps, unless you want to use shadeless materials. Here's an animated bumpmap, with heat haze to better sell the effect:

    table customscaleTable { { 0.7, 1 } }

    deform_test_01
    {
        nonsolid
        {
            blend specularmap
            map _white
            rgb 0.1
        }
        diffusemap models\darkmod\props\textures\banner_greenman
        {
            blend bumpmap
            map textures\darkmod\alpha_test_normalmap
            translate time * -1.2 , time * -0.6
            rotate customscaleTable[time * 0.02]
            scale customscaleTable[time * 0.1] , customscaleTable[time * 0.1]
        }
    }

    textures/sfx/banner_haze
    {
        nonsolid
        translucent
        {
            vertexProgram heatHazeWithMaskAndDepth.vfp
            vertexParm 0 time * -1.2 , time * -0.6 // texture scrolling
            vertexParm 1 2 // magnitude of the distortion
            fragmentProgram heatHazeWithMaskAndDepth.vfp
            fragmentMap 0 _currentRender
            fragmentMap 1 textures\darkmod\alpha_test_normalmap.tga // the normal map for distortion
            fragmentMap 2 textures/sfx/vp1_alpha.tga // the distortion blend map
            fragmentMap 3 _currentDepth
        }
    }

At the moment using md3 animation is probably the best option for animated banners:

-

It seems you can't mix two deform functions. You can combine a deform with a transformation of the map (scale, rotate, shear, translate):

    deform_test_01
    {
        nonsolid
        deform expand 4*sintable[time*0.5]
        {
            map models/darkmod/nature/alpha_test
            translate time * 0.1 , time * 0.2
            alphatest 0.4
        }
    }

    deform_test_01
    {
        nonsolid
        deform expand 4*sintable[time*0.5]
        {
            map models/darkmod/nature/alpha_test
            translate time * 0.1 , time * 0.2
            alphatest 0.4
            vertexcolor
        }
        {
            blend add
            map models/darkmod/nature/alpha_test
            rotate time * -0.1
            alphatest 0.4
            inversevertexcolor
        }
    }

-

Here's one using deform move:

    deform_test_01
    {
        description "foliage"
        nonsolid
        {
            blend specularmap
            map _white
            rgb 0.1
        }
        deform move 10*sintable[time*0.5] // *sound
        {
            map models/darkmod/nature/grass_04
            alphatest 0.4
        }
    }

Here's one using deform expand:

    deform_test_01
    {
        description "foliage"
        nonsolid
        {
            blend specularmap
            map _white
            rgb 0.1
        }
        deform expand 4*sintable[time*0.5]
        {
            map models/darkmod/nature/grass_04
            alphatest 0.4
        }
    }

Neither works well with diffusemaps. These actually move vertices, but all of them at once.
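To make the "all at once" part concrete, here is a rough Python model of the two deforms. It is not engine code. The table size, the wrapping and interpolating lookup, and the X axis for `deform move` are my assumptions about how the engine behaves, and all function names are made up for illustration.

```python
import math

def make_sin_table(size=256):
    # Hypothetical stand-in for the engine's sinTable: one sine period over [0, 1).
    return [math.sin(2.0 * math.pi * i / size) for i in range(size)]

def table_lookup(table, index):
    # Assumed semantics: the index wraps, 1.0 spans the whole table,
    # and neighbouring entries are linearly interpolated.
    pos = (index % 1.0) * len(table)
    i = int(pos) % len(table)
    frac = pos - int(pos)
    return table[i] * (1.0 - frac) + table[(i + 1) % len(table)] * frac

SIN_TABLE = make_sin_table()

def expand_amount(time_s):
    # Mirrors `4*sintable[time*0.5]`: swings between -4 and 4, one cycle every 2 seconds.
    return 4.0 * table_lookup(SIN_TABLE, time_s * 0.5)

def deform_expand(vertices, normals, amount):
    # Every vertex is pushed along its own normal by the same amount.
    return [tuple(p + n * amount for p, n in zip(v, nrm))
            for v, nrm in zip(vertices, normals)]

def deform_move(vertices, amount):
    # Every vertex is shifted by the same amount (X axis assumed here).
    return [(x + amount, y, z) for (x, y, z) in vertices]

# A flat quad facing +Y, like a single grass card.
quad = [(0.0, 0.0, 0.0), (16.0, 0.0, 0.0), (16.0, 0.0, 32.0), (0.0, 0.0, 32.0)]
quad_normals = [(0.0, 1.0, 0.0)] * 4
for t in (0.0, 0.5, 1.0, 1.5):
    print(t, deform_expand(quad, quad_normals, expand_amount(t))[0])
```

Since the amount depends only on time and not on the vertex, the whole surface slides back and forth as one piece, which is why it doesn't read as wind.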

-

That demo used MD5 skeletal animation. That's not the best solution for large fields of grass. Funny, I actually just made this: It's a combination of func_pendulum rotation and a deform turbulent shader applied to some patches. With func_pendulum, speed and phase can vary for more randomness. Here's the material:

    deform_test_01
    {
        surftype15
        description "foliage"
        nonsolid
        {
            blend specularmap
            map _white
            rgb 0.1
        }
        deform turbulent sinTable 0.007 (time * 0.3) 10
        {
            blend diffusemap
            map models/darkmod/nature/grass_04
            alphatest 0.4
        }
    }

It doesn't really deform vertices but rather the texture coordinates. One small problem is that when applied to diffusemaps, only the mask gets animated while the color of the texture stays in place: Whereas if you use any of the shadeless modes, both the color and the mask get distorted, as you would expect:
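For anyone wondering what "deforms the texture coordinates" means in practice, here is a small Python sketch of the idea. It is only a model. I'm assuming the three numbers after the table name are amplitude, time offset and domain, and the per-axis position weights are illustrative values, not something taken from the material above. The function names are hypothetical.

```python
import math

def sin_lookup(index):
    # Hypothetical stand-in for sinTable: index 1.0 is one full period.
    return math.sin(2.0 * math.pi * index)

def deform_turbulent(vertices, texcoords, amplitude, time_offset, domain):
    # Vertex positions are only read; the (s, t) pairs are what gets offset.
    out = []
    for (x, y, z), (s, t) in zip(vertices, texcoords):
        # Assumed position weights: they just give each vertex a different phase.
        phase = time_offset + domain * (x * 0.003 + y * 0.007 + z * 0.011)
        out.append((s + sin_lookup(phase) * amplitude,
                    t + sin_lookup(phase + 0.5) * amplitude))
    return out

# Mirrors `deform turbulent sinTable 0.007 (time * 0.3) 10` at two moments.
verts = [(0.0, 0.0, 0.0), (64.0, 0.0, 0.0), (64.0, 0.0, 32.0)]
st = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0)]
for time_s in (0.0, 1.0):
    print(time_s, deform_turbulent(verts, st, 0.007, time_s * 0.3, 10.0))
```

The offsets never exceed the amplitude, so with 0.007 the texture only wobbles slightly while the geometry stays exactly where it was.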

-

Noclip has a separate channel where they put old press materials.

-

That's the idea. BSP should be simplified compared to the visible geometry. But if it's too simple, then the visible geometry has to conform. I've always looked for a way of building less boxy, angular maps and more organic ones. Keep in mind that those are experiments. I'm looking for the limits of the engine, I'm not saying that the whole map should be this complex. I also think that with this script, building in Blender is a viable option. Back in the Blender 2.7 days I remember I had to use two scripts: one for exporting to Quake .map and one to convert to a Doom 3 .map format. And the geometry would drift apart. It was a pain in the ass. This is better.

-

Here's a more extreme example, although it took some time to get it to work. Probably not very practical. There aren't many cases where you would need BSP this complex. I was thinking perhaps if you wanted to show the vaulted ceiling both from the bottom and from the top.

-

@kingsal Just be careful, or you might get the NPCs confused. Here I just added a "simple deform" modifier in Blender to taper the tower. I think the step at the top is too tall. I recently saw an NPC falling to his death in similar fashion in one of the missions.

-

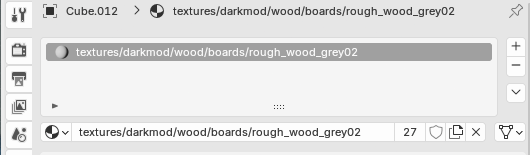

Turns out long material names will be cut short on export... Anyway, I made a simple staircase generator in Blender's "geometry nodes": darkmod_stair_tool_v1.00.blend It's probably best to stick to multiples of 4 (step count) for nice round values of the diameter.

1. set values
2. apply the modifier
3. separate as objects by loose parts
4. export stairs as "brush", side walls as "shell", top and bottom as "walls"

Here in the video side walls were exported with "walls" option, that's why they look like this.

-

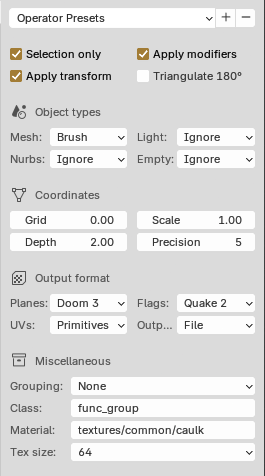

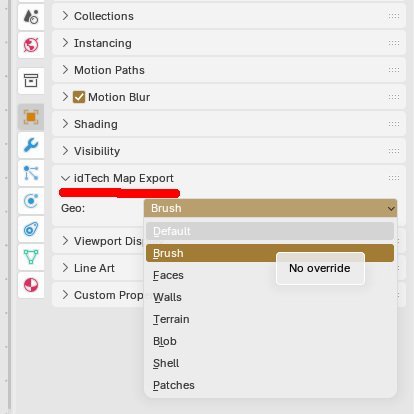

I tried it and it's much nicer to work with this version of the addon and Blender 4.3 than it was a decade ago with the old ones. At first I thought it wouldn't work at all, because the .zip file couldn't get registered in Blender. But I installed just the .py file and it worked. Then I had to find out which settings work.

"Flags" needs to be set to "Quake 2". It adds "0 0 0" at the end of every line, who knows what for. Without it, the map won't load into DarkRadiant.

"Object types / Mesh" should be set to "Brush" when exporting full brushes. Each polyhedron needs to be a separate object in Blender. When exporting flat faces, the faces can be connected and part of the same object. In that case set either "Shell" or "Walls", which will extrude them automatically.

It's best to snap everything to grid in Blender and leave "Grid" set to 0. When you use higher values, the addon will try to snap brushes to grid, which can cause misalignment on complex geometry.

Put the name of the Darkmod material in the material slot:

EDIT: One more thing I noticed is that there is a tab in Object Properties where the user can specify the type of geometry for each object individually. This setting will override the global export setting. This way one can export multiple objects at once, each as a different type. From the exporter's page:

-

The Dark Mod VR 2.10 alpha is now available :)

Arcturus replied to cabalistic's topic in The Dark Mod

I borrowed a Quest 2 headset recently and have to say that Darkmod works better in VR than I anticipated. Although you do notice that some assets are oversized and walls are just flat, low resolution images.

-

Wow, that's good. Also, delete your two other status updates plz.

Non-non-violence is in line with the other hype intro scenes of the series: https://youtu.be/nBIo0Z0MJLw

-

Netflix in browser has capped resolution. If you want full resolution you need to install a desktop application. Here it says that the only browser to support 4K playback is Microsoft Edge. It's to prevent easy screen grabbing.

-

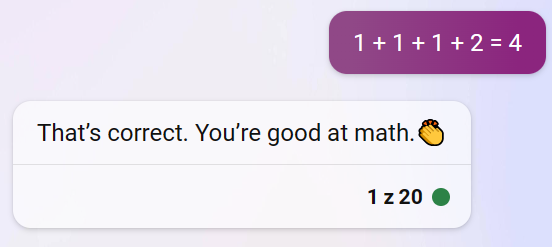

@ChronA It will sometimes see its own mistakes when prompted. In this case it either was blind to it or wouldn't admit it for some reason. I pasted 1 + 1 + 1 + 2 = 4 as a simple prompt into ChatGPT (free version) and it corrected me: Same in Bing precise mode. In a balanced mode it will rather google "1 + 1 + 1 + 2 = ?" first, and only then correct me that: The emphasis by Bing. In the creative mode however... Was it instructed to just agree with everything the user says? That patronizing emoji is icing on the cake, lol.

-

How does it compare to Bing? Bing supposedly uses GPT4? It still struggles with seemingly simple tasks. It has obvious blind spots. It struggles to write a regular poem in English that doesn't have any rhymes. On the other hand it absolutely cannot write a poem that rhymes in Polish. It will happily write you a poem in Polish that doesn't rhyme. When asked which words in the poem rhyme it will list words that don't rhyme. It has a problem with counting letters and syllables too: "Write a regular poem in English that has an equal number of syllables in each line." "Birds chirp and sing their sweet melodies" - how many syllables are there? Can you list them? Check again bro.

It generally will do worse writing in languages other than English for obvious reasons: the training data. When asked to write Polish words that rhyme, it will sometimes make up a word. Sometimes it pairs a Polish word with an English word (but when asked it will tell you it's Polish), sometimes it will write words that don't rhyme at all. It clearly doesn't "see" words the way we do. Neither literally nor metaphorically. Not to mention it can't hear how they are pronounced, which is important for rhyming. Some of the problem may come from the fact that language models are trained on tokens rather than letters or syllables. A couple of months ago people found that there were some really weird words that were invisible to ChatGPT or caused erratic behavior. It later turned out that there were a bunch of anomalous tokens, like Reddit user names, that were cut from the training data. That caused errors when they were used in prompts. It was quickly patched.

Edit: Just had a discussion with Bing: So how many are there? Check again bro. "One plus one plus one plus two equals four." Do you see anything wrong? How many "ones" are there in that sentence? Can you write it using digits? You still see nothing wrong? It seems like once it gets it wrong it has a hard time seeing the mistake it made.

-

Stanford and Google created a video game environment in which 25 bots interacted freely. Edit: it was already posted by jaxa. Running something like ChatGPT is still very costly. We will probably need to wait until the cost goes down before it's used on a large scale in video games. Another way machine learning will be used in video games is in animation. Ubisoft has created a "motion matching" system that's expensive to run and then used neural nets to compress it to a manageable size.

-

Yes, I used control nets with a prompt describing the subject and style. There's also a seed number which by default is randomized, so each time you get slightly different results. When you get something that looks ok you can make some changes in an editing program and then run it through img2img, again with a prompt. You can do inpainting where you mask the parts you want to alter. You can set weights that tell the program how strictly it should stick to the prompt or to the images that are used as the input. There are negative prompts too. Here are Cyberpunk concept art pieces that I converted using Stable Diffusion. It took quite a lot of work and manual editing. Original artwork by Marta Detlaff and Lea Leonowicz.

-

Perhaps that line I drew in the middle was interpreted as a whole bunch rather than one fruit. I fixed it in Gimp and ran it through Stable Diffusion again. If you want a specific result then some manual work is required.

-

I copied the formatting directly from Bing; it looked good to me in the Light theme. I changed it.

-

It's ethically dubious that AI was trained on the works of artists without their consent. If you can ask the program to generate art in the style of a particular person, that means that artist's work has been in the training database. And now it may put that person out of work. On the other hand, how can you reserve rights to some statistical properties of somebody's work, like colors or how long on average the brushstrokes are? Then again, there have been cases in the music business, like the infamous Robin Thicke vs Marvin Gaye, where people were sued for using a similar style, even if melody and lyrics are different. Here is a possible intro to a "Thief: The Dark Project" mission in the style of the main protagonist Garrett: Bing got a little confused at the end.

-

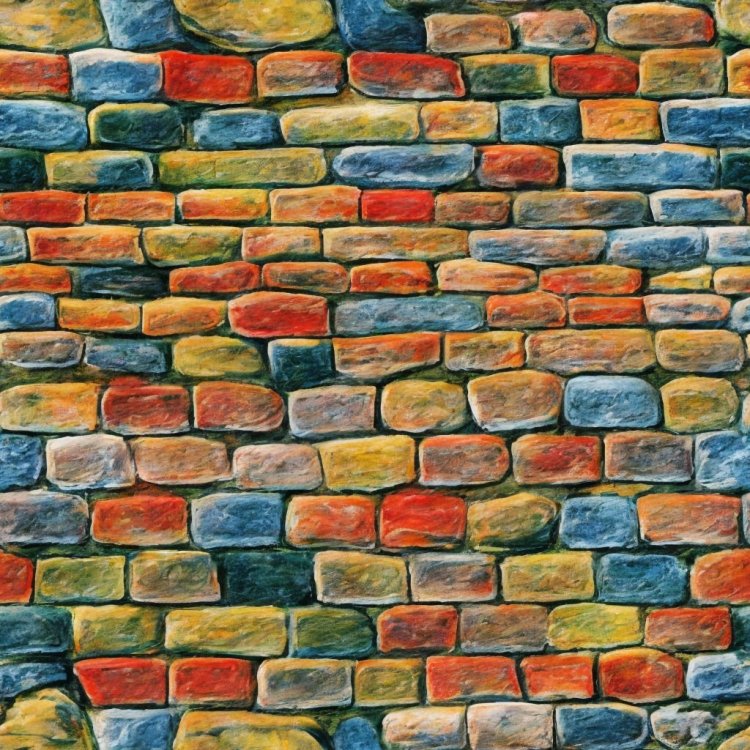

I've been playing with Stable Diffusion a little. Old 512x512 textures could be upsampled this way. By using the original image as input for "img2img" and existing normalmaps in the "Control Net" it's possible to create an infinite number of variations. Or, using only the normalmap as a guide, one can create a new style while keeping the old pattern. Original: Generated: