Posts

7245

Days Won

280

Everything posted by stgatilov

-

Unable to link openal during compilation

stgatilov replied to Alberto Salvia Novella's topic in TDM Tech Support

Does it support updating an existing installation? If people uninstall and reinstall the game every year, they will generate unnecessary traffic spikes.

UPDATE: By the way, I don't think a system-wide installation is a good idea. The TDM installation is designed as user-only: it does not need admin rights, and everything is installed into one directory. Trying to turn this into a system-wide installation is fragile and pointless.

Unable to link openal during compilation

stgatilov replied to Alberto Salvia Novella's topic in TDM Tech Support

1. Try changing OFF to FALSE in the line with POSITION_INDEPENDENT_CODE.
2. Try adding this line after the minimum required version: cmake_policy(SET CMP0083 NEW)
3. Post your GCC version.

Unable to link openal during compilation

stgatilov replied to Alberto Salvia Novella's topic in TDM Tech Support

Hey! Do you have a CMake newer than 3.14? It should pass -no-pie to the linker automatically.

Unable to link openal during compilation

stgatilov replied to Alberto Salvia Novella's topic in TDM Tech Support

I have chosen approach 1. Starting from SVN rev 9396, the compiler version is no longer included in the platform name for third-party artefacts. It is now just "win64_s_vc_rel_mt" and "lnx64_s_gcc_rel_stdcpp".
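The naming change can be pictured with a small sketch. It assumes the platform name is assembled from an OS tag, bitness, compiler and runtime in the layout visible in the names quoted in this thread; the function names and the fixed `s` and `rel` fields are hypothetical and are not taken from the actual conan or CMake scripts.

```python
def compiler_major(version):
    """Extract the major number from a version string such as '9.3.0'."""
    return int(version.split(".")[0])


def platform_name(os_tag, bits, compiler, runtime, major=None):
    """Build a platform tag; the field layout is inferred, not official.

    Passing `major` gives the old style with the compiler version in the
    name, leaving it out gives the style without the version.
    """
    version = "" if major is None else str(major)
    return f"{os_tag}{bits}_s_{compiler}{version}_rel_{runtime}"


# Old style: the compiler major version is part of the name.
old_name = platform_name("lnx", 32, "gcc", "stdcpp", compiler_major("5.4.0"))
# New style: one name regardless of the installed compiler version.
new_name = platform_name("lnx", 64, "gcc", "stdcpp")
```

With the version left out, the side that builds artefacts and the side that consumes them produce the same name for any GCC version, which is the point of the change.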

Unable to link openal during compilation

stgatilov replied to Alberto Salvia Novella's topic in TDM Tech Support

I don't know. Conan has some "options", but I don't think you can pass arbitrary flags through them.

-

Hello again, @Kowmad!

Over the long year, we have revamped our system of mirrors, both for the game itself and for the FMs. Now it should be much easier and safer to add an HTTP server to the mirrors. We would be happy to add your server as a mirror if you still want to do so!

The hard requirements are:

1. The TDM game takes 6 GB, and the FMs take 9 GB. So you need at least 20 GB of storage.
2. The server must allow downloading files over HTTP by direct URL. That's what HTTP servers always do, actually.
3. Run an rsync pull every hour/day/week to synchronize data.
4. For a TDM game mirror, the server must support multipart byteranges. For an FMs mirror, it is not necessary.

As for other things, the outbound traffic on mirrors is rather high. The rough order of magnitude is a terabyte per month.
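A quick way to check the multipart byteranges requirement is to request two ranges in one GET and look at the reply. The sketch below is only an illustration in Python using the standard `http.client` module: the function names, the default ranges, and the 20-second timeout are assumptions, and it is not how the installer itself talks to mirrors. A server that supports the feature is expected to answer with status 206 and a `multipart/byteranges` content type.

```python
import http.client
from urllib.parse import urlsplit


def build_range_header(ranges):
    """Format [(first, last), ...] as an HTTP Range header value."""
    return "bytes=" + ",".join(f"{first}-{last}" for first, last in ranges)


def supports_multipart(status, content_type):
    """True if the reply to a multi-range request is a multipart 206."""
    media_type = (content_type or "").split(";")[0].strip().lower()
    return status == 206 and media_type == "multipart/byteranges"


def probe(url, ranges=((0, 9), (100, 109))):
    """Send one multi-range GET to `url` (any file on the candidate mirror)."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=20)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=20)
    try:
        headers = {"Range": build_range_header(ranges)}
        conn.request("GET", parts.path or "/", headers=headers)
        response = conn.getresponse()
        content_type = response.getheader("Content-Type")
        return supports_multipart(response.status, content_type)
    finally:
        conn.close()
```

A server that ignores the header replies with 200 and the whole file, and some servers merge or refuse multiple ranges, so a negative result only says that this particular request was not served as multipart.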

-

Unable to link openal during compilation

stgatilov replied to Alberto Salvia Novella's topic in TDM Tech Support

Go to ThirdParty\custom\openal\CMakeLists.txt and add this line before add_subdirectory:

    set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -fcommon")

Then run 1_export_custom.py, 2_build_all.py, and the TDM build again. Does the problem go away then?

Unable to link openal during compilation

stgatilov replied to Alberto Salvia Novella's topic in TDM Tech Support

Yes, no-pie is added only for 64-bit Linux for some reason... @cabalistic, I guess we can enable it everywhere? For instance, do a check like this:

    check_cxx_compiler_flag(-no-pie CXX_NO_PIE_FLAG)
    if (CXX_NO_PIE_FLAG)
        set_target_properties(TheDarkMod PROPERTIES POSITION_INDEPENDENT_CODE OFF)
        set(CMAKE_EXE_LINKER_FLAGS "${CMAKE_EXE_LINKER_FLAGS} -no-pie")
    endif()

By the way, it is weird to see two commands doing the same

-

Network is an interesting thing: if something about it can possibly fail, then it will surely fail for someone sooner or later.

I cannot believe it actually hangs, since the default CURL behavior is a 300-second timeout on establishing a connection. I think it can be lowered to e.g. 20 seconds.

Where did you find it?

The installer always does that. It should not be a problem.

Yes, closing the window does not terminate the program. I think I should tie closing the GUI to canceling the download on the last page, and simply block the cross icon at all other moments.

-

Unless you have "seta com_allowConsole 1" in darkmod.cfg, in autoexec.cfg, or in the command-line parameters, the console is toggled by Ctrl+Alt+Tilde. If you have this cvar set, then it is toggled by just Tilde. I don't know of any other way to disable/enable the console.

-

Unable to link openal during compilation

stgatilov replied to Alberto Salvia Novella's topic in TDM Tech Support

Ok, people can use prebuilt artefacts, they just can't build and replace them if they have a different GCC. I like two possibilities:

1. Remove the compiler version from the platform name.
2. Leave everything as is and say that THIRDPARTY_PLATFORM_OVERRIDE should be used in this case.

Unable to link openal during compilation

stgatilov replied to Alberto Salvia Novella's topic in TDM Tech Support

Yes, but it is rather unintentional that people with Linux and GCC cannot use our prebuilt artefacts if their GCC version is different from 5. The artefacts should be perfectly usable. Do you have any idea how to resolve this?

Testers and reviewers wanted: BFG-style vertex cache

stgatilov replied to cabalistic's topic in The Dark Mod

I believe there are cvars for that. Also, static data is now stored in a separate VBO again: 5598

-

Hmm... To be honest, I don't think it is a best practice of Git. Maybe a best practice of GitHub. I see two reasons for protecting the main branch:

1. External contributions from people who have no access and whom nobody even knows.
2. Enforcing code review on all changes.

Frankly speaking, I think neither of these points applies in our context. External contributions are maybe possible after the map is released, but not while it is in development. Code review only makes sense for scripts and decls, but I think nobody will bother with it.

No, it does not. GitHub exposes its repositories via the SVN protocol, which allows you to use an SVN client (until someone decides to use some "fancy" feature like submodules or LFS, of course). A colleague of mine used TortoiseSVN in one very small project with a git repo. He did not notice any problems, even though nobody even knew about it. Unless people are really going to make many branches, using an SVN client might be an option.

Of course locking support is not required: you can always implement it outside of the VCS. Just say "I'm going to work on these assets, don't change them please". That's implicit locking. Even with SVN, you can still forget about locking, make your changes, and then find out that they conflict with something.

I don't think pull requests are needed here. Even with SVN, you can simply update the file, see a conflict, click "resolve with mine", and thus happily overwrite it.

-

Unable to link openal during compilation

stgatilov replied to Alberto Salvia Novella's topic in TDM Tech Support

Yes, it is rather strange how it works with prebuilt libs. The major version of the compiler is automatically included in the platform name when artefacts are built, but it is hardcoded when artefacts are used. There are two options:

1. Use CMAKE_CXX_COMPILER_VERSION to deduce the correct platform name when using artefacts.
2. Remove the major version of the compiler from the platform name when building artefacts.

The problem with the first approach is that it will force everyone without GCC 5 to build the artefacts themselves, which defeats the purpose of storing artefacts at all. The problem with the second approach is that static libraries on VC are incompatible between versions. At least they were before VS2015: the newest three versions should be compatible.

It boils down to defining some tag of binary compatibility. In the current implementation it has to be generated both in conan and in cmake, and the two must be the same.

P.S. If you want to always build everything from sources, you can simply use the platform override. It is described in the "Unsupported platform" section of ThirdParty/readme.md. In this case you will get away completely from these weird lnx32_s_gcc5_rel_stdcpp names.

-

One interesting idea for mappers would be to create a repo on GitHub, and then use TortoiseSVN client exclusively to work with it. No hassle with branches, pulls/pushes, rebases, etc, no chance to lose data. Just SVN update, then work with FM, then SVN commit. Indeed, sooner or later you'll get conflicts, and that would be a serious challenge regardless of VCS/client. It would be perfect if GitHub supported SVN locks too, but I'm afraid it does not. Real SVN hosting is needed if you want to lock files.

-

I hoped for something simple, but it indeed looks complicated. As expected.

Why do you suggest using pull requests? Reviewing map changes is futile in many cases. Wouldn't it be easier to work on one branch in one repo and push directly? Or is it a workaround for git's ability to easily lose data?

Why do you suggest rebasing? That's an easy way to lose data. Better to just merge everything.

-

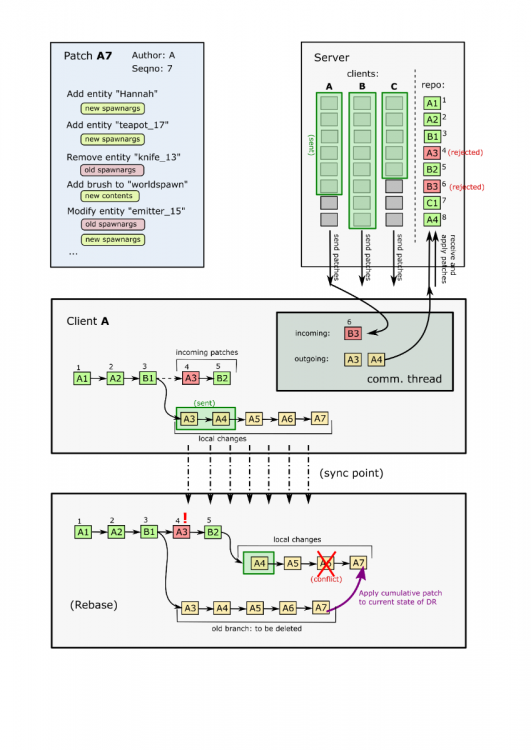

Ok, let's dream a bit.

Suppose that we have one server, which stores the map in something like an SVN repo: an initial revision and a sequence of patches. The server is going to work as the only source of truth about the map state.

Suppose that all assets are stored on a mounted network disk. As far as I understand, Google Drive can be mounted as a local disk. I guess such a simple approach is enough.

Suppose that we have a framework for map diffs/patches with the following properties:

1. It is enough to find diffs on a per-entity and per-primitive level, so that a change in entity spawnargs stores all spawnargs of the updated entity, and a change in a primitive stores the full text of the updated primitive. No need to go down to the level of "add/remove/modify a spawnarg". A modification inside a patch should store both the old and the new state.
2. Simultaneously changing spawnargs on the same entity or changing the same primitive results in a conflict, which can be detected reliably by looking at the patches.
3. A sequence of compatible patches can be quickly squashed together, which might be convenient in some cases. Patches can also be reversed easily.
4. It is possible to quickly save a diff between the current DR state and the previous such moment. I guess that's what hot reload already does, except that perhaps it is too careless with primitives now.
5. It is possible to quickly apply a patch, as long as it does not result in a conflict (a conflict happens e.g. when we modify entity spawnargs which are different from the old state stored in the patch).

Since DarkRadiant is a complex thing, applying patches cannot happen at an arbitrary moment. If we have a dialog opened, and we apply some patch which adds/removes some item from this dialog, that could easily result in a crash. Cleaning up all the code for such cases is too unreliable and too much work. It is much easier to simply say that patches can only be applied when no dialogs are active, no edit box is active, etc.
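The patch properties listed above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not DarkRadiant code: the map is reduced to a dictionary from entity or primitive name to its full text, a patch maps each changed name to an `(old, new)` pair with `None` meaning "absent", and all function names are made up for the illustration.

```python
class Conflict(Exception):
    """Raised when a patch does not fit the state it is applied to."""


def diff(before, after):
    """Store the old and the new full text of every changed item."""
    keys = before.keys() | after.keys()
    return {k: (before.get(k), after.get(k))
            for k in keys if before.get(k) != after.get(k)}


def conflicts(state, patch):
    """Items whose current text differs from the old text in the patch."""
    return sorted(k for k, (old, _new) in patch.items() if state.get(k) != old)


def apply_patch(state, patch):
    """Return the patched copy of state, or raise Conflict."""
    bad = conflicts(state, patch)
    if bad:
        raise Conflict(bad)
    result = dict(state)
    for key, (_old, new) in patch.items():
        if new is None:
            result.pop(key, None)  # the item was deleted
        else:
            result[key] = new      # the item was added or modified
    return result


def reverse(patch):
    """Swap old and new, so that applying the result undoes the patch."""
    return {k: (new, old) for k, (old, new) in patch.items()}


def squash(first, second):
    """Combine two consecutive patches into one equivalent patch."""
    merged = dict(first)
    for key, (old, new) in second.items():
        if key in merged:
            if merged[key][1] != old:
                raise Conflict([key])
            old = merged[key][0]
        merged[key] = (old, new)
    # Entries that end up where they started are dropped.
    return {k: v for k, v in merged.items() if v[0] != v[1]}
```

Because every entry carries the old state, conflict detection, reversal, and squashing need nothing but the patches themselves, which is why the old state is worth storing.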
Now in order to turn DarkRadiant into a multiplayer game, we only need to invent some protocol.

The server simply receives all patches from all clients and commits them to its repository in order of arrival (creating new revisions). Every diff should have a unique identifier (not just a hash of its content, but the identifier of the client plus the sequence number of the diff on his side). If applying a patch results in a conflict, then a new revision is still created, but it is marked with a "rejected" flag and it does not change anything (empty diff). Storing rejected revisions is necessary in order for the client to know that his patch was rejected. Also, the server remembers which revision was last sent to each client. It actively sends patches of newer revisions to the clients.

The client has a persistent thread which communicates with the server in an endless loop. It has a queue of outgoing patches, which it sends to the server sequentially, and a queue of incoming patches, which it populates as it receives more data from the server. This thread never does anything else: the main thread interacts with the queues when it is ready.

The main thread of the client works mostly as usual. When the user changes something, we modify all the usual data and add a patch to a patch queue. Note that this is different from the outgoing patch queue in the communication thread. Also, this queue is divided into two sections: some beginning of the queue is "already sent to server", and some tail is "not sent to server yet". When the user changes something, we compute a diff and add a patch to the "yet-unsent" tail of the queue.

We establish a "sync point" when 1) no dialog is open (i.e. DR is ready to be updated), and 2) the patch queue is not empty or 5 seconds have passed since the previous sync point. The sync point is going to be complicated:

1. Pull all the received ingoing patches from the communication thread.
2. Compute the patch from the current state to the latest state received from the server. Take our patch queue and reverse it, then concatenate the ingoing patches, and finally squash the whole sequence into one patch.
3. Apply this patch to the current DR state, and you get the server revision. That's the most critical point, since all data structures in DR must be updated properly.
4. Look which of the "already sent" patches in our queue are contained among the ingoing patches. These were already incorporated on the server, so we drop them from our queue. If some of our patches were committed but rejected, we should quickly notify the user about it (change dropped).
5. Go sequentially over the remaining patches in our queue, and apply them to the current state of DR, updating all structures again. If some patch cannot be applied due to a conflict, then we drop it and quickly notify the user. The already-sent patches remain already-sent, and the not-yet-sent patches remain not-yet-sent.
6. Copy all not-yet-sent patches into the outgoing patch queue of the communication thread, and mark them as "already sent".

Of course, when users try to edit the same thing, someone's changes are dropped. That's not fun, but it should not be a big problem for fast modifications. The real problem is with slow modifications, like editing a readable: a user can edit it for many minutes, during which no sync points happen. If someone else edits the same entity during this time, then all his modifications will be lost when he finally clicks OK. I think this problem can be gradually mitigated by adding locks (on entity/primitive) to the protocol: when a user starts some potentially long editing operation, the affected entity is locked, and the lock is sent to the server, which broadcasts it to all the other clients. If a client knows that an entity is locked, it forbids both locking it with some dialog and editing it directly.
Note that synchronization of locks does not require any consistency in time: it can be achieved by sending entity names as fast as possible, without complex stuff like queues, sync points, etc.

The system sounds like a lot of fun. Indeed, things will often break, in which case DR will crash, and the map will become unreadable. Instead of trying to avoid it at all costs, it is much more important to ensure that the server stores the whole history and provides some recovery tools like "export map at the revision which is 5 minutes older than the latest one".

I'm pretty sure starting/ending a multiplayer session would be torture too. Blocking or adjusting the behavior of File menu commands is not obvious either.

UPDATE: added an illustration (image 1, above).
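The server and the sync point can be sketched as a toy simulation. It is a simplification under stated assumptions rather than the proposed implementation: the map is a dictionary from entity name to text, a patch maps a name to an `(old, new)` pair, the communication thread is replaced by direct calls, and the client keeps a copy of the last known server state instead of reversing and squashing its queue. All class and method names are hypothetical.

```python
def has_conflict(state, patch):
    """A patch conflicts when a stored old value differs from the state."""
    return any(state.get(key) != old for key, (old, _new) in patch.items())


def apply_patch(state, patch):
    """Return a copy of state with every (old, new) entry applied."""
    result = dict(state)
    for key, (_old, new) in patch.items():
        if new is None:
            result.pop(key, None)
        else:
            result[key] = new
    return result


class Server:
    def __init__(self, initial):
        self.state = dict(initial)
        self.revisions = []  # (patch_id, patch, rejected), in arrival order

    def commit(self, patch_id, patch):
        rejected = has_conflict(self.state, patch)
        if rejected:
            patch = {}  # rejected revisions are stored as empty diffs
        self.state = apply_patch(self.state, patch)
        self.revisions.append((patch_id, patch, rejected))

    def since(self, revision):
        return self.revisions[revision:]


class Client:
    def __init__(self, name, initial):
        self.name = name
        self.server_state = dict(initial)  # last server revision we know
        self.state = dict(initial)         # what the user currently sees
        self.seen = 0                      # server revisions incorporated
        self.queue = []                    # [patch_id, patch, already_sent]
        self.counter = 0
        self.dropped = []                  # patch ids to report to the user

    def edit(self, key, new_text):
        self.counter += 1
        patch = {key: (self.state.get(key), new_text)}
        self.state = apply_patch(self.state, patch)
        self.queue.append([(self.name, self.counter), patch, False])

    def sync(self, server):
        incoming = server.since(self.seen)
        self.seen += len(incoming)
        own_ids = {entry[0] for entry in self.queue}
        for patch_id, patch, rejected in incoming:
            self.server_state = apply_patch(self.server_state, patch)
            if rejected and patch_id in own_ids:
                self.dropped.append(patch_id)
        # Patches the server has already processed leave our queue.
        processed = {patch_id for patch_id, _patch, _rej in incoming}
        pending = [e for e in self.queue if e[0] not in processed]
        # Replay the remaining local patches on top of the server state.
        state, self.queue = dict(self.server_state), []
        for entry in pending:
            if has_conflict(state, entry[1]):
                self.dropped.append(entry[0])
            else:
                state = apply_patch(state, entry[1])
                self.queue.append(entry)
        self.state = state
        # Hand over everything not yet sent and mark it as sent.
        for entry in self.queue:
            if not entry[2]:
                server.commit(entry[0], entry[1])
                entry[2] = True
```

If two clients edit the same entity and the first one syncs first, the second client sees the conflict at its own sync point, drops its patch, and ends up with the server's version. The hard part, updating live editor data structures while patches are rolled back and replayed, is exactly what this toy leaves out.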

-

In principle, there are three known approaches to collaborating on a project:

1. Lock + modify + unlock. When someone decides to change a file, he locks it, then changes it, uploads a new version, and unlocks it. If the file is already locked, then you have to wait before editing it. Or you can learn who holds the lock and negotiate with him.
2. Copy + modify + merge. When you want to modify a file, you get a local copy of it, change it, then try to upload/commit the changes. If several people have modified the same file in parallel, then whoever did it last has to merge all changes into a single consistent state. Merging is usually possible only for text documents: sometimes it happens automatically (if the changes are independent), sometimes it results in conflicts that people have to resolve manually.
3. Realtime editing. The document is located on a central server, and everyone can edit it in realtime. Basically, that's how Google Docs works. I'd say internally it is the lock+modify+unlock approach, but with very small changes and fast updates.

@MirceaKitsune asks for approach 3. I guess it would require tons of work in DarkRadiant.

Approach 2 can be achieved by using some VCS like SVN, mercurial, git, whatever. But it requires good merging in order to work properly. Text assets like scripts, xdata, defs, and materials should merge fine with built-in VCS tools. Binary assets like images, models, and videos are completely unmergeable: for them approach 2 is a complete failure. As far as I understand, @greebo wants to improve merging for map files, which are supposedly the most edited files.

Approach 1 can be achieved by storing the FM on a cloud disk (e.g. Google Drive) and establishing some discipline. Like posting a message "I locked it" on the forum, or in a text file near the FM on Google Drive. SVN also supports file locking, so it can be used for unmergeable assets only and on a per-file basis. Git also has an extension for file locking, as far as I remember.

-

SVN also has a file locking feature. I think mappers implement manual locking when they work on a map on Google Drive: someone says "I'm editing it now", then uploads his changes and says "I have done with it, the map is free". That's exactly the locking paradigm, but applied to the whole map. SVN locking was designed for working with files which are unmergeable (like models or images).

By the way, does anyone know what the status of the betamapper SVN repo is? Who are the intended users of this repo?

Speaking of map diff, wouldn't it be enough to normalize the order of entities and primitives, and run an ordinary diff afterwards? UPDATE: By the way, the hot-reload feature already computes some diff for the map file, but it is only interested in entities, which can be easily matched by name, so it is very basic.

I think asking mappers to learn git is overkill. If you wrap the whole of git into simple commands, then it has some chance. There are some non-programmer VCS, and they are usually very simple. For instance, Sibelius stores versions in the same file, and allows switching between them and viewing diffs. I did not even find any merging there. Word and SharePoint also seem to use a sequence of versions, but Word at least has merging (of two documents without a base version, I suppose).

It is strange to suggest that mappers learn the VCS which is the hardest to learn and use out of all the possibilities. Git does not provide any additional capabilities over mercurial, but it pushes a lot of terms onto the user, which he regularly bumps into and has to learn eventually. Plus it is very easy to lose data. Git ninjas know that deleted branches are not lost: just look at the reflog... but for a non-ninja it is the same as "lost".
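The "normalize, then run an ordinary diff" idea can be tried on a toy model. The sketch below assumes a map is a list of entities, each with a name, a dictionary of spawnargs, and a list of primitives given as plain text; this structure and the function names are made up for the illustration and have nothing to do with the real .map parser. The ordinary diff is Python's standard `difflib.unified_diff`.

```python
import difflib


def normalize(entities):
    """Render entities as text lines in an order-independent way."""
    lines = []
    for entity in sorted(entities, key=lambda e: e["name"]):
        lines.append(f"entity {entity['name']}")
        for key, value in sorted(entity["spawnargs"].items()):
            lines.append(f'  "{key}" "{value}"')
        # Primitives have no names here, so they are ordered by their text.
        for primitive in sorted(entity["primitives"]):
            lines.append(f"  primitive {primitive}")
    return lines


def map_diff(old, new):
    """Ordinary line diff of the two normalized maps."""
    return list(difflib.unified_diff(normalize(old), normalize(new),
                                     fromfile="old", tofile="new",
                                     n=0, lineterm=""))
```

Reordering entities or primitives then produces an empty diff, and a changed spawnarg shows up as one removed and one added line. Since primitives are matched only by their text, a modified primitive appears as a removal plus an addition, which is the weak spot of this approach.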

-

We have lost a member of the TDM development team

stgatilov replied to Springheel's topic in The Dark Mod

It is often missed that Grayman was also Lead Programmer of the TDM team for six years.

We have lost a member of the TDM development team

stgatilov replied to Springheel's topic in The Dark Mod

Of course, it is only about the two missions which were not released. Nobody ever talked about removing already released FMs. I have added the question to my previous post.

We have lost a member of the TDM development team

stgatilov replied to Springheel's topic in The Dark Mod

Grayman repeatedly said that if he does not have time to release these two FMs, then the story of William Steele ends on the fifth mission. I specifically asked him about it in March and here is his answer: So unless anyone wants to ignore his will, WS6 and WS7 are dead too.

-


@Caverchaz, I have added some retry capabilities to the installer. Ideally, it should be able to overcome the problem you had.

Unfortunately, I cannot test it myself: I installed TDM afresh without any issue yesterday. The problem simply does not happen on my machine. But you have somewhat unique conditions under which the problem happens on your side. So I'd like to ask you for help with testing. Here is the plan:

1. Put tdm_installer into an empty directory and run it without any checkboxes set (just as you did initially when it hung).
2. If it hangs (does not show any progress for ~10 minutes, or simply does not finish in a few hours), then take a memory dump as I described above.
3. Regardless of whether it succeeds or hangs, please find the logfile of the run and attach it here.