[Bug] On Launch, Distorted First Frame When AA

Daft Mugi replied to Daft Mugi's topic in TDM Tech Support

@stgatilov Even better news! The following commit fixed this issue regardless of the r_tonemapOnlyGame3d setting:

r10930 | stgatilov | 2025-01-25 | 7 lines

Clear background during main menu.
This fixes the issue e.g. with AT1: Lucy and tonemap disabled.
The briefing there does not use any backgrounds, and no-clear policy results in HOM-like effects.
Originally reported here: https://forums.thedarkmod.com/index.php?/topic/22635-beta-testing-213/#findComment-499723

So, what are you working on right now?

MirceaKitsune replied to Springheel's topic in TDM Editors Guild

That looks amazing! New AI models are something I've been hoping we could see for some time: we can definitely use more steampunk robots, fantasy creatures, and why not some bigger and scarier undead like Doom?! I hope those models can be perfected and included in a future release.

I don't know. Maybe by checking our source code?
Reading our TDM wiki: https://wiki.thedarkmod.com/index.php?title=AI_behaviour_depending_on_player_actions
D1 enemy behaviour code: https://github.com/id-Software/DOOM/blob/a77dfb96cb91780ca334d0d4cfd86957558007e0/linuxdoom-1.10/p_enemy.c
Or watching a video about player chases? Like:

-

Yes, both DXT3 and DXT5 support alpha transparency. I made an error there. If you speak about fullscreen cutscene in the middle of the mission, then it is OK. We don't have some built-in support for this, but this can be done with full-screen overlay. I'm talking about Doom 3 style videos on computer screen. This is not going to work well with FFmpeg videos, they are only designed for FMV case. It is used for water in NHAT campaign, I think at the beginning of the second mission.

-

Oh, I'm maybe confused - if I understand correctly, __declspec is MSVC specific, so I was unfamiliar -- I thought you were talking about syntax for function declarations in the DLL's Doom script header, rather than the actual DLL C++. On the DLL side, I had just expected they be exported with an `extern "C"` block, so any compiled language (or non-MSVC C++ compiler) can produce a valid DLL (since extern is ANSI standard), and it is up to the addon SDK or addon-writer manually to make the exposed functions TDM-compatible ("event-like" using ReturnFloat, etc.). E.g. my Rust SDK parses the "normal" Rust functions at compile-time with macros and twists them to be TDM-event-like, but simple C/C++ macros could do this for C++ addons too (or do it in TDM engine, but that adds code). To avoid discovery logic, DLL_GetProcAddress expects to find tdm_initialize(returnCallbacks_t cbs) in the DLL. This gets called by TDM (Library.cpp), passing a struct of any permitted TDM C++ callbacks, and everything is gtg. It means exactly one addon is possible per DLL, but that seems like a reasonable constraint. Going the other way, the DLL-specific function declarations are read by TDM from the Doom script header (a file starting #library and having only declarations), and loaded by name with DLL_GetProcAddress. That (mostly) works great with idClass, but instead of #library, we could call a const char* tdm_get_header() in the DLL to get autogenerated declarations. I am not 100% sure I understood this part, so apologies if I get this wrong - I think the benefit of DLLs is that they make chunky features optional and skip recompiling or changing the original TDM C++ core every time someone invents a compiled addon. Also, you wouldn't want (I think) a DLL to be able to override an existing internal event definition. So there isn't a very useful way that TDM C++ could take advantage of the DLL directly (well, maybe). 
However, as you say, Doom scripts are already a system for defining dynamic logic, with lots of checks and guardrails, so making DLL functions "Just An Event Call" that Doom scripts can use means (a) all the script-compile-time checking adds error-handling and stability, and (b) fewer TDM changes are required. Admittedly, yes, to make this useful means exposing a little more from TDM to scripts (mostly) as sys events - CreateNewDeclFromMemory for example: sys.createNewDeclFromMemory("subtitles", soundName, subLength, subBuffer); Right now, that's not useful as scripts can't have strings of >120chars, but if you can generate subBuffer with a DLL as a memory location, that changes everything. So even exposing just one sys event gives lots of flexibility - dynamic sound, subtitles, etc. etc. - and then there are existing sys events that the scripts can use to manipulate the new decl. No need to expose them to the DLL directly. Basically, it means the DLL only does what it needs to (probably something quite generic, like text-to-speech or generating materials or something), and the maximum possible logic is left to Doom scripts as it's stable, safe, dynamically-definable, highly-customizable, has plenty of sys events to call, has access to the entities, etc., etc. Yes, I think this is what I've got - I'd just added the #library directive to distinguish the "public header" Doom script that TDM treats as a definition for the DLL, vs distributed "Doom script headers" which script writers #include within their pk4 to tell the script compiler that script X believes a separate DLL will (already/eventually) be loaded with these function signatures, and to error/pass accordingly. Although I think no C++ headers are needed, as it would be read at runtime, and TDM can already parse the Doom script headers. 
I'm not familiar with import libs, but from a quick read, this generates a DLL "manifest" for MSVC, but I think it isn't strictly necessary, assuming there is a TDM-readable header, as TDM provides a cross-platform Sys_DLL_GetProcAddress wrapper that takes string names for loading functions? But if it is, then yes. Yep - bearing in mind that a DLL addon might be loaded after a pk4 Doom script pk4 that uses it, the need for the libraryfunction type is: firstly, to make sure the compiler remembers that this is func Y from addon X after it is declared, until it gets called in a script (this info is stored as a pair: an int representing the library, and an int representing the function) - and then remembers again from compiler to interpreter - i.e. emitting OP_LIBCALL with a libraryfunction argument. secondly, it is to reassure the compiler that in this specific case it is OK to have something that looks like a function but has no definition in that pk4 (as opposed to declaration, which must be present/#included), and, thirdly, to make sure that the DLL addon function call is looked up or registered in the right DLL addon, so any missing/incompatible definitions can be flagged when game_local finishes loading all the pk4s (i.e. when all the definitions are known and the matching call is definitely missing) Right now, each DLL gets a fresh "Library" instance that holds its callbacks, any debug information, and a DLL-specific event function table. It is an instance of idClass, so it inherits all the event logic, which is handy. Having an instance of Library per-DLL seems to me to be neater, as it is easier to debug/backtrace a buggy function to the exact DLL, and to keep its events grouped/ring-fenced from other DLLs. The interpreter needs to know which Library instance to pass an event call to, so the libraryNumber (an runtime index representing the DLL) has to be in the OP_LIBCALL opcode. 
As such, while (for example) a virtualfunction in Doom script is a single index in an event list, a libraryfunction is a pair of the library index and the index of the function in the Library's event list. --- But, suppose we try to remove libraryfunction as a type. The first issue (above) could be avoided by either: (A) adding DLL events directly to the main sys event list, but since event lists are static (C++ macro generated) then different "core" code would have to change; (B) passing the libraryNumber via a second parameter to OP_LIBCALL and (ab)using virtualfunction (or even int) for the function number, or, (C) making the Library a Doom script object, not namespace, so that the method call can be handled using a virtualfunction The second issue and third issue could be avoided by doing two compiler passes - a primary pass that loads all DLL addons from pk4s, so that the compiler treats any loaded DLL functions as happily-defined normal functions in every pk4 during a secondary pass for compiling Doom scripts, as it is guaranteed to have any valid DLL function definitions before it starts. Sorry, that got quite confusing to describe, but I wasn't sure how to improve on my explanation However, in conclusion, I'm not sure that those other approaches are less invasive than just having a new type, but open to thoughts and other options! Yep! That's nice, sounds practical - maybe even this could eventually evolve to semver, to allow some range flexibility?
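The "pair of indices" idea described above can be illustrated with a small C++ sketch. It is only a model of the concept: `Library`, `LibraryFunction`, `Interpreter` and `libCall` are hypothetical stand-ins rather than the classes in the TDM fork, and every event is simplified to a `float(float, float)` signature.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <stdexcept>
#include <string>
#include <utility>
#include <vector>

// All names here are hypothetical stand-ins, not TDM's real classes.
using LibFn = std::function<float(float, float)>;

// One instance per loaded DLL, holding that DLL's own event table.
struct Library {
    std::string name;
    std::vector<LibFn> events;  // position in this vector = function number
};

// The pair carried by a libraryfunction value (and by the libcall operand).
struct LibraryFunction {
    std::size_t libraryNumber;
    std::size_t functionNumber;
};

class Interpreter {
public:
    // Registers a library and returns its runtime index.
    std::size_t addLibrary(Library lib) {
        libs_.push_back(std::move(lib));
        return libs_.size() - 1;
    }

    // Resolves the pair and forwards the call to the owning library.
    float libCall(LibraryFunction f, float a, float b) const {
        if (f.libraryNumber >= libs_.size()) {
            throw std::out_of_range("unknown library");
        }
        const Library& lib = libs_[f.libraryNumber];
        if (f.functionNumber >= lib.events.size()) {
            throw std::out_of_range("unknown function in " + lib.name);
        }
        return lib.events[f.functionNumber](a, b);
    }

private:
    std::vector<Library> libs_;
};

// Usage: two libraries can both own a "function 0" without clashing.
inline void libCallExample() {
    Interpreter vm;
    Library math;
    math.name = "mod_math";
    math.events.push_back([](float a, float b) { return a + b; });
    Library scale;
    scale.name = "mod_scale";
    scale.events.push_back([](float a, float b) { return a * b; });

    const std::size_t m = vm.addLibrary(math);
    const std::size_t s = vm.addLibrary(scale);
    assert(vm.libCall(LibraryFunction{m, 0}, 2.0f, 3.0f) == 5.0f);
    assert(vm.libCall(LibraryFunction{s, 0}, 2.0f, 3.0f) == 6.0f);
}
```

Because the library index travels with the call, a failing lookup can name the DLL that is missing the function, which is the debugging benefit the post mentions.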

- 25 replies

-

Yes, I guess it makes sense to use addon name as namespace on function calls. But as for function declarations --- a C macro can be used to avoid copy/pasting, just like everyone does for __declspec(dllexport). This will probably keep compiler changes minimal? As far as I see, the only reason you use doom scripts is that they allow to define interfaces dynamically. Rather limited interfaces, but enough for simple cases. If you wanted to implement a specific plugin, you would just write C++ header and compile TDM engine against it. But you don't know what exactly you want to implement, so you write interface dynamically in doom script header. And of course it is easier to call functions from such interface from doom script, although you can in principle call the functions directly from C++. However, the problem you'll face quickly when you try to make useful addons is that you are pretty limited on what you can customize without access to more stuff that is available to doom script interface. Which again raises a question: do we really need this? Will we have enough useful addons implemented? The Doom script header which contains the interface of the addon should definitely be provided together with the addon. Whether they are in the same PK4 and where they should be physically located is not that important. Doom script header plays the same role for an addon as public headers do for the DLL. If you want to distribute a DLL, you distribute them along with headers (and import libs on Windows), and users are expected to use them. I'm not sure I understand what you are talking about. There is doom script header which provides the interface. The compiler in TDM verifies that calls from doom scripts pass the arguments according to this signature. The implementation of addon should accept the parameters according to the signature in header as well. If addon creator is not sure in himself, he can implement checks on his side. 
The only issue which may happen is that the addon was built against a different version of the interface. But the same problem exists in the C/C++ world: at runtime you might accidentally get a DLL which does not match the header/import libs you used when you built the program. And there is a rather standard conventional solution for this. Put "#define MYPLUGIN_VERSION 3" in the doom script header, and add an exported function "int getVersion()" there as well. Then also add a function "void initMyPlugin()" in the doom script header, which calls getVersion, checks that it returns a number equal to the macro, and stops with an error if that's not true. This way you can detect a version mismatch between the script header and the built addon if you really want to avoid crashes, with no extra systems/changes required.
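The version handshake described above lives in a doom script header in the post; the sketch below mirrors the same logic in C++ purely for illustration. `MYPLUGIN_VERSION`, `getVersion` and `initMyPlugin` follow the names in the post, while `GetVersionFn` and `versionMatches` are hypothetical helpers, and the function pointer stands in for a symbol that would really be looked up in the loaded DLL.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>

// Version the header was written against (the "#define" from the post).
constexpr int MYPLUGIN_VERSION = 3;

// Addon side: exported with C linkage so the name is not mangled.
extern "C" int getVersion() { return MYPLUGIN_VERSION; }

// Host side: the shape of the exported version query.
using GetVersionFn = int (*)();

// Stops with an error if the addon reports a different interface version.
void initMyPlugin(GetVersionFn query, int expectedVersion) {
    if (query == nullptr) {
        throw std::runtime_error("getVersion not found in addon");
    }
    const int actual = query();
    if (actual != expectedVersion) {
        throw std::runtime_error("addon version " + std::to_string(actual) +
                                 " does not match header version " +
                                 std::to_string(expectedVersion));
    }
}

// Hypothetical convenience wrapper: true when the handshake succeeds.
bool versionMatches(GetVersionFn query, int expectedVersion) {
    try {
        initMyPlugin(query, expectedVersion);
        return true;
    } catch (const std::runtime_error&) {
        return false;
    }
}

// Usage: the addon built above matches its own header version.
inline void versionExample() {
    assert(versionMatches(&getVersion, MYPLUGIN_VERSION));
}
```

A single integer is the simplest form of the check; a range or semver comparison could replace the equality test without changing the structure.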

-

No, Rust would not change much. In this specific case, the game would still crash. The only difference is that it would crash immediately on the out-of-bounds access, while in C++ there is a chance it will not crash immediately but drag on for a bit longer, or even not crash at all. Rust promises no undefined behavior, but does not protect you against crashes, memory leaks, and logical errors. It is a good idea when your software is attacked by hackers. TDM is full of insecure stuff so there is no reason to bother.

I think a wasm interpreter runs in a sandbox, like any embedded language. So if you want to do networking, threads, and other stuff that invokes syscalls, you won't be able to do it inside such a sandbox by default. Maybe something extra can be enabled, or you can always manually provide access to any functions inside the sandbox. But generally speaking, this will not work as a way to provide arbitrary native extensions.

Speaking of addons, I think the minimalistic approach would be to allow doom scripts to declare and call functions from DLLs. Something like "plugin(myaddon) float add(float a, float b);". Then dynamically load myaddon.dll/so and invoke the exported symbol with the name "add", and let it handle the arguments using a bit of C++ code that supports ordinary script events. This is what e.g. C# supports: very simple to understand and implement. No need for namespaces and other additions to the scripting engine.

-

I can say for sure that this is a marvelous technical achievement A geek inside me cries about how cool it is But I don't see much point in having Rust scripts in TDM, and here are some reasons for this. Note: my experience in Rust is just a few days writing basic data structures + some reading. 1) Generally speaking, high-performance scripts are not needed. Some time ago there was an idea that we can probably JIT-compile doom scripts. While I would happily work on it just because it sounds so cool, the serious response was "Let's discuss it after someone shows doom scripts which are too slow". And the examples never followed. If one wants to write some complicated code, he'd better do it in the engine. 2) If we wanted to have native scripts, it should better be C/C++ and not Rust. And the reason is of course: staying in the same ecosystem as much as possible. It seems to me that fast builds and debugging are very important to gamedev, and that's where Visual Studio really shines (while it is definitely an awful Windows-specific monster in many other regards). I bet C/C++ modules are much easier to hook into the same debugger than Rust. And I've heard rumors that scripts in C++ is what e.g. Doom 2016 does. The engine will not go anywhere, and it's in C++, so adding C++ modules will not increase knowledge requirements, while adding Rust modules will. Rust even looks completely different from C++, while e.g. Doom script looks like C++. And writing code in Rust is often much harder. And another build system + package manager (yeah, Rust ones are certainly much simpler and better, but it is still adding more, since C++ will remain). Everyone knows that C++ today is very complicated... but luckily Doom 3 engine is written in not-very-modern C++. As the result, we have at least several people who does not seem to be C++ programmers but still managed to commit hefty pieces of C++ code to the engine. Doing that in Rust would certainly be harder. 
3) If we simply need safe scripts in a powerful language, it is better to compile C++ to wasm and run it in a wasm interpreter. Seriously, today WebAssembly allows us to compile any C++ using clang into bytecode, which can be interpreted with a pretty minimalistic interpreter! And this approach is perfectly safe, unlike running native C++ or Rust. And if we are eager to do it faster, we can find an interpreter with a JIT compiler as well. Perhaps we can invent some automatic translation of the script events interface into wasm, and it will run pretty well... maybe even some remote debugger will work (at least Lua debuggers do work with embedded Lua, so I expect some wasm interpreter can do the same).

However, after looking through the code, it seems to me that this is not about scripts in Rust, it is about addons in Rust. So it is supposed to do something like what gamex86.dll did in the original Doom 3 but on a more compact and isolated scale. You can write a DLL module which will be loaded dynamically and you can then call its functions from Doom script. Is it correct? Because if this is true, then it is most likely not something we can support in terms of backwards compatibility. It seems that it allows hooks into most C++ virtual functions, which are definitely not something we can promise will not break.

-

Hi folks, and thanks so much to the devs & mappers for such a great game. After playing a bunch over Christmas week after many years gap, I got curious about how it all went together, and decided to learn by picking a challenge - specifically, when I looked at scripting, I wondered how hard it would be to add library calls, for functionality that would never be in core, in a not-completely-hacky-way. Attached is an example of a few rough scripts - one which runs a pluggable webserver, one which logs anything you pick up to a webpage, one which does text-to-speech and has a Phi2 LLM chatbot ("Borland, the angry archery instructor"). The last is gimmicky, and takes 20-90s to generate responses on my i7 CPU while TDM runs, but if you really wanted something like this, you could host it and just do API calls from the process. The Piper text-to-speech is much more potentially useful IMO. Thanks to snatcher whose Forward Lantern and Smart Objects mods helped me pull example scripts together. I had a few other ideas in mind, like custom AI path-finding algorithms that could not be fitted into scripts, math/data algorithms, statistical models, or video generation/processing, etc. but really interested if anyone has ideas for use-cases. TL;DR: the upshot was a proof-of-concept, where PK4s can load new DLLs at runtime, scripts can call them within and across PK4 using "header files", and TDM scripting was patched with some syntax to support discovery and making matching calls, with proper script-compile-time checking. Why? Mostly curiosity, but also because I wanted to see what would happen if scripts could use text-to-speech and dynamically-defined sound shaders. I also could see that simply hard-coding it into a fork would not be very constructive or enlightening, so tried to pick a paradigm that fits (mostly) with what is there. 
In short, I added a Library idClass (that definitely needs work) that will instantiate a child Library for each PK4-defined external lib, each holding an eventCallbacks function table of callbacks defined in the .so file. This almost follows the idClass::ProcessEventArgsPtr flow normally. As such, the so/DLL extensions mostly behave as sys event calls in scripting. Critically, while I have tried to limit function reference jumps and var copies to almost the same count as the comparable sys event calls, this is not intended for performance critical code - more things like text-to-speech that use third-party libraries and are slow enough to need their own (OS) thread. Why Rust? While I have coded for many years, I am not a gamedev or modder, so I am learning as I go on the subject in general - my assumption was that this is not already a supported approach due to stability and security. It seems clear that you could mod TDM in C++ by loading a DLL alongside and reaching into the vtable, and pulling strings, or do something like https://github.com/dhewm/dhewm3-sdk/ . However, while you can certainly kill a game with a script, it seems harder to compile something that will do bad things with pointers or accidentally shove a gigabyte of data into a string, corrupt disks, run bitcoin miners, etc. and if you want to do this in a modular way to load a bunch of such mods then that doesn't seem so great. 
So, I thought "what provides a lot of flexibility, but some protection against subtle memory bugs", and decided that a very basic Rust SDK would make it easy to define a library extension as something like:

    #[therustymod_lib(daemon=true)]
    mod mod_web_browser {
        use crate::http::launch;

        async fn __run() {
            print!("Launching rocket...\n");
            launch().await
        }

        fn init_mod_web_browser() -> bool {
            log::add_to_log("init".to_string(), MODULE_NAME.to_string()).is_ok()
        }

        fn register_module(name: *const c_char, author: *const c_char, tags: *const c_char, link: *const c_char, description: *const c_char) -> c_int {
            ...

and then Rust macros can handle mapping return types to ReturnFloat(...) calls, etc. at compile-time rather than having to add layers of function call indirection. Ironically, I did not take it as far as building in the unsafe wrapping/unwrapping of C/C++ types via the macro, so the addon writer then has to write unsafe calls to convert *const c_char to a string and vice versa. However, once that's done, the events can then call out to methods on a singleton and do actual work in safe Rust. While these functions correspond to dynamically-generated TDM events, I do not let the idClass get explicitly leaked to Rust to avoid overexposing the C++ side, so they are class methods in the vtable only to fool the compiler and not break Callback.cpp.

For the examples in Rust, I was moving fast to do a PoC, so they are not idiomatic Rust and there is little error handling, but like a script, when it fails, it fails explicitly, rather than (normally) in subtle user-defined C++ buffer overflow ways. Having an always-running async executor (tokio) lets actual computation get shipped off fast to a real system thread, and the TDM event calls return immediately, with the caller able to poll for results by calling a second Rust TDM event from an idThread.
As an example of a (synchronous) Rust call in a script:

    extern mod_web_browser {
        void init_mod_web_browser();
        boolean do_log_to_web_browser(int module_num, string log_line);
        int register_module(string name, string author, string tags, string link, string description);
        void register_page(int module_num, bytes page);
        void update_status(int module_num, string status_data);
    }

    void mod_grab_log_init() {
        boolean grabbed_check = false;
        entity grabbed_entity = $null_entity;
        float web_module_id = mod_web_browser::register_module(
            "mod_grab_log",
            "philtweir based on snatcher's work",
            "Event,Grab",
            "https://github.com/philtweir/therustymod/",
            "Logs to web every time the player grabs something."
        );

On the verifiability point, both as there are transpiled TDM headers and to mandate source code checkability, the SDK is AGPL.

What state is it in? The code goes from early-stage but kinda (hopefully) logical (what's in my TDM fork), through to basic (what's in the SDK), through to rough (what's in the first couple of examples), through to hacky (what's in the fun stretch-goal example, with an AI chatbot talking on a dynamically-loaded sound shader). (see below) The important bit is the first, the TDM approach, but I did not see much point in refining it too far without feedback or a proper demonstration of what this could enable. Note that the TDM approach does not assume Rust, I wanted that as a baseline neutral thing - it passes out a short set of allowed callbacks according to a .h file, so any language that can produce dynamically-linkable objects should be able to hook in.

What functionality would be essential but is missing?

* support for anything other than Linux x86 (but I use TDM's dlsym wrappers so it should not be a huge issue, if the type sizes, etc. match up)
* ability to conditionally call an external library function (the dependencies can be loaded out of order and used from any script, but now every referenced callback needs to be in place with matching signatures by the time the main load sequence finishes or it will complain)
* packaging a .so+DLL into the PK4, with verification of source and checksum
* tidying up the Rust SDK to be less brittle and (optionally) transparently manage pre-Rustified input/output types
* some way of semantic-versioning the headers and (easily) maintaining backwards compatibility in the external libraries
* right now, a dedicated .script file has to be written to define the interface for each .so/DLL - this could be dynamic via an autogenerated SDK callback to avoid mistakes
* maintaining any non-disposable state in the library seems like an inherently bad idea, but perhaps Rust-side Save/Restore hooks
* any way to pass entities from a script, although I'm skeptical that this is desirable at all

One of the most obvious architectural issues is that I added a bytes type (for uncopied char* pointers) in the scripting to be useful - not for the script to interact with directly but so that, for instance, a lib can pass back a Decl definition that can be held in a variable until the script calls a subsequent (sys) event call to parse it straight from memory. That breaks a bunch of assumptions about event arguments, I think, and likely save/restore. Keen for suggestions - making indexed entries in a global event arg pointer lookup table, say, that the script can safely pass about? Adding CreateNewDeclFromMemory to the exposed ABI instead?

While I know that there is no network play at the moment, I also saw somebody had experimented and did not want to make that harder, so I am also conscious that this would need some thought.
One maybe interesting idea for a two-player stealth mode could be a player-capturable companion to take across the map, like a capture-the-AI-flag, and pluggable libs might help with adding statistical models for logic and behaviour more easily than scripts, so I can see ways dynamic libraries and multiplayer would be complementary if the technical friction could be resolved. Why am I telling anybody? I know this would not remotely be mergeable, and everyone has bigger priorities, but I did wonder if the general direction was sensible. Then I thought, "hey, maybe I can get feedback from the core team if this concept is even desirable and, if so, see how long that journey would be". And here I am. [EDITED: for some reason I said "speech-to-text" instead of "text-to-speech" everywhere the first time, although tbh I thought both would be interesting]
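One of the suggestions floated in the post, an indexed lookup table so that scripts pass around plain handles instead of raw char* pointers, might look roughly like the C++ sketch below. `BufferRegistry` and all of its members are hypothetical and not part of TDM or the fork; the sketch also copies the data into the table, which differs from the "uncopied" bytes type the post describes.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <unordered_map>
#include <utility>

// Hypothetical registry: scripts only ever see the integer handle.
class BufferRegistry {
public:
    using Handle = std::uint32_t;
    static constexpr Handle kInvalid = 0;

    // Takes ownership of the data and returns a handle that is never kInvalid.
    Handle store(std::string data) {
        const Handle h = next_++;
        buffers_.emplace(h, std::move(data));
        return h;
    }

    // Returns nullptr for unknown or already released handles.
    const std::string* find(Handle h) const {
        const auto it = buffers_.find(h);
        return it == buffers_.end() ? nullptr : &it->second;
    }

    // Frees the buffer; returns false if the handle was not live.
    bool release(Handle h) { return buffers_.erase(h) > 0; }

    std::size_t size() const { return buffers_.size(); }

private:
    Handle next_ = 1;  // 0 is reserved as the invalid handle
    std::unordered_map<Handle, std::string> buffers_;
};

// Usage: a lib stores generated text, a later event resolves and releases it.
inline void bufferExample() {
    BufferRegistry registry;
    const BufferRegistry::Handle h = registry.store("generated decl text");
    const std::string* text = registry.find(h);
    assert(text != nullptr && *text == "generated decl text");
    assert(registry.release(h));
    assert(registry.find(h) == nullptr);  // stale handles resolve to nothing
}
```

A stale or forged handle resolves to nullptr instead of dereferencing freed memory, and an integer handle is easier to account for in save/restore than a pointer.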

-

[2.13] Interaction groups in materials: new behavior

stgatilov replied to stgatilov's topic in TDM Editors Guild

Moved this topic from the development forums, since it covers a potentially important behavior change in 2.13. Luckily, its importance is offset by the rarity of such complicated materials. I hope that this change has not broken existing materials. And even if it has broken any, we will be able to fix them manually...

Tearing and stuttering in video while playing TDM

stgatilov replied to Cary James's topic in TDM Tech Support

In 2.12, Uncapped FPS was off by default, as it was historically in Doom 3. And this old mode... let's say it is known for causing micro-stutters. Although the stutters in your videos don't look "micro"; it's more like multiple frames are missed. The latest build has Uncapped FPS on + Vsync on by default (and Vsync actually works on Linux), which is the most butter-smooth mode we have.

And for some pointers, read the following topic: https://forums.thedarkmod.com/index.php?/topic/22533-tdm-for-diii4a-support-topic/

-

Just need to say this: this was not true at all for me. When I modeled for Doom 3, at least, all my lwo models worked fine, including smoothing. Though I use Modo 601, which was the exact modeling tool that Seneca, a model artist from id Software, used, and I also used his custom Doom 3 exporter plugin for Modo that cleans up and prepares .lwo files for Doom 3 prior to exporting.

This is what I do in Modo 601 to export clean lwo files; perhaps it helps those using Blender as well.

1 - put the model origin at world 0,0 and move the model geometry if necessary so its origin is at the place you want, like the model base.
2 - (optional) clean the geometry using some automatic system, if available, so your model doesn't export with bad data (like single vertices floating disconnected).
3 - bake all model transforms, so rotation, translation and scale are all put at clean defaults (that will respect any prior scaling and rotation you may have done)
4 - (in Modo smoothing works this way) go to the material section and if you want your model to be very smooth (like a pipe for example) set material smoothing to 180%; you can set smoothing per material. If you want more smoothing control, you can literally cut and paste the faces/quads and you will create smoothing "groups" or islands that way.
5 - use or select a single model layer and export that (the engine only cares about a single lwo layer); if you want a shadow mesh and collision mesh as well, put them all on the same layer as the main model.
6 - never forget to triangulate the model before export (Seneca's plugin did that for me automatically)

I did this and all my models exported fine and worked well ingame. Though .lwo was invented by LightWave and Modo was developed by former LightWave engineers/programmers, so perhaps their lwo exporter is/was well optimized/done compared to other 3D tools.

-

Here is what I know:

static models:
- LWO: more or less the default. Small uncompressed size since it is binary. The loading code is horrible: it can be slow and can break your model (smoothing groups might be a pain).
- ASE: larger uncompressed size since it is text (compressed size is probably OK?). The loading code is as awful as for LWO.
- OBJ: the only format added specifically for TDM, so not very widespread. Uncompressed size is large because it is text (compressed size is probably OK?). Loading is very fast and does not "fix"/break your model: you'll get exactly the topology & smoothing you exported.

ragdolls:
The only format is the .af text format, which is handmade for Doom 3. No changes here since Doom 3. It is notoriously hard to create/edit. The only tool is the Windows-only builtin command editAFs, and this is the only in-game editor which has no outside alternatives. We wanted to port it to either DarkRadiant or at least a better GUI framework, but it did not happen.

animation:
- MD5: md5mesh is a Doom 3 custom text format which represents a mesh attached to a skeleton (joints, weights, etc.); md5anim is a custom text format that represents an animation of a skeleton. I'm pretty sure Blender has some working exporter for these.
- MD3: This is Doom 3 custom text format which represents linear vertex morphing. It is rarely used, but it is a perfect fit in case the animation is clearly not "skeletal" (e.g. animated grass or water). I suppose some converters can be found?... Ask @Arcturus, he used it.

Also there are some hardcoded pieces of code which transform a mesh into something else, and which are enabled via the "deform" keyword in a material. The best-known example is particles. All of this is very specific and uses custom formats.
There is also an MA loader (Maya ASCII format?), but it is not used and I have no idea how functional it is. Also there is the FLT model, but no idea what it is.

video:
The mainstream idea is to use (mp4 + h264 + aac) for FMV. There is also legacy support for ROQ, but I guess you should not use it today. I think all the other formats are disabled in the FFmpeg build that we use.
There is no common understanding about in-game videos, I'm not even sure it is a useful case. One specific case is animated textures (e.g. water), and they are usually implemented as a pack of copy/pasted if-s in the material file, which point to different frame images. Something like "Motion JPEG/TGA/PNG/DDS". Maybe one day we will make this approach easier to use...

textures and images:
- TGA: The simplest uncompressed format, basically the default in case compression is not used.
- PNG: Lossless compression, but pretty slow to load. Should be OK for some GUI images, but probably not a good idea to e.g. write all normal maps as PNGs: I imagine loading times will increase noticeably if we do so.
- JPG: Lossy format, but pretty small and rather fast to load. I think it is usually used for screenshots and maybe GUI images, otherwise not popular.
- DDS: This is a container which can in principle store both compressed and uncompressed data, but I think we use it only for precompressed textures. TDM supports the DXT1, DXT3, DXT5 and RGTC compressed formats (each of these formats has a bunch of aliases). If you use compressed DDS, then you can be sure it will be displayed as is, as no further processing is applied to it. Sometimes it is good, sometimes it is bad. The engine has a fast compressor/decompressor for all texture compression formats, so if you supply TGAs and they are compressed on load, you only lose in mission size.

Images inside guis (briefing, readables) use the same material loading system, so they should support all the same image formats.

sound files:
- OGG: Relatively widespread open format with lossy compression. I guess it is used for everything by default, which seems OK to me. OGG files are loaded on level start, but decompressed on the fly.
- WAV: Simple uncompressed sound file. Obviously larger than OGG, not sure why it is used sometimes (maybe fewer compression artifacts?). The only thing I know is that the "shakes" feature of materials specifically asks for WAV.
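Since a DDS file is a container, it can be handy to check what a given file actually holds before shipping it. The following is only a minimal sketch based on the public DDS header layout, not on TDM's loader: the function name `describe_dds` is made up for illustration, and the RGTC FourCC aliases in the table are an assumption about what exporters commonly write, not a list of what TDM accepts.

```python
import struct

# FourCC codes mapped to the compressed formats named in the post.
# The RGTC aliases are an assumption about common exporter output.
FOURCC_NAMES = {
    b"DXT1": "DXT1",
    b"DXT3": "DXT3",
    b"DXT5": "DXT5",
    b"ATI1": "RGTC1",
    b"BC4U": "RGTC1",
    b"ATI2": "RGTC2",
    b"BC5U": "RGTC2",
}

DDPF_FOURCC = 0x4  # pixel-format flag: the FourCC field is valid


def describe_dds(data: bytes) -> dict:
    """Return width, height and pixel format read from a DDS header."""
    # 4-byte magic plus the 124-byte DDS_HEADER structure.
    if len(data) < 128 or data[:4] != b"DDS ":
        raise ValueError("not a DDS file")
    # dwHeight and dwWidth sit at file offsets 12 and 16.
    height, width = struct.unpack_from("<II", data, 12)
    # DDS_PIXELFORMAT starts at file offset 76: dwSize, dwFlags, dwFourCC.
    (pf_flags,) = struct.unpack_from("<I", data, 80)
    fourcc = data[84:88]
    if pf_flags & DDPF_FOURCC:
        fmt = FOURCC_NAMES.get(fourcc, "unknown FourCC " + repr(fourcc))
    else:
        fmt = "uncompressed"
    return {"width": width, "height": height, "format": fmt}
```

Typical use would be `describe_dds(open(path, "rb").read(128))`, where `path` is whatever texture you want to inspect.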

-

So, what are you working on right now?

nbohr1more replied to Springheel's topic in TDM Editors Guild

A "stage keyword" converts the image to a normalmap. Material defs can have multiple normalmaps, but the order determines whether they are visible or not. You can also use the addnormals keyword to blend a Doom 3 heightmap with a normal map. Both heightmaps use grayscale images to represent height. -

So, what are you working on right now?

nbohr1more replied to Springheel's topic in TDM Editors Guild

We are currently sticking with 1024x1024 for all tiling textures. For character models or complex assets, it is probably OK to bump up to 4096x4096. Just so there is no confusion, the old Doom 3 "heightmap" is just a grayscale image that gets converted to a normalmap and usually has lower image quality due to only having one channel of color. The new "heightmap" in 2.13 beta is for "Parallax Occlusion Mapping" (POM) and thus should at least have enough resolution to represent the 3D contours of the underlying texture. (Maybe 512x512 min for a 1024x1024 texture?) -
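To illustrate what "a grayscale image that gets converted to a normalmap" means in practice, here is a rough sketch using central differences on a tiling height grid. It is not the engine's actual conversion code; the function name, the `scale` parameter and the sign convention for the Y axis are all assumptions made for the example.

```python
import math


def height_to_normals(height, scale=1.0):
    """Convert rows of grayscale heights (0..1) into rows of unit normals.

    Hypothetical helper: the slope in X and Y is estimated with central
    differences, wrapping at the edges because the texture is assumed to tile.
    """
    h = len(height)
    w = len(height[0])
    normals = []
    for y in range(h):
        row = []
        for x in range(w):
            dx = height[y][(x + 1) % w] - height[y][(x - 1) % w]
            dy = height[(y + 1) % h][x] - height[(y - 1) % h][x]
            # The normal leans away from the uphill direction.
            nx, ny, nz = -dx * scale, -dy * scale, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            row.append((nx / length, ny / length, nz / length))
        normals.append(row)
    return normals
```

A flat heightmap yields normals pointing straight up. Because every normal is derived from a single channel of neighbouring height values, coarse or heavily quantized grayscale input shows up directly as banding in the result.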

Trying to load image X from frontend, deferring...

stgatilov replied to snatcher's topic in TDM Tech Support

Yes, it seems that preloading is how the Doom 3 engine was supposed to work. I guess it depends on how much stuff you load at once and how big it is. If it is a small TGA file already in the OS disk cache, then it might get loaded in <1 ms and you won't notice. But if you load some large model and many images, then the total latency can be noticeable. But there is an even worse scenario, and I guess it was typical at the time of Doom 3. Imagine that a player has TDM on an HDD and cold-boots the game. The pk4 files are not in the OS disk cache yet, so the dynamic image load triggers a request to the HDD. And the game waits until the HDD responds, which takes about 10 ms today. -
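To put those latencies in perspective, here is a back-of-the-envelope sketch that expresses a blocking load as a fraction of one frame. The 60 FPS target and the function name are assumptions for illustration; the post itself only gives the <1 ms and roughly 10 ms figures.

```python
def stalled_frames(load_ms, fps=60.0):
    """Return how many frame budgets a blocking load of load_ms consumes."""
    frame_budget_ms = 1000.0 / fps  # about 16.7 ms at the assumed 60 FPS
    return load_ms / frame_budget_ms
```

Under that assumption, a single 10 ms HDD request eats more than half of a frame's budget on its own, so a handful of deferred loads in the same frame is enough to produce a visible hitch.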

Those hand animations are very nice to see, especially the compass animation. Can you make an animation for holding / showing the map, like in Sea of Thieves? (Also asked for in https://forums.thedarkmod.com/index.php?/topic/21038-lets-talk-about-minimap-support/#findComment-463678)

-

Okidoki, I finally bit the bullet & went with a Recoil 17 from PC Specialist. The specs I went for are:
- Chassis & Display: Recoil Series: 17" Matte QHD+ 240Hz sRGB 100% LED Widescreen (2560x1600)
- Processor (CPU): Intel® Core™ i9 24 Core Processor 14900HX (5.8GHz Turbo)
- Memory (RAM): 32GB PCS PRO SODIMM DDR5 4800MHz (1 x 32GB)
- Graphics Card: NVIDIA® GeForce® RTX 4080 - 12.0GB GDDR6 Video RAM - DirectX® 12.1
- External DVD/BLU-RAY Drive
- 1TB PCS PCIe M.2 SSD (3500 MB/R, 3200 MB/W)
- Operating System: NO OPERATING SYSTEM REQUIRED

The case seems to be made of metal, not plastic, & there's an optional water cooling unit, which I didn't get.
For the OS I disabled fast boot & secure boot, loaded Zorin 17.2 and used the entire disk, without any installation issues. Zorin is an Ubuntu fork, so Ubuntu & its other forks shouldn't have an issue if anyone else gets one of these.
The only minor issue is that the keyboard backlight isn't recognized by default, but the forums are full of info on sorting that out, not that I'm too bothered.
I've installed TDM & it runs beautifully. I also copied my Thief 1 & 2 installations from my desktop; I had to uncomment "d3d_disp_sw_cc" in cam_ext.cfg to get the gamma processing working, but they run happily too.
The fans switch on when booting & switch off again after a few seconds; the machine isn't stressed enough to turn them on running TDM so far - this is not a challenge btw.
On the whole, I'm extremely pleased. So thanks for all the advice.

-

Mission Administration Terms of Service

stgatilov replied to nbohr1more's topic in TDM Editors Guild

I think we should first decide what we want the TOS for: To protect TDM from legal issues? To protect the TDM team from angry mappers in case of conflicts? To guide mission authors in their work? In my opinion the TOS should only cover legal issues, and wiki articles about making/releasing missions should cover author guidance. The chance of getting malware in a mission only increases after we write this publicly. Better not to even mention it, we are completely unprotected against this case. By the way, isn't it covered by the "illegal" clause? I'm not sure this is worth mentioning, but I guess @demagogue knows better. By the way, which jurisdiction defines what is legal and what is not? Isn't it enough to mention that we will remove a mission from the database if legal issues are discovered? I think this is worth mentioning simply because mappers can easily do it without any malicious intent. We already had cases of problematic assets, so better include a point on license compatibility. It is a good idea to remind every mapper that this is a serious issue. I also recall some rule like "a mission of too low quality might be rejected". In my opinion, it is enough. You will never be able to pinpoint all possible cases why you might consider a mission too bad in terms of quality. And even the specifics mentioned here already raise questions. Having such a rule is already politics. I feel it does not save us from political issues but entangles us in them. If there is a mission which contains something really nasty, it will cause outrage among the community (I believe most of our active forum members are good people). If people are angry, they will tell the mission author all they think about it. And if the author won't change his mind, he will eventually leave the TDM community. Then the mission can be removed from the database, perhaps with a poll about the removal. But it sounds like an exceptional case, and it is hard to predict exceptional cases in advance. 
This is not even terms of service, but a technical detail about submissions. The mission should be accompanied by 800 x 600 screenshots. Or we can make them ourselves if you are OK with it. This is again purely technical, and I'm not even sure why it is needed. Isn't it how TDM works? If the mapper does not override the loading gui file, then the default one is taken from core? Is it even worth mentioning? I think we should discuss mission updates by other people in general. This is worth mentioning so that mappers don't feel deceived. The generic rule is that we don't change missions without the author's consent. But it is unclear how exactly we should try to reach the author if we need his consent. PM on TDM forums? Some email address? However, sometimes I make technical changes to ensure compatibility of missions with new versions of TDM. Especially since the new missions database has made it rather easy to do. Luckily, I'm not a mapper/artist, so I never felt an urge to replace a model/texture or remap something. But still, it is a gray zone. On the other hand, I think the truth is: we can remove a mission from the database without anyone's consent. I hope it has never happened and will not happen, but I think this is the ultimate truth, and mentioning this sad fact might cover a lot of the other points automatically. -

Yeah I'm aware of the use cases - I mainly used it for particles and preventing models from showing up through walls. The point of the thread was to reveal why it was even created, as the thread I linked contains advice from @stgatilov to NOT use it at all: https://forums.thedarkmod.com/index.php?/topic/21822-beta-testing-high-expectations/page/10/#findComment-490707 If that's the advice, an explanation is needed and the Wiki updated.

-

We support the same formats as Doom 3 except we added obj, md3, rgtc dds, ogg, png, and a few ffmpeg codecs. https://modwiki.dhewm3.org/File_formats

-

A Winter's Tale
By: Bikerdude

"One of the few pleasures I have as a man of the thieving profession is to return to my old home town for the holidays and relax in the company of friends and relatives. But a local lord has been going out of his way to make the lives of the locals miserable."

Notes:
- TDM 2.12 or later is REQUIRED to play this mission.
- This is my entry for the speedbuilding jam.
- This FM should play on the vast majority of systems. I have perf tweaked this map with low-end players in mind, and have moved the global fog/moon lights to the 'better' LOD level.
- min recommended spec (as per beta testing): Intel Core i7 (3rd gen), nvidia 1030 4gb (GDDR5), will get you 60fps inside and 45fps outside.
- Various areas will look better with shadow maps enabled (SoftShadows set to medium/high, Shadows Softness set to zero and LOD set to 'better'), at the possible expense of performance depending on your system specs.
- this mission continues the imperial theme, with this being a border town slightly further out from the main imperial lands than Brouften.
- build time roughly 130hrs.

Download Link:
- (v1.4) - https://discord.com/channels/679083115519410186/1310012992867405855/1310022631415746632

v1.4 changes:
I have been tweaking the LOD levels throughout the map -
- Snow fall has been moved to LOD low/normal.
- world fog moved to LOD better.
- fireplace grills to LOD better.
- world moonlight moved to LOD high.
Other tweaks -
- reduced light counts in all the fireplaces.
- reduced the length of the looping menu video background.
- Some new assets
Gameplay:
- added more ways for the player to get around.
- tweaked existing routes to make some easier or more of a challenge.
- and added an additional optional objective.

Credits:
Special thanks go to -
- Nbhor1more, fleshing out the briefing and creation of readables.
- Amadeus, help w/custom objectives, script work, proof reading, mission design & testing.
- Dragofer, for custom scripts and script work for the main objective.
- Baal, for additional tweaking of the main objective and script work.
- Beta testers: Amadeus, Nbhor1more, Mat99, DavyJones, S1lverwolf, Baal & Dragofer.
- Freesound.org, for ambient tracks, further details in sndshd file.

Speed Jam Thread:
- https://www.ttlg.com/forums/showthread.php?t=152747

-

Me too. TDM combat is jankeriffic but manageable when you get the hang of it, and still considerably less trivial than in the original Thief duology. However, I don't play stealth games for combat. If I want to fight things, I'll go play DOOM, which is ironic considering the engine TDM is built on. I wouldn't say I have no use for health potions though. Sometimes I take falling or drowning damage.

-

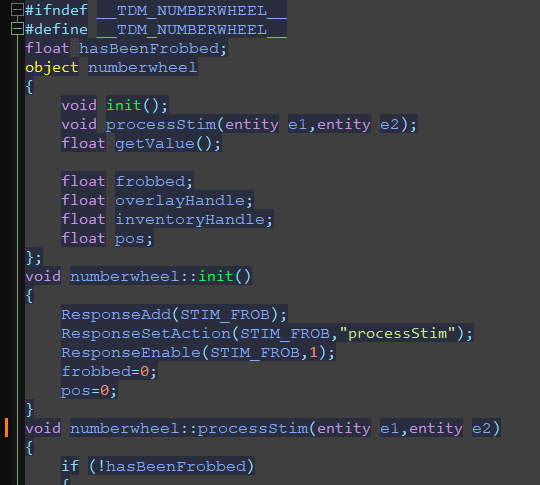

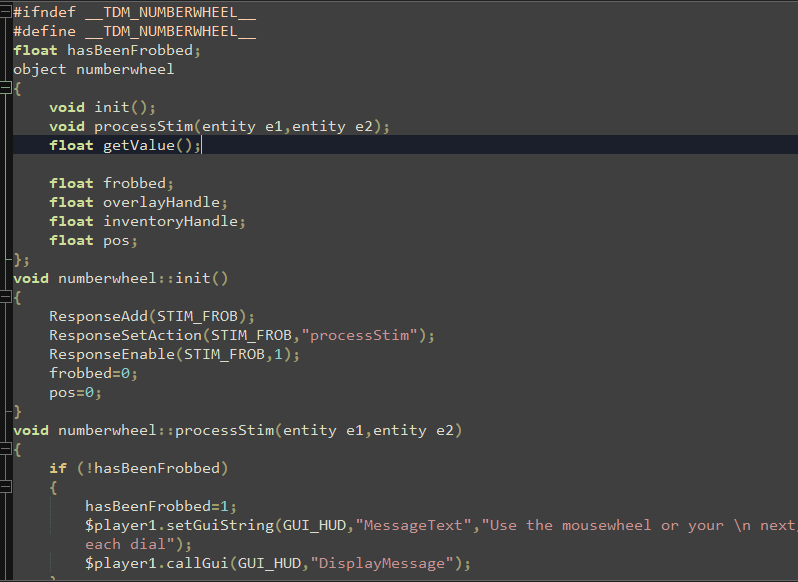

I just wondered if it's useful to use a code spell checker inside a code editor. Scripting syntax is (afaik) similar to C++, so maybe that could help. There are plugins for vscode and notepad++ I think. Also found this Notepad++ doomscript highlighter: https://www.moddb.com/games/doom-iii/downloads/doom3-script-highlighter-notepad Not sure I like the syntax highlighting. In light mode it's worse. Maybe it's supposed to represent a console editor? C++ syntax highlighting: