Showing results for '/tags/forums/model/'.

-

I figured out that I could change the player head, adding a cowboy hat instead of the thief cowl, but the hat bobs down into view when moving. It also covers the view when looking up. I created the head by writing a def file for the hat and the "new" player head:

```
entityDef prop_cowboy_hat_whi
{
	"inherit"                "func_static"
	"editor_usage"           "A cowboy's hat"
	"editor_displayFolder"   "props/wearables/hats"
	"mass"                   "0.6"
	"model"                  "models/darkmod/wearables/headgear/hat_cowboy.ase"
	"skin"                   "wearables/hats/hat_white_plain"
	"remove"                 "0"
	"solid"                  "0"
	"arrowsticking_disabled" "1" // grayman #837
}

entityDef atdm:ai_head_thief_player
{
	"inherit"     "atdm:ai_head_base"
	"model"       "head_08" // bare head
	"skin"        "heads/clean_heads"
	"def_attach1" "prop_cowboy_hat_whi" // attaches to side without attach pos
	"pos_attach1" "velvetcap_1"
	"attach_pos_origin_velvetcap_1" "2 7 0" // y z x ?
	"attach_pos_angles_velvetcap_1" "90 0 -98"
}
```

Is there anything I can do to fix this, or am I just screwed?

-

I just read that @motorsep discovered you can create a brush, select it, and right-click "create light". You then have a light with the radius of the former brush. I read it on Discord and thought it might be useful for some people here on the forums too.
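As a rough illustration of what that conversion amounts to, here is a sketch assuming (not confirmed in the post) that DarkRadiant places the new light at the brush's centre and sets the idTech 4 "light_radius" spawnarg to half the brush's extent on each axis. The helper name light_from_brush is hypothetical, not DarkRadiant API:

```python
def light_from_brush(mins, maxs):
    """Hypothetical helper: derive a point light's origin and light_radius
    from a brush's axis-aligned bounds (assumed DarkRadiant behaviour)."""
    # The centre of the bounding box becomes the light origin.
    origin = [(lo + hi) / 2 for lo, hi in zip(mins, maxs)]
    # idTech 4 point lights store light_radius as a half-extent per axis.
    radius = [(hi - lo) / 2 for lo, hi in zip(mins, maxs)]
    return {
        "origin": " ".join(f"{v:g}" for v in origin),
        "light_radius": " ".join(f"{v:g}" for v in radius),
    }


# A 128 x 64 x 128 brush sitting on the floor.
print(light_from_brush((-64, -32, 0), (64, 32, 128)))
# {'origin': '0 0 64', 'light_radius': '64 32 64'}
```

So a brush sized to the room you want lit gives a light whose box matches it, which is easier than dragging the light's radius handles by hand.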

-

Well, I got it working on a simple test plane, subdivided and vertex-painted in Blender. The shader I had was actually just fine (at least after removing the parm11 stuff). The problem was with the model's .ase file.

-

If you already know this, ignore it, but if you don't: "if" conditions in material stages turn those stages on and off, so you need to make sure you set parm11 to a valid value above zero in a script somewhere. But if removing those didn't solve the problem, then the problem could be the vertex colors themselves. Are you sure the model has the correct vertex color info on it? AFAIK the engine only supports grayscale vertex colors in RGB format, with no alpha. Also, instead of the "blend diffusemap" keyword, try "blend add" (equivalent to "blend gl_one, gl_one"); I'm not sure this matters, but the basic example for vertex colors in this link uses it. I haven't used vertex blending for a very long time, so all of this is rusty in my mind, unfortunately. Also, I'm not sure what you mean by ""lawn_vertex_blend" is the vertex-blended DDS image file exported from Blender and is also referenced in the .ase file", but a material name like yours, "textures/darkmod/map_specific/lawn_vertex_blend", shouldn't be a link to a real texture. In reality it is just a virtual path to a fake folder that DarkRadiant uses for display in the media section of the editor. You can give a material just a single word as its name and it will still show in the Media tab, just not inside a folder but at the root level. It works like this:

```
virtual path/material name { material code }

// or simply:

material name { material code }
```
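To make the "if" behaviour concrete, here is a small Python model (not engine code) of how conditional stages like "if ( parm11 > 0 )" with "rgb 0.40 * parm11" behave: when parm11 stays at 0 those stages are skipped entirely, otherwise they add a brightness scaled by parm11. The stage list loosely mirrors the grass stages in the material quoted in this thread; treating an unset parm11 as 0 is an assumption:

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Stage:
    name: str
    condition: Optional[Callable[[float], bool]]  # None means "always drawn"
    rgb: Callable[[float], float]                 # rgb scale as a function of parm11


# Loosely mirrors the grass half of the lawn_vertex_blend material.
STAGES = [
    Stage("diffusemap", None, lambda p: 1.0),
    Stage("gl_dst_color, gl_one / _white", lambda p: p > 0, lambda p: 0.40 * p),
    Stage("add / grass", lambda p: p > 0, lambda p: 0.15 * p),
]


def active_stages(parm11: float) -> List[Tuple[str, float]]:
    """Return (stage name, rgb scale) for every stage whose condition passes."""
    return [(s.name, s.rgb(parm11)) for s in STAGES
            if s.condition is None or s.condition(parm11)]


print(active_stages(0.0))  # only the unconditional diffusemap stage
print(active_stages(1.0))  # all three stages
```

In other words, leaving those conditional stages in the material is harmless when nothing sets parm11; they only start drawing once a script gives it a positive value.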

-

I'm not having much success vertex-blending a cobblestone path with grass. I've applied it to an .ase model imported from Blender, following this tutorial: https://wiki.thedarkmod.com/index.php?title=DrVertexBlend_(tutorial)#Vertex_Painting_Each_Object In DR, my custom material file does show up in the material editor and it seems to be applied to the model, but incorrectly: it's just a uniform green. In game, the model is grayscale; I think it's being textured with my vertex painting instead of the grass and cobblestone. Here's the .mtr file. Have I overlooked anything?

```
textures/darkmod/map_specific/lawn_vertex_blend
{
	surftype15
	description "grass"
	qer_editorimage textures/darkmod/nature/grass/short_dry_grass_dark_ed.jpg

	{
		blend diffusemap
		map textures/darkmod/nature/grass/short_dry_grass_dark
		vertexColor
	}
	{
		blend bumpmap
		map textures/darkmod/nature/grass/short_dry_grass_local
		vertexColor
	}
	{
		if ( parm11 > 0 )
		blend gl_dst_color, gl_one
		map _white
		rgb 0.40 * parm11
		vertexColor
	}
	{
		if ( parm11 > 0 )
		blend add
		map textures/darkmod/nature/grass/short_dry_grass_dark
		rgb 0.15 * parm11
		vertexColor
	}
	{
		blend diffusemap
		map textures/darkmod/stone/cobblestones/cobblestones02_square_dark
		inverseVertexColor
	}
	{
		blend specularmap
		map textures/darkmod/stone/cobblestones/cobblestones02_square_dark_s
		inverseVertexColor
	}
	{
		blend bumpmap
		map textures/darkmod/stone/cobblestones/cobblestones02_square_dark_local
		inverseVertexColor
	}
	{
		if ( parm11 > 0 )
		blend gl_dst_color, gl_one
		map _white
		rgb 0.40 * parm11
		inverseVertexColor
	}
	{
		if ( parm11 > 0 )
		blend add
		map textures/darkmod/stone/cobblestones/cobblestones02_square_dark
		rgb 0.15 * parm11
		inverseVertexColor
	}
}
```

"lawn_vertex_blend" is the vertex-blended DDS image file exported from Blender and is also referenced in the .ase file.
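For reference, a "vertexColor" stage is modulated by the per-vertex color and an "inverseVertexColor" stage by one minus it, so a grayscale vertex value v gives roughly v parts grass and (1 - v) parts cobblestone. Here is a per-channel Python sketch of that arithmetic, simplified to ignore lighting and the bump/specular stages; the sample texel colors are made up:

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def blend_vertex(grass: RGB, cobble: RGB, v: float) -> RGB:
    """Mix two RGB texels by a grayscale vertex color v in [0, 1]:
    the vertexColor stage is scaled by v, the inverseVertexColor stage
    by (1 - v), and the two diffuse contributions add up."""
    if not 0.0 <= v <= 1.0:
        raise ValueError("vertex color must be in [0, 1]")
    return tuple(g * v + c * (1.0 - v) for g, c in zip(grass, cobble))


grass = (0.2, 0.6, 0.1)   # hypothetical grass texel
cobble = (0.5, 0.5, 0.5)  # hypothetical cobblestone texel
print(blend_vertex(grass, cobble, 1.0))  # white paint: pure grass
print(blend_vertex(grass, cobble, 0.0))  # black paint: pure cobblestone
print(blend_vertex(grass, cobble, 0.5))  # mid-gray: an even mix
```

If the painted values never reach the model (for example because the .ase export lost them), every vertex ends up at the same value and you get a uniform result, which fits the symptoms described here.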

-

I just found this thread on TTLG listing immersive sims: https://www.ttlg.com/forums/showthread.php?t=151176

-

Revisiting the forums recently after a long time away, I wanted to check the unread content. I don't know if I've been doing this wrong since... ever... but on mobile (visiting the unread content page on my smartphone) you have to tap that tiny speech bubble to go to the most recent post in a thread. If you don't tap precisely, you hit the headline and end up at post 1, at the very beginning of the thread. It's terrible on mobile: not only is the speech bubble really small and easy to miss, but the thread headline is just millimeters away from it, so you go right to the first post ever made instead of the most recent ones. Am I doing it wrong? I just want to go through unread content and then jump to the newest post in each topic.

-

@datiswous Ah yeah, sorry, I was quite busy and only visiting Discord. This is my first time here on the forums in months, I think. Thank you for the subtitles. I encourage everyone who is interested in using them to download them from here, as I'm not sure when I'll be able to implement them into the mission myself. Again, thank you for your work.

-

Since they both improve performance and fix other edge cases, it seems enabling them might be better than not. I already did so on my end... I don't mind a few inaccurate edges, even if I wish those didn't happen either; I'm happy for the extra FPS plus the fix for self-shadowing issues I have also noticed, now that I think about it. Actually, I'm curious about something: what if we gave that option 3 modes, so that only front faces, only back faces, or both cast shadows? I remember once reading that in some circumstances you can get better results by computing shadows only from back faces, given they're closer to what's casting them and you don't need shadowing inside an enclosed model.
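For anyone curious what such a 3-mode option would have to decide, here is a sketch of the standard facing test: a triangle faces the light when its normal points toward the light position. The ShadowFaces modes and function names are hypothetical, and nothing here reflects how TDM's renderer actually selects shadow casters:

```python
from enum import Enum
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Tri = Tuple[Vec3, Vec3, Vec3]


class ShadowFaces(Enum):
    FRONT = "front"
    BACK = "back"
    BOTH = "both"


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def faces_light(tri: Tri, light_pos: Vec3) -> bool:
    """True if the triangle's front side (counter-clockwise winding) faces the light."""
    a, b, c = tri
    normal = cross(sub(b, a), sub(c, a))
    return dot(normal, sub(light_pos, a)) > 0


def shadow_casters(tris: Sequence[Tri], light_pos: Vec3, mode: ShadowFaces) -> List[Tri]:
    """Select which triangles would cast shadows under the hypothetical mode."""
    if mode is ShadowFaces.BOTH:
        return list(tris)
    want_front = mode is ShadowFaces.FRONT
    return [t for t in tris if faces_light(t, light_pos) == want_front]


up = ((0, 0, 0), (1, 0, 0), (0, 1, 0))    # normal +Z, faces a light above it
down = ((0, 0, 0), (0, 1, 0), (1, 0, 0))  # same triangle, reversed winding
print(shadow_casters([up, down], (0, 0, 10), ShadowFaces.BACK))  # only `down`
```

Back-face-only casting is a known trick for reducing self-shadowing artifacts on closed meshes, but it relies on the model being watertight, which may be why it would work better as an option than as a default.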

-

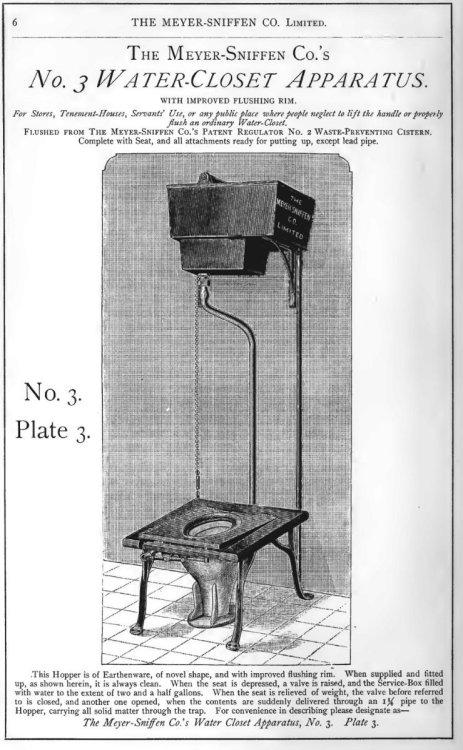

Maybe they have. I've yet to see ANY Guild-themed facilities at all, in any mission for TDM. Case in point: many a map is themed around ancient, multi-hundred-year-old castles (no plumbing evident of ANY kind), brick industrial warehouses, city back alleys, rediscovered ruins (often below a church/temple/mausoleum/graveyard), or huge old mansions, rarely even updated to town-gas lamp lighting, let alone 'modern' plumbing... This does leave out civil service offices, watch stations / prisons, high-class merchant shops & stores, multi-tenant apartments / hospitals / hotels (etc.), and factory / office buildings. The 'modern' design we are all familiar with was only perfected by 1877, yet the first version was patented around 1778. So here's an old plumbing catalogue page from 1884. Will anyone model & skin a water closet, I wonder?

-

Of course, that is one of the reasons for the decline of online forums since the advent of mobile phones. Forums on a mobile are a pain in the ass, but on the other hand, for certain things there is no real alternative to forums. Social networks can't fill that role with their sequential threads, where it is almost impossible to retrieve answers to a question that was asked days ago. For internal communication among devs, the only real option is a collaborative app such as System D (not to be confused with systemd): FOSS, free and anonymous registration, further members join by invitation only, fully encrypted and private. https://www.system-d.org

-

Ignoring is somewhat inadequate, as you still see other members engaging in a discussion with the problematic user, and as Wellingtoncrab says, such discussions displace all other content within that channel. Moderation is also imperfect, as being unpleasant to engage with is not in itself banworthy, so there is nothing more to do if such people return to their old behaviour after a moderator has had a talk with them, except live with it or move away. I'd be more willing to deal with it if it felt like there were more on-topic discussion, i.e. thoughts about recently played fan missions or mappers showcasing their progress, rather than a stream of consciousness about a meta topic that may or may not have to do with TDM. I guess the forums already serve the desired purpose, or they just compartmentalise discussions better.

-

Yep. Both checked. Tried it on the round door with only rotate, only translate, and both (my goal). In all cases the door was visually there but NON-solid and NON-frobable. I guess something changed in the base code over the past 4 years. No biggie, as I did a workaround. But that was a couple of hours of testing: was it the water against the door? No. Something screwy with the brush door? No; deleted and recreated it, converted it to a model, still NG. Created regular sliding and rotating doors, in water and out, with the round model replacing the normal one. Still NG. Was there any test I missed? On with the mapping, sally forth once more into the breaches. Mwah ha ha ha

-

A search engine with the linguistic capabilities of ChatGPT can of course be useful, but a language model like ChatGPT without access to real-time information, no matter how good the model is, is nothing more than a toy. It can serve as an assistant or as customer support for certain products, given the necessary knowledge base for that product, but no more. Today, the information from AI-powered search engines is far more reliable than that from AI chats, especially when they don't come from large monopolies that may reflect certain commercial and/or political interests in their responses, as has already been seen with Google, MS and also BraveAI, the latter with strong influences from the extreme right, and whose CEO was already forced out of Mozilla for this. Better to use independent AI. You can try these right now: https://andisearch.com (my favorite), https://www.perplexity.ai (also available as a useful browser extension), https://phind.com (especially for devs and programmers), https://you.com (the most complete).

-

In my mission, I have a round door that rotates AND translates. I started this 4 years ago, before I got cancer, and back then the door worked. Not anymore! I finally got a ROUND to testing this. It doesn't matter what type of door it is: whether the door is a round brush OR a round model, in game it is non-solid and non-frobable. I can, however, trigger it from the console and it works as it should. I am going to try making a solid (rectangular) door in front of it as an overlay and use a script to achieve my goal. Will let you know how it goes. Update: Works great. I had to do a screen grab of the circular door plus wall at the size of the rectangular door, then create a DDS image and material file, so I textured the rectangular door with it and you can't see the difference in game. Frob the rectangle, it vanishes and the circular one rolls out of the way. YAY

-

First of all, ChatGPT, regardless of version, is a language model built to interact with the user while imitating intelligence. It has a knowledge base that dates back to 2021, plus what users contribute in their chats. This means, first of all, that it is not reliable if you are looking for correct answers: if it does not find the answer in its base, it tends to invent one through approximations, or gives outright false or obsolete answers. ChatGPT won't change the future; that will happen with AI of a different nature. On the one hand, there are search engines with AI, since they have access to real-time information without needing such complex language models, and for this reason search engines are gradually going to add AI, not only Bing or Google; before those there was Andisearch, the first of all, then Perplexity.ai, Phind.com and You.com. Soon there will also be DuckDuckGoAI. On the other hand, there is generative AI that creates images, videos and even applications, music and more, like game assets or 3D models. The risk with AI came with Auto-GPT, initially a tool that seemed useful but that can be highly dangerous, since it has full access to the network and is capable of learning on its own initiative to carry out tasks that are entered as if it were a text-to-image app. This was demonstrated with ChaosGPT, the result of an instruction entered into Auto-GPT to destroy humanity, which it immediately began to pursue with extraordinary efficiency: first trying to access nuclear missile silos and, luckily, failing, then trying to gain followers on Twitter with a fake account it created, where it got more than 6000 followers, and later hiding, realizing the danger that it could be blocked or deactivated on the network. Currently nothing is known about it, but it is still a danger that cannot exactly be ruled out; it could really become Skynet.
AI is going to change the future, but not ChatGPT, which is nothing more than a nice toy.

-

The new update sounds very exciting! Already got it and will probably play a new FM again soon. I wasn't sure whether lights make full use of portals to skip even more calculations; awesome to hear that's now on the list too. That reminds me of something I asked a while ago, and I don't think anyone knew the answer with certainty: when using a targeted light / spotlight, does it improve performance compared to omni lights? I wasn't sure if the engine knows to calculate only inside the cone it's pointing at, or if behind the curtains it still treats it as a 360° projection and just visually makes it a cone. If there's an improvement, I was wondering if hooded lights could be made like that by default. Visually there should be no difference: I experimented with the outdoor lamp once, and by default it still shines in all directions... you don't see anything above it anyway since the model self-shadows, so we could likely get it looking almost identical with a wide cone. Just thought it might be a good idea to bring this up now that I remembered; for now, a nice new optimization to enjoy.
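One rough way to frame the spotlight question is to compare how much space each light type can touch. In idTech 4 a point light covers a box given by "light_radius", while a projected light covers a pyramid-shaped frustum defined by "light_target", "light_right" and "light_up" (strictly a pyramid, not a true cone). The sketch below assumes those three vectors are perpendicular and that "light_start"/"light_end" are unset, and it treats touched volume as a stand-in for cost, which is only an assumption about how the renderer scales:

```python
def point_light_volume(radius):
    """Volume of a point light's box, given light_radius (rx, ry, rz) half-extents."""
    rx, ry, rz = radius
    return (2 * rx) * (2 * ry) * (2 * rz)


def projected_light_volume(target_len, right_len, up_len):
    """Volume of a projected light's pyramid: apex at the light, base spanning
    +/- light_right and +/- light_up at distance |light_target|.
    Assumes the three vectors are mutually perpendicular."""
    base_area = (2 * right_len) * (2 * up_len)
    return base_area * target_len / 3


omni = point_light_volume((256, 256, 256))
spot = projected_light_volume(256, 256, 256)
print(omni, spot, spot / omni)  # the pyramid is 1/6 of the box here
```

For equal sizes the pyramid is only a sixth of the box, so if culling really follows the light volume, a downward-facing projected light on a hooded lamp could skip a good share of the surfaces and shadow casters an omni light would have to consider.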

-

Wishlist For Darkradiant

motorsep replied to sparhawk's topic in DarkRadiant Feedback and Development

FEATURE REQUESTS:

1. Array tool: duplicate the selected model, entity or brush via the UI along XYZ with spacing parameters (kinda like Blender's Array modifier).
2. One-click surface/material copy to either a face or the entire brush. Currently I have to set up one face using the Surface dialog and then copy/paste it (using a hotkey combo) to the desired faces (for which I still have to deselect the selected face and then select the new one). A very, very tedious process. It would be a lot smoother if copied surface parms (material, tiling, etc.) could be applied in one click in the 3D view.
3. Ability to set tiling on the entire brush numerically. Currently the numerical entry fields are grayed out in the Surface UI when a whole brush is selected.

Thanks in advance!
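To illustrate request 1, here is the placement math such an array tool would need: copies along X, Y and Z at fixed spacing, starting from the original object's origin. The function name and parameters are hypothetical and not DarkRadiant API; the actual duplication would still have to go through DarkRadiant itself:

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def array_positions(origin: Vec3, counts: Tuple[int, int, int],
                    spacing: Vec3) -> List[Vec3]:
    """Positions for a Blender-style array: counts[i] copies along axis i,
    spacing[i] units apart, starting at the original object's origin."""
    ox, oy, oz = origin
    nx, ny, nz = counts
    sx, sy, sz = spacing
    return [(ox + ix * sx, oy + iy * sy, oz + iz * sz)
            for iz in range(nz)
            for iy in range(ny)
            for ix in range(nx)]


# Three columns 64 units apart along X, two rows 128 units apart along Y.
for pos in array_positions((0, 0, 0), (3, 2, 1), (64, 128, 0)):
    print(pos)
```

The first position is the original itself, so the tool would create len(positions) - 1 duplicates.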

-

I would not yet regard GPT as general intelligence, but it certainly has aspects of it, and more will emerge in this model or the next, I'm sure. Exciting and scary times! Meanwhile, another idea occurred to me regarding continuity. I'm going to do a test where I create a conversation with just my fiction rules and possibly the plot summary, etc., then share it publicly. I can then start a new conversation for the story proper and refer it to that shared URL every few messages to refresh its memory of the core essentials. I'm not sure the plot is essential, actually, because even if it deviates from the original plot idea, it could still work. What needs to stay constant are the rules and significant story progress (e.g., Mr Johnson died in Chapter 7, so no, he can't be baking bread in Chapter 12!)
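A simpler variant of the same idea, which doesn't depend on the model being able to open a shared URL, is to paste the rules back into every Nth message. A minimal sketch: the role/content message shape resembles common chat APIs, but no API is called here, and the refresh interval of 4 is an arbitrary stand-in for "every few messages":

```python
from typing import Dict, List

Message = Dict[str, str]


def build_prompt(rules: str, history: List[Message], new_message: str,
                 refresh_every: int = 4) -> List[Message]:
    """Append the user's next message, prefixing it with the core rules on the
    first user turn and then every `refresh_every` user turns."""
    user_turns = sum(1 for m in history if m["role"] == "user")
    content = new_message
    if user_turns % refresh_every == 0:
        content = f"Reminder of the story rules:\n{rules}\n\n{new_message}"
    return history + [{"role": "user", "content": content}]


rules = "Mr Johnson died in Chapter 7."
messages = build_prompt(rules, [], "Write the opening of Chapter 12.")
print(messages[-1]["content"])
```

Significant story progress can simply be appended to the rules text as it happens, so the reminder grows with the story.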

-

I know what you mean. The things the algorithm can do once it's warmed up are astounding, and the endless list of applications to try out is mesmeric. I overdid it early on and actually gave myself a bit of tendonitis from spending every spare waking moment experimenting with it. I'm trying to pace myself better now. But I'm right there with you as regards the philosophical implications. GPT-4 has some legit weaknesses as a logic engine, but its abilities of inference and deduction are no joke, even when you strip away its overwhelming advantage of knowing everything humanity has ever uploaded to the internet pre-September 2021. It can see conceptual connections that most people would not pick up on, and it can act on them. That sounds to me like general intelligence; and it's already near or exceeding typical human level! Without trying to sound alarmist, this is not something this type of model should be able to do based on the training data available to it. There are no examples for these sorts of highly specific original deductions for it to regurgitate. The general intelligence is some sort of new emergent phenomenon, and it's got quite a lot of people in the machine learning research community equal parts excited and spooked. I don't see any new comments on either the public link or my private copy of the conversation. Maybe continuing just makes a new instance for that user?

-

Cheers. Initially I was thinking of this for lights... later I thought to include animated models too. Mesh deformation isn't that expensive, so I can see why there's little benefit there, especially as I realized per-pixel lighting would still be recalculated each frame: specularity also depends on the camera angle, not just model movement... technically we could frameskip that too, but I'm getting way ahead of myself for what would likely be a tiny benefit. Could this still work for lights, though? Recalculating shadows when something moves within a light's radius is a big cost, even if it's gotten much better with the latest changes. A shadow recalculation LOD may give a nice boost. We could test the benefit with an even simpler change: a setting to cap all shadow updates to a fixed FPS. This would probably be a few lines of code, so if you can give me a pointer, I may be able to modify my local engine clone to try it. If it offers a benefit, it can be made distance-based later. Another way would be to make a light's number of samples slowly decrease with distance, with the furthest lights dropping to just one sample like sharp / stencil shadows; shadow samples also have a big impact. What do you think of this as a form of light LOD, maybe mixed with a shadow update LOD? These actually sound like they make sense; if you think it's worth it, I can post those two on the tracker so they're not forgotten.
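The sample-count idea could look something like this: full soft-shadow samples up to a start distance, then a linear falloff to a single (sharp) sample at an end distance. All names and numbers are hypothetical; the engine's actual soft-shadow sample setting and how it would be exposed per light are not covered by this sketch:

```python
def shadow_samples(distance: float, max_samples: int,
                   start: float, end: float) -> int:
    """Hypothetical light LOD: keep max_samples up to `start`, fall linearly
    to 1 sample (sharp shadows) at `end`, and stay at 1 beyond it."""
    if distance <= start:
        return max_samples
    if distance >= end:
        return 1
    t = (distance - start) / (end - start)  # 0 at start, 1 at end
    return max(1, round(max_samples - t * (max_samples - 1)))


for d in (256, 1024, 1536, 4096):
    print(d, shadow_samples(d, max_samples=16, start=512, end=2048))
```

Because the result is an integer, the falloff happens in small steps rather than one big jump, which should help hide the transition.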

-

Ooh! We should compare notes in a few weeks. I've been trying for a while now to find tricks for re-establishing continuity between conversations. I've had some success, but nothing yet I would call satisfactory. For instance, with the Adventures of Thrumm RP game, I had to start a new session because the ChatGPT client was taking on the order of 20s per token to generate its responses at the end and was crashing every 2-3 minutes. I felt like I successfully got it back into character and into the story for the new session, but it took something like 2 pages of text and over 40 minutes of work on my part. Judge for yourself how well I did: https://chat.openai.com/share/f14f77f7-2b49-497a-990a-b8ee6f405fb1 I'm envisioning an ultimate solution in the form of AI "personas" with associated memories and biographical information in a searchable database, which the chatbot can interact with through an API based on some minimal leading prompts. Unfortunately that is still a bit beyond my depth as an engineer and AI whisperer... but I am making slow progress. Thanks! You are correct that these were each one continuous conversation (minus a few false-start branches where I submitted incomplete prompts by mistake or tried things that didn't work). I probably would not recommend going that long again. I really only did it in those examples as an experiment to see what would happen. I'd say beyond about 8 rounds of lengthy prompt-response, the model's amnesia problem completely erases any benefit it gets from the extra context of the longer conversation. Plus, in long conversations it sometimes develops pathologies like linguistic tics or personality quirks. Starting new conversations periodically is a pain, but probably still best practice. It's a new feature! This is actually the first time I've used it, so I'm not 100% clear how it works when you send it to someone with their own account.
The controls are on the left next to the chat session title in the chat list: the icons from left to right are to change the conversation title, share the conversation, and delete the conversation. If you'd like to try adding to another person's thread, here's a false start of mine you could try it on. I'll tell you if it works. (Turns out ChatGPT is chronically bad at anagrams, so vandalize away.) https://chat.openai.com/share/8d7227ab-3905-4bf1-82a3-12be4899d48f
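The persona idea above can be sketched in miniature: a biography, a list of remembered facts, and a lookup that pulls the most relevant facts into a short leading prompt. This is purely illustrative; a plain keyword-overlap search stands in for whatever searchable database and API a real setup would use, and every name here is hypothetical:

```python
import re
from dataclasses import dataclass, field
from typing import List


def words(text: str) -> set:
    """Lower-cased word set used for naive keyword matching."""
    return set(re.findall(r"\w+", text.lower()))


@dataclass
class PersonaMemory:
    """Hypothetical persona store: a biography plus remembered facts."""
    name: str
    biography: str
    memories: List[str] = field(default_factory=list)

    def remember(self, fact: str) -> None:
        self.memories.append(fact)

    def recall(self, query: str, k: int = 3) -> List[str]:
        """Return up to k memories sharing the most words with the query,
        ties broken by the order in which they were remembered."""
        q = words(query)
        scored = [(len(q & words(m)), i, m) for i, m in enumerate(self.memories)]
        scored = [s for s in scored if s[0] > 0]
        scored.sort(key=lambda s: (-s[0], s[1]))
        return [m for _, _, m in scored[:k]]

    def leading_prompt(self, query: str) -> str:
        """Minimal leading prompt: biography plus the most relevant memories."""
        lines = [f"You are {self.name}. {self.biography}", "Relevant memories:"]
        lines += [f"- {m}" for m in self.recall(query)]
        return "\n".join(lines)


thrumm = PersonaMemory("Thrumm Stoneshield", "A dwarven barbarian goat herder.")
thrumm.remember("Thrumm found a magic ring.")
thrumm.remember("Thrumm fought a necromancer.")
thrumm.remember("Thrumm became temporary king of the Iron Home Dwarves.")
print(thrumm.leading_prompt("Where is the magic ring?"))
```

Keyword overlap is crude (common words like "the" also match), so a real version would likely swap in proper search, but the shape of the loop stays the same: look up, build a short prompt, then hand it to the chatbot.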

-

A few additions and observations: We may get even better results using not just distance but also the entity's size, given the rate should probably depend on how much of your view the entity is covering at that moment. As this shouldn't need much accuracy, we can just throw in the average bounding-box size as an offset to distance to estimate the entity's total screen space. A small candle can decrease its update rate even closer to the camera, while a larger torch will retain a slightly higher rate for longer. To prevent noticeable sudden changes, like the way LOD models can be seen snapping between states, the effect can be applied gradually without artificial steps, given it's just a number and may take any value. It might be best to have a multiplier acting on top of the player's maximum or average FPS: if your top is 60 FPS, the lowest update rate beyond the maximum distance would be 30 FPS for a 0.5 minimum setting... along the way one entity may be at 0.9, meaning it ticks at 54 FPS, a further one at 0.75, meaning 45 FPS, etc. Internally there should probably be different settings for model animations and lights: a low FPS may be obvious on AI or moving objects, so you probably don't want to go lower than half the max (e.g. 30 FPS for 60 Hz)... for lights the effect can be more aggressive on soft shadows without noticeable ugliness (e.g. 15 FPS for 60 Hz). In the menu this can probably be tied to the existing LOD option, which can control both model and frameskip LODs.
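The multiplier described above can be written as a small function: the entity's average bounding-box size is subtracted from its distance (so bigger entities count as closer), and between the start and end distances the multiplier falls linearly from 1 to the minimum, with no discrete steps. Function names and the example distances are hypothetical:

```python
def update_multiplier(distance: float, size: float, start: float,
                      end: float, minimum: float) -> float:
    """Hypothetical per-entity update-rate multiplier in [minimum, 1].
    The average bounding-box size is used as an offset toward the camera,
    so larger entities keep a higher rate for longer."""
    effective = max(0.0, distance - size)
    if effective <= start:
        return 1.0
    if effective >= end:
        return minimum
    t = (effective - start) / (end - start)  # 0 at start, 1 at end
    return 1.0 - t * (1.0 - minimum)


def update_fps(max_fps: float, multiplier: float) -> float:
    return max_fps * multiplier


# With start=500, end=1500, minimum=0.5 and a 60 FPS top rate:
for d in (100, 700, 1000, 2000):
    print(d, update_fps(60, update_multiplier(d, 0, 500, 1500, 0.5)))
# At the same distance, a torch (size 100) keeps a higher rate than a candle (size 8).
print(update_multiplier(1000, 100, 500, 1500, 0.5),
      update_multiplier(1000, 8, 500, 1500, 0.5))
```

With these numbers the entity at 700 units gets a multiplier of 0.9 (54 FPS), the one at 1000 gets 0.75 (45 FPS) and anything past 1500 sits at the 0.5 floor (30 FPS), matching the figures in the post.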

-

I hope I'm not proposing some unfeasible idea that was already considered before; this stuff is fun to discuss, so no loss either way. Riding the wave of recent optimizations, I keep thinking about what more could be done to reach a round 144 FPS compatible with today's monitors. An intriguing optimization came to mind which I felt I had to share: could we gain something if we had distance-based LOD for entity updates, encompassing everything visual from models to lights? How it would work: new settings allow you to set a start distance, end distance, and minimum rate. The further away an entity gets, the lower its individual update rate, slowly decreasing from updating each frame (start distance and closer) to updating at the minimum rate (end distance and further). This means any visual change is performed with frame skips on any entity: for models such as characters, animations are updated at the lower rate; for lights, shadows are recalculated less often... even changes in the position and rotation of an entity may follow it for consistency, which would especially benefit lights with a moving origin, like fireplaces or torches held by guards, which recalculate per frame. Reasoning: light recalculation, animated models, and even individual particles can be significant contributors to performance drain. We know the further something is from the camera, the less detail it requires; this is why we have a level-of-detail system with lower-polygon LOD models for characters and even mapmodels. Thus we can go even further and extend the concept to visual updates. Just as you don't care that a faraway guard has a low-poly helmet you won't notice, you won't care if that guard is being animated at 30 FPS out of your maximum of 60, nor if the shadow of a small distant light is being updated at 15 FPS when an AI passes in front of it.
This is especially useful if you own a 144 Hz monitor and expect 144 FPS: I want to see a character in front of me move at 144 FPS, but may not even notice if a guard far away is animating at 60 FPS... I want the shadows of the light from the nearby torch to animate smoothly, but couldn't care less if a lamp meters away updates its shadows at 30 FPS instead. The question is whether this is easy to implement in a way that offers the full benefit. If we use GPU skinning, for instance, the graphics card should be told to animate the model at a lower FPS in order to actually save cycles... does OpenGL (and in the future Vulkan) let us do this per individual model? I know the engine has control over light recalculations, which would probably yield the biggest benefit. I might add more points later so as not to make the post too big; for now, what are your thoughts?
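The frame skipping itself could be driven by a per-entity accumulator: each rendered frame adds the entity's update multiplier, and the entity updates whenever the total reaches 1. When it does update, it reports how many frames have passed since its last update, so an animation can advance by the full elapsed time and distant characters don't move in slow motion. A sketch with a hypothetical class name, not TDM engine code:

```python
class FrameSkipper:
    """Hypothetical per-entity frame skipping driven by an update multiplier
    (1.0 = every frame, 0.5 = every other frame, 0.25 = every fourth)."""

    def __init__(self) -> None:
        self.accumulator = 0.0
        self.pending_frames = 0

    def tick(self, multiplier: float) -> int:
        """Call once per rendered frame. Returns how many frames' worth of
        time the entity should advance now (0 means skip this frame)."""
        self.pending_frames += 1
        self.accumulator += multiplier
        if self.accumulator >= 1.0:
            self.accumulator -= 1.0
            frames, self.pending_frames = self.pending_frames, 0
            return frames
        return 0


# A distant guard at multiplier 0.5 over one second at 144 FPS:
guard = FrameSkipper()
steps = [guard.tick(0.5) for _ in range(144)]
print(sum(1 for s in steps if s), "updates,", sum(steps), "frames of time covered")
```

A multiplier of 60/144 would give a distant guard roughly 60 updates per second on a 144 Hz display, and the same mechanism could gate shadow recalculation for lights with a lower multiplier.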

-

@Fidcal I know where you're coming from. GPT-4's continuity can sometimes falter over long stretches of text. However, I've found that there are ways to guide the model to maintain a more consistent narrative. I've not yet tried giving GPT-4 free rein to write its own long-form fiction, but I co-wrote a short story with GPT-4 that worked really well. I provided an outline, and we worked on the text piece by piece. In the end, approximately two-thirds of the text was GPT-4's original work. The story was well received by my writing group, showing that GPT-4 can indeed be a valuable contributor in creative endeavors. Building on my previously described experiments, I also ran GPT-4 through an entire fantasy campaign that eventually got so long the ChatGPT interface stopped working. It did forget certain details along the way, but (because the game master + player dynamic let me give constant reinforcement) it never lost the plot or the essential personality of its character (Thrumm Stoneshield: a dwarven barbarian goat herder who found a magic ring, fought a necromancer, and became temporary king of the Iron Home Dwarves). For maintaining the story's coherence, I've found it helpful to have GPT-4 first list out the themes of the story and generate an outline. From there, I have it produce the story piece by piece, while periodically reminding the model of its themes and outline. This seems to help the AI stay focused and maintain better continuity. Examples: The adventure of Thrumm Stoneshield part 1: https://chat.openai.com/share/b77439c1-596a-4050-a018-b33fce5948ef Short story writing experiment: https://chat.openai.com/share/1c20988d-349d-4901-b300-25ce17658b5d