Search the Community

Showing results for '/tags/forums/learning mapping/'.

-

You can start here: https://forums.thedarkmod.com/index.php?/topic/12558-useful-important-editing-links/ Specifically, I recommend Springheel's new mapper's workshop: https://forums.thedarkmod.com/index.php?/topic/18945-tdm-new-mappers-workshop/

-

Hi all,

I'm very new to TDM. I started playing a few months ago, later discovered the wonderful world of mapping with DarkRadiant, and got hooked. With retirement coming up in a couple of months, this has now become one of the hobbies on my list, which also includes music (wind instruments, synthesizers and software), SBCs and microprocessors (ARMs, Arduinos and PICO pi) and of course relaxing by the pool.

I've been having a lot of fun learning from scratch and building my first map using the Startpack. I used prefabs and models and started to build a small town, a mansion area, a forest area and a large underground maze.

I'm trying to use the map_of gui to show the current player position in the maze with an "X". I need to find a way to get the player coordinates from a script to the gui.

    // The map_of_maze.gui used with the inventory map, in this case map_of_maze.tga
    windowDef Desktop
    {
        rect 0,0,640,480
        nocursor 1

        windowDef Maze_map
        {
            rect 130,2,428,476
            background "guis/assets/game_maps/map_of_maze"
            visible 1

            windowDef Player_position
            {
                // Not sure how but dispx and dispy need to be obtained from MazePosition script.
                rect dispx,dispy,dispx+1,dispy+40
                backcolor 0, 0, 0, 0
                text "X"
                font "fonts/andrew_script"
                textscale .25
                forecolor 0, 0, 0, 0.66
                visible 1
            }
        }
    }

    // The script to be called to get the player position parameters of where the
    // windowDef Player_position rect should be made visible.
    // It will be called by trigger entities at the maze access points.
    void MazePosition()
    {
        sys.println("The Maze Position Script has been called.");

        // Getting player position coordinates in real world
        vector pos = $player1.getOrigin();
        float x = pos_x;
        float y = pos_y;
        float z = pos_z;

        // Coordinates of maze in real world
        float Lcoor = -512;  // The Left margin
        float Rcoor = 648;   // The Right margin
        float Tcoor = 3840;  // The Top margin
        float Bcoor = 644;   // The Bottom margin

        // Coordinates of maze in map_of_maze.gui
        float Lmap = 50;
        float Rmap = 350;
        float Tmap = 0;
        float Bmap = 470;

        // Calculation for coordinates transposition from real world to maze map
        float xcent = abs ((x - Lcoor) / (Rcoor - Lcoor)) * 100;
        float ycent = abs ((y - Tcoor) / (Tcoor - Bcoor)) * 100;
        float dispx = (((Rmap - Lmap) * xcent)/100) + Lmap;
        float dispy = (((Bmap - Tmap) * ycent)/100) + Tmap;

        // dispx and dispy need to be sent to windowDef Player_position before calling the GUI
    }

Any help in finding a solution to my little problem would be greatly appreciated. In the meantime I'll keep building.

Happy Mapping
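The coordinate transposition in the script above is a plain linear interpolation, and it can be checked outside the game before wiring it to the GUI. The following is only a sketch in Python, not TDM script: the function name `world_to_gui` and the tuple layout are made up for illustration, and it clamps the result to the map rectangle instead of using `abs()`, which is a deliberate change from the posted script. It does not answer how to pass the values to the GUI.

```python
def world_to_gui(x, y, world, gui):
    """Map a world-space (x, y) to GUI coordinates by linear interpolation.

    world and gui are (left, right, top, bottom) tuples (hypothetical layout).
    World Y is assumed to grow towards the top margin, GUI Y towards the bottom.
    """
    w_left, w_right, w_top, w_bottom = world
    g_left, g_right, g_top, g_bottom = gui

    # Fraction of the way across (0 = left edge, 1 = right edge).
    fx = (x - w_left) / (w_right - w_left)
    # Fraction of the way down (0 = top edge, 1 = bottom edge).
    fy = (w_top - y) / (w_top - w_bottom)

    # Clamp so a player standing outside the maze keeps the "X" on the map.
    fx = min(max(fx, 0.0), 1.0)
    fy = min(max(fy, 0.0), 1.0)

    disp_x = g_left + fx * (g_right - g_left)
    disp_y = g_top + fy * (g_bottom - g_top)
    return disp_x, disp_y


# Margins taken from the post above.
MAZE_WORLD = (-512, 648, 3840, 644)
MAZE_GUI = (50, 350, 0, 470)
```

With the margins from the post, the top-left world corner maps to the top-left of the map area and the bottom-right corner to the bottom-right, which is a quick sanity check for the sign of the Y term.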

-

Hello, my name is Tim, I'm from Berlin and because I grew up in East Germany, I don't speak English very well. I'm sorry about that. I've been playing Dark Project since 1999 and I'm very grateful that TDM exists.

I just played A Night in Altham. Yesterday the mouse 1 button (Attack) stopped responding. The mouse wheel still worked, but I couldn't call up a weapon to use it. I changed the mouse button mapping, quit the game, restarted it at save points, but the mouse 1 button would not relay the command. I reinstalled the game using the existing installation. After that, the saves were lost as I had downloaded a newer version of TDM and all save points were gone. I started the mission again with the new version and realised that, for example, when I drew the bow, I could see the arrow but not the bow. So I deleted the game completely and reinstalled everything.

I invested a lot of time in the mission and completed all but one of the missions, including the optional ones. All the missions I had played before worked perfectly. Input via mouse 1 works with all other PC applications. If I play the mission again, do I have to expect the error again? Many thanks for any answers.

The PC's operating system and driver configuration are up-to-date:

Operating system name: Microsoft Windows 10 Home
Version: 10.0.19045 Build 19045
System manufacturer: ASUS
System model: All Series
System type: x64-based PC
System SKU: All
Processor: Intel(R) Core(TM) i5-4460 CPU @ 3.20GHz, 3201 MHz, 4 core(s), 4 logical processor(s)
BIOS version/date: American Megatrends Inc. 2106, 27/11/2014
SMBIOS version: 2.7
Version of the embedded controller: 255.255
BIOS mode: UEFI
BaseBoard manufacturer: ASUSTeK COMPUTER INC.
BaseBoard product: H81M-PLUS
BaseBoard version: Rev X.0x
Platform role: Desktop
Secure boot state: On
PCR7 configuration: binding not possible
Windows directory: C:\Windows
System directory: C:\Windows\system32
Start device: \Device\HarddiskVolume1
Locale: Germany
Hardware abstraction level: Version = "10.0.19041.3636"
Installed physical memory (RAM): 8.00 GB
Total physical memory: 7.93 GB
Available physical memory: 4.05 GB
Total virtual memory: 17.4 GB
Available virtual memory: 11.9 GB
Size of the swap file: 9.50 GB
Hyper-V - VM monitor mode extensions: Yes
Hyper-V - SLAT extensions (Second Level Address Translation): Yes
Hyper-V - Virtualisation enabled in firmware: No
Hyper-V - Data Execution Prevention: Yes

Graphics: NVIDIA GeForce GT 610
PNP device ID: PCI\VEN_10DE&DEV_104A&SUBSYS_847B1043&REV_A1\4&3834D97&0&0008
Adapter type: GeForce GT 610, NVIDIA compatible
Adapter description: NVIDIA GeForce GT 610
Adapter RAM: 1.00 GB (1,073,741,824 bytes)
Installed drivers: C:\Windows\System32\DriverStore\FileRepository\nv_dispi.inf_amd64_c1a085cc86772d3f\nvldumdx.dll, C:\Windows\System32\DriverStore\FileRepository\nv_dispi.inf_amd64_c1a085cc86772d3f\nvldumdx.dll, C:\Windows\System32\DriverStore\FileRepository\nv_dispi.inf_amd64_c1a085cc86772d3f\nvldumdx.dll, C:\Windows\System32\DriverStore\FileRepository\nv_dispi.inf_amd64_c1a085cc86772d3f\nvldumdx.dll
Driver version: 23.21.13.9135
INF file: oem38.inf (section Section002)
Colour levels: Not available
Colour table entries: 4294967296
Resolution: 1920 x 1080 x 60 Hz
Bits/pixel: 32
Memory address: 0xF6000000-0xF6FFFFFF
Memory address: 0xE8000000-0xEFFFFFFF
Memory address: 0xF0000000-0xF1FFFFFF
I/O port: 0x0000E000-0x0000E07F
IRQ channel: IRQ 16
I/O port: 0x000003B0-0x000003BB
I/O port: 0x000003C0-0x000003DF
Memory address: 0xA0000-0xBFFFF
Driver: C:\WINDOWS\SYSTEM32\DRIVERSTORE\FILEREPOSITORY\NV_DISPI.INF_AMD64_C1A085CC86772D3F\NVLDDMKM.SYS (23.21.13.9135, 16.73 MB (17,544,792 bytes), 15.11.2023 16:42)

Translated with DeepL.com (free version)

-

I seem to have run into a wall. I'm consistently getting the same error when compiling AAS as part of the dmap process: "WARNING: reached outside from entity..." followed by the entity number and name. The issue is that the pointfile generated goes through solid brushwork, and the origin of the entity in question isn't in the void. It's always my NPCs, and if I delete that one, another random guard will cause it to fail. I'm really not sure what the issue is. These guards were perfectly fine when I dmapped previous versions of this map; only now am I getting this behavior. I checked around the forums to see if anyone had encountered this before but haven't really seen any mention of it. Any thoughts?

-

Fan Mission: The Builder's Influence (2010/03/20)

Fiver replied to Springheel's topic in Fan Missions

In 2.12, the placing of two objects in the kitchen needs adjusting:

* The cauldron is floating to the left of the stove at coordinates (246.74 523.07 30.25 -6.1 179.2 0.0).
* A bowl is sticking out from the bottom of a shelf at coordinates (229.17 696 30.25 -15.9 -174.3 0.0).

I otherwise enjoyed this mission, which is well crafted in both mapping and story.

Things I particularly liked:

* That it was possible to get an overview from the roof tops.
* Multiple entry and exit points.
* Evenly distributed keys and notes with hints drove the story forward at a good pace.
* The setting and story elements: The rationale for builders patrolling the streets and the city hall. The chilling descriptions of the inquisition in books and notes, and how the city had invited this evil (or possibly even been tricked into doing so).

Things that could improve:

* I agree with thebigh's comment that it would have been nice if it was possible to enter some other building. Perhaps a room of a relative, or friend, of a victim of the inquisition and with a diary where they describe the victim's innocence and the fear of unfounded accusations keeping the larger public from ending the panic by coming forth with questions or as an alibi. Partly for additional story (mood) but also partly because it makes the surrounding accessible area appear more of an actual city than a scenery flat. Both OMs, A New Job and Tears of St. Lucia, have one such room each.

I don't have one. Maybe @Havknorr? TDM on a VM on M1: https://forums.thedarkmod.com/index.php?/topic/21655-tdm-210-on-m1-parallels/

-

@nbohr1more Update: As the leaking occurs in a corner I tried to add two separate slivers of shadowcaulk (turned into func_static). It leaks. But if I combine the two into a single L-shaped func_static, the leak disappears. I didn't add forceShadowBehindOpaque 1. So I guess I'll try the method of adding shadowcaulked func_statics to the problematic sections... Not a clean solution, but who said mapping should be a clean affair?

-

Sure!

[[FAQ#Troubleshooting]] the link to the forum is wrong, change it to https://forums.thedarkmod.com/ (or use the same value as set for the variable "Discussion forum" in the wiki menu instead). This seems important and has been on my list for a long time.

The following changes are small but should be uncontroversial:

* [[The_Dark_Mod_-_Compilation_Guide]] "Linus distro" -> "Linux distro"
* [[The_Dark_Mod_Gameplay]] in section See Also, add a link to [[Bindings and User Settings]]
* [[Bindings_and_User_Settings]] change "DarkmodKeybind.cfg" to "DarkmodKeybinds.cfg"
* [[Installation]] add the definite article to the first two bullet points.
* [[Installation]] "When the game doesnt start the first time, the game create logs." -> "If the game doesn't start the first time, the game creates logs."
* [[TDM_Release_Mechanics]] "will be heavily changed of even removed by" -> "will be heavily changed, or even removed, by"
* [[TDM_Release_Mechanics]] "links to bugtracker as especially welcome" -> "links to issues in the bugtracker are especially welcome"
* [[Fan Missions]] change the redirect (from the category) to the article [[Fan Missions for The Dark Mod]]
* [[FAQ#What_is_The_Dark_Mod?]] create a sub-header "Which license does TDM use?" and link to https://svn.thedarkmod.com/publicsvn/darkmod_src/trunk/LICENSE.txt

I would have linked to https://github.com/fholger/thedarkmodvr/wiki/Gamepad-support from [[Bindings_and_User_Settings#Gamepad_Default_Bindings]] when I learned about it in January and noticed it was missing from the wiki article, but the article has since been updated (by you, actually) in April.

-

As detailed above (see hidden content), 2 characters may be chosen to replace duplicate characters in the existing TDM codepoint map. Since I didn't hear any feedback here, I have chosen Ğ/ğ, with breve (cup), where:

* Ğ uses TDM codepoint 0x88
* ğ uses TDM codepoint 0x98

Why these? Because they are reasonable language-wise, and easy to bitmap-draw. At the moment, I will be doing Stone 24pt glyphs and DAT entries for these characters. I intend to add a bugtracker entry (for assignment to me) for additional work needed to fully support this new mapping.
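To make the two assignments concrete, here is a minimal Python sketch of how a text encoder could apply them. Only the two codepoints (0x88 and 0x98) come from the post. The table name `TDM_OVERRIDES`, the function `to_tdm_bytes`, and the choice to reject every other non-ASCII character are assumptions for illustration; the rest of TDM's codepoint map is not modelled here.

```python
# Hypothetical override table: Unicode character -> TDM single-byte codepoint.
# Only these two entries are taken from the post above.
TDM_OVERRIDES = {
    "\u011e": 0x88,  # Ğ, LATIN CAPITAL LETTER G WITH BREVE
    "\u011f": 0x98,  # ğ, LATIN SMALL LETTER G WITH BREVE
}


def to_tdm_bytes(text, overrides=TDM_OVERRIDES):
    """Encode text to single bytes, applying the override table.

    ASCII passes through unchanged. Any other character that is not in the
    override table raises ValueError, because this sketch does not know the
    remaining TDM mappings.
    """
    out = bytearray()
    for ch in text:
        if ch in overrides:
            out.append(overrides[ch])
        elif ord(ch) < 0x80:
            out.append(ord(ch))
        else:
            raise ValueError(f"no mapping known for {ch!r}")
    return bytes(out)
```

For example, `to_tdm_bytes("dağ")` yields the bytes for "d" and "a" followed by 0x98.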

-

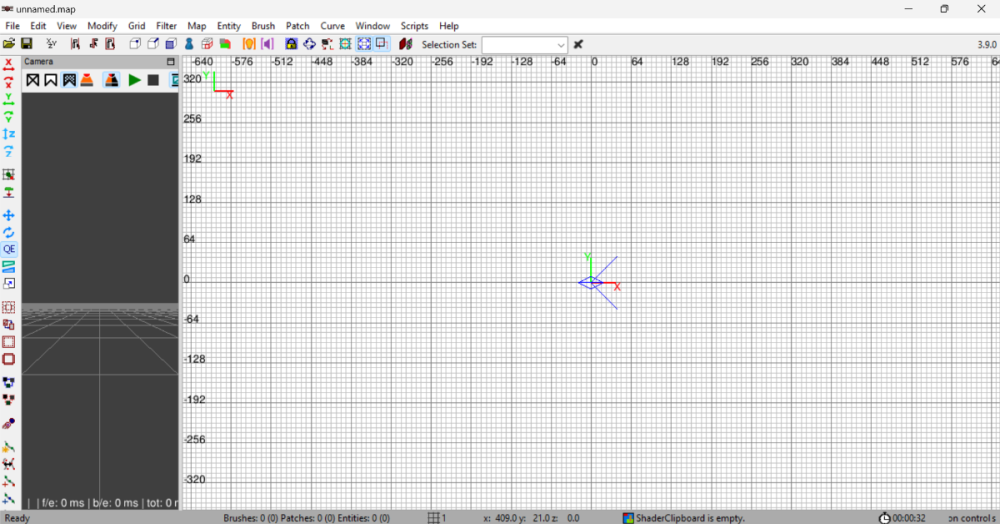

Hi, I've been trying to get into Doom 3 mapping using the DarkRadiant editor, which has been going well on my PC. However, on my laptop, the "media" tab doesn't seem to be anywhere. This is what I see: just the camera and default views. I've been looking at tutorials to see if it's something that has to be enabled in a newer update, but it doesn't seem so. I went to the Window dropdown menu to manually enable the Media tab (also via the M key), but nothing happens. Or rather, DarkRadiant seems to go "out of focus" and I need to click it to get back in, as if it opened a menu somewhere but I still can't see it. If anybody has any advice about what I'm missing or overlooking (or another way of applying textures to brushes?), I'd greatly appreciate it. Thanks.

-

Vertex blending not working with bump maps

OrbWeaver replied to grodenglaive's topic in DarkRadiant Feedback and Development

I tried that. Unfortunately, removing [inverse]vertexColor from one or both of the bump stages makes no difference.

Right, we do the same in DR: read them one by one, then assemble them into "triplets" of diffuse/bump/specular maps so the shader can process all three at once. My guess is that the vertex colours are not being passed through to the part of the shader which deals with the normal mapping, so the last normal map is being treated as the one and only normal map.

If there are no objections, I can commit my red/blue example into the maps/test/dr directory. The 256x256 normal maps are only 87K and being RGTC/BC5 they also exercise another part of the shader which can easily get broken (at least in DR).

Any tips for managing space efficiently when mapping? Although I'm proud of my first FM, its interior spaces were admittedly too open for their own good. I'm working on my submission for the 15th anniversary contest and making extensive use of AI models and measuring tools for scale, but I'm still uncertain as to what the ideal, comfortable amount of open floor space is in a room.

-

Just use 32-bit binaries plus the Large Address Aware flag! See "/LARGEADDRESSAWARE (Handle Large Addresses)" on Microsoft Learn and "Large Address Aware" on TechPowerUp Forums.
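Whether a given Windows executable already has this flag set can be read from its PE header: the flag is bit 0x0020 (`IMAGE_FILE_LARGE_ADDRESS_AWARE`) of the `Characteristics` field in the COFF file header. The Python sketch below only inspects bytes that have already been read from a file. The function name `is_large_address_aware` is made up for illustration, and nothing here is specific to TDM's binaries.

```python
import struct

# Bit in the COFF header's Characteristics field (PE/COFF specification).
IMAGE_FILE_LARGE_ADDRESS_AWARE = 0x0020


def is_large_address_aware(data: bytes) -> bool:
    """Return True if the PE image in `data` has the Large Address Aware flag."""
    if data[:2] != b"MZ":
        raise ValueError("not an MZ executable")

    # e_lfanew at offset 0x3C holds the file offset of the "PE\0\0" signature.
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
    if data[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("PE signature not found")

    # The COFF header follows the 4-byte signature; Characteristics is its
    # last field, 18 bytes into the 20-byte header.
    (characteristics,) = struct.unpack_from("<H", data, pe_offset + 4 + 18)
    return bool(characteristics & IMAGE_FILE_LARGE_ADDRESS_AWARE)
```

A typical use would be `is_large_address_aware(open(path, "rb").read(4096))`, assuming the header sits within the first 4 KiB, which is usual but not guaranteed.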

-

Isn't that just if you have a moveable or something behind a wall and its frob_distance is greater than the thickness of the wall? Walls don't block frobbing unfortunately, which is why you get those mapping bugs where you can frob paintings through the wall.

-

I hope that is not the new TDM version. https://forums.thedarkmod.com/index.php?/topic/20784-render-bug-large-black-box-occluding-screen/

-

For free ambience tracks it's as Freky said: you look around on the internet for tracks with the appropriate license to be included in your FM. Fortunately, you likely don't even need to bother doing this as a beginner as there's an entire "Music & SFX" section of the forums full of good ambient tracks for you to use if the stock tracks do not meet your fancy. You might also be interested in Orbweaver's "Dark Ambients", which come with a sndshd file already written for you.

-

Beta Testers Wanted. The Lieutenant 3: Foreign Affairs

Frost_Salamander replied to Frost_Salamander's topic in Fan Missions

For the FM? For beta 1 it's here: https://drive.proton.me/urls/H1QBB04GA0#oBZTb1CmVFQb I've already done around 100 fixes though, so you might want to wait for beta 2 which should be ready in a couple of days hopefully. All links are in the first post of the beta thread here: https://forums.thedarkmod.com/index.php?/topic/22439-the-lieutenant-3-foreign-affairs-beta-testing/

Also, I've built another console app, "datBounds", to visualize the bounding box around each bitmap character. Starting from your choice of .dat, .fnt, or .ref file, it generates a set of .tga files, e.g., stone_0_24_bounds.tga & stone_1_24_bounds.tga. You can optionally include a 2-hex-digit label on each bounding box. More about that in May. I'm using the results of this to understand the current complex codepoint mapping of the Stone 24pt glyphs, and develop the workplan and grid layouts for upcoming work.

-

Interesting idea. Not sure about my upcoming time availability to help. A couple of concerns here:

- I assume the popup words use the "Informative Texts" slot, e.g., where you might see "Acquired 80 in Jewels", so it likely wouldn't interfere with that or with already-higher subtitles.
- There are indications that #str is becoming unviable in FMs; see my just-posted: https://forums.thedarkmod.com/index.php?/topic/22434-western-language-support-in-2024/

-

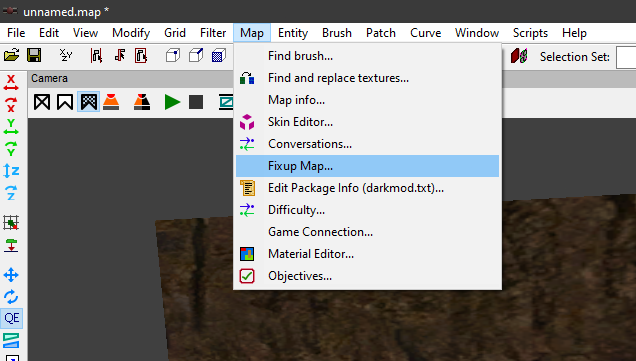

In post https://forums.thedarkmod.com/index.php?/profile/254-orbweaver/&status=3994&type=status @nbohr1more found out what the Fixup Map functionality is for. But what does it actually do? Does it search for def references (to core?) that don't exist anymore and then link them to defs with the same name elsewhere? I would also recommend changing the name to something that makes its purpose clearer; "Fixup Map" could mean anything. And it should be documented in the wiki.