Search the Community

Showing results for '/tags/forums/model/'.

-

Is it possible to make skins for brushes/patches by converting them to func_static? Models are func_static when you add them in DR, but in the skin file you reference them by model name instead of entity name. When you convert a brush/patch, though, it uses a model with the same name as the entity, so in this case func_static_1, for example. Would it be enough to use that in the skin file? The wiki article does state, however, that it's important to add an extension.

-

It's strange this is not mentioned on the wiki page, though I do find it logical from a programming standpoint. Cool, didn't think of that. I wonder if it's possible to do something like this: skin one_brick_teal_blue { model models/title_models/walls/wooden_frame/straight_frame/* textures/darkmod/plaster/plaster_01 textures/darkmod/stone/brick/blocks_tealblue_dark } That way you could make folders of specific models that you can skin in the same way.

-

Your skins don't have a model specified in the skin code. How do you think it works that way? Edit: The reason this happens (I think) is that the skin name cannot start with a number. skin one_brick_teal_blue { model models/title_models/walls/wooden_frame/straight_frame/straight_frame_wall_128_x_128.ase textures/darkmod/plaster/plaster_01 textures/darkmod/stone/brick/blocks_tealblue_dark }

-

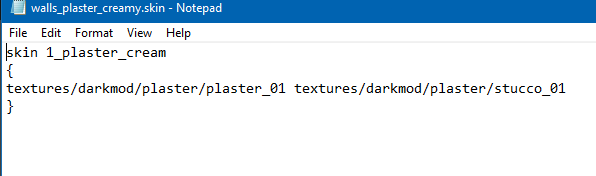

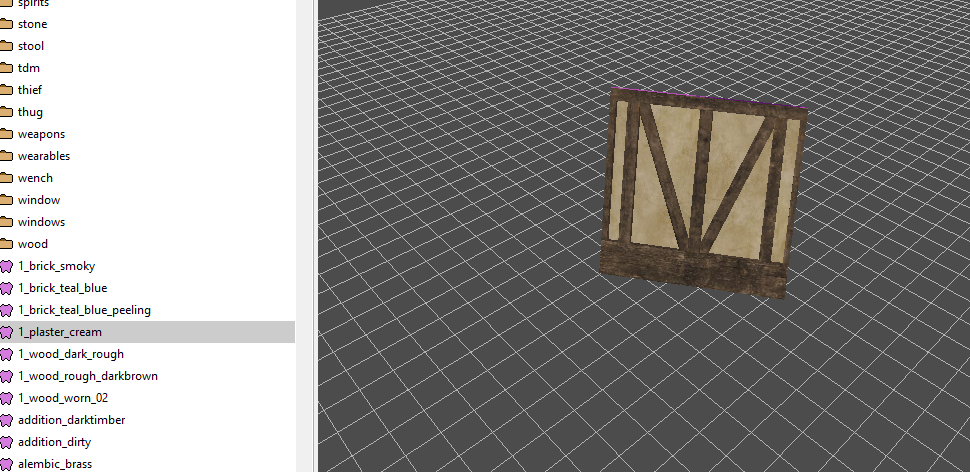

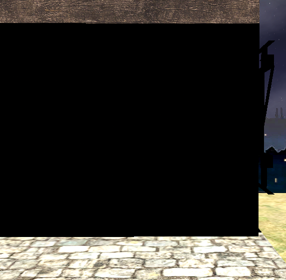

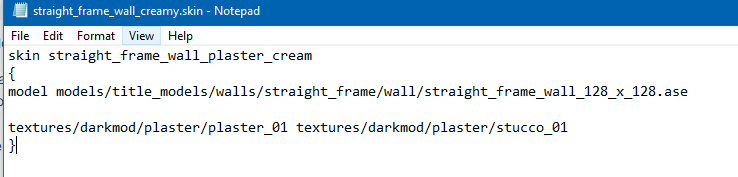

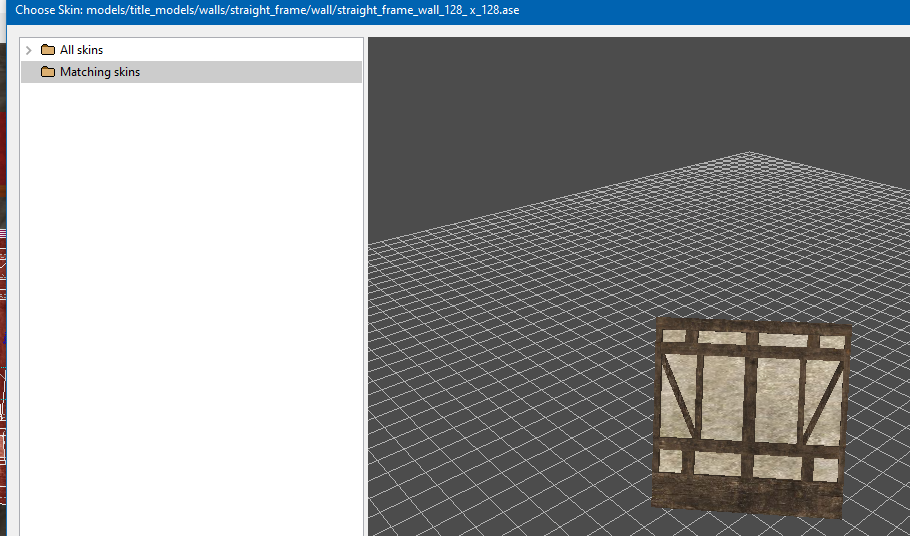

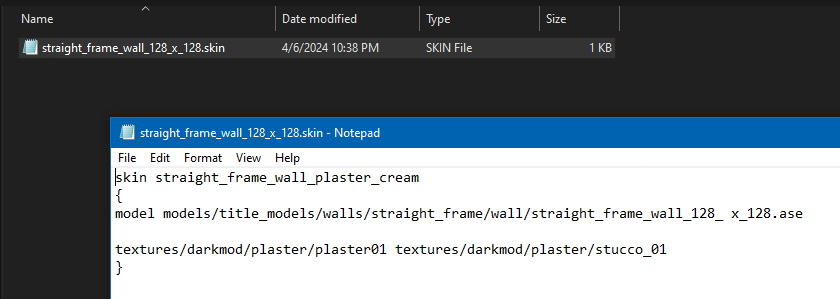

Alright, new problem with making these skins (or should I make a new thread about this?) Why are my skinned models coming up black? Here is my updated code for a simple skin. And here is the model in the skin editor, changed to its creamy, plaster version. Yet for some reason, all of my skins are pure black. The wiki says this is caused by the editor not finding the skin definition, and that there are spelling errors somewhere. I am not sure what this means, though, since all of my directory paths are spelled right (otherwise, how would the skin editor display them perfectly fine?) Does the name of the file have to match the declared skin name?

-

Yes. Sure, I will change it, but I do mind. In addition to changing the forum title, I have also had the name of the pk4 changed in the mission downloader and the name changed on the thiefguild.com site. It's not just some "joke". The forum post and thread are intended to be a natural extension of the mission’s story, a concept that is already SUPER derivative of almost any haunted media story or most vaguely creepy things written on the internet in the past 10 or 15 years. Given your familiarity with myhouse.wad, you also can clearly engage with something like that on some conceptual level. Just not here on our forums? We can host several unhinged racist tirades in the off-topic section but can’t handle creepypasta without including an advisory the monsters aren’t actually under the bed? (Are they though?) I am also trying to keep an open mind, but I am not really feeling your implication that using a missing person as a framing of a work of fiction is somehow disrespectful to people who are actually gone. I have no idea, as even a mediocre creative person, what to say to that, or why I need to be responsible for making sure nobody potentially believes some creative work I am involved in, or how that is even achievable in the first place. Anyway, apologies for the bummer. That part wasn’t intentional. I am still here. I will also clarify that while I love the game, I never got the biggest house in Animal Crossing either. In the end Tom Nook took even my last shiny coin.

-

FM Release: Sneak and Soufflé - April Fools mission (01.04.2024)

STRUNK replied to Goldwell's topic in Fan Missions

Well, it looks very good! I tried generating some textures with AI, and it looked quite good, but the free model(s) lost the option to generate images and I'm too cheap to pay for it : P

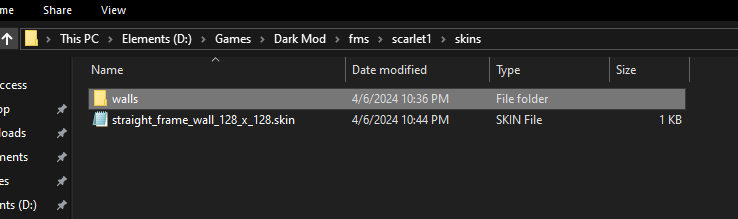

Did you set Windows to show file extensions? Otherwise a file named blabla.skin.txt shows in Windows as blabla.skin. Edit: Never mind, the screenshot shows it's a SKIN file. In general I would recommend using Notepad++ as a text editor instead. I use these problem cases to (re)learn how to do things, but after copying the file and folder structure from your example with another model, in my case the skin showed up fine. Putting the skin in a subfolder doesn't work for me, though.
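The hidden-extension problem mentioned above can also be checked without changing any Explorer settings. The following is only a minimal sketch: the function name `find_hidden_txt_skins` and the folder you pass in are hypothetical, and it only reports files whose real name ends in `.skin.txt`; it knows nothing about how DarkRadiant itself scans for skins.

```python
from pathlib import Path


def find_hidden_txt_skins(skins_dir):
    """Return relative paths of files whose real name ends in '.skin.txt'.

    With "hide known file extensions" enabled, Windows displays such a file
    as 'name.skin', although it is really a .txt file.
    """
    root = Path(skins_dir)
    hits = []
    for path in root.rglob("*"):  # also walks subfolders
        if path.is_file() and path.name.lower().endswith(".skin.txt"):
            hits.append(path.relative_to(root).as_posix())
    return sorted(hits)
```

Calling `find_hidden_txt_skins` on your mission's skins folder returns an empty list when no file has the double extension.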

-

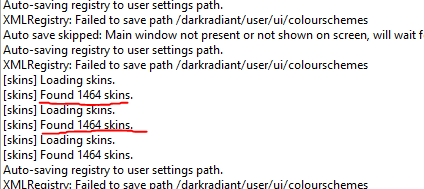

There are no console errors when I reload skins. I did double check and sure enough, one of the texture directories was incorrect. I went ahead and added it. Ah, of COURSE there would be a blank space somewhere...alright, I went ahead and fixed it. While both of your observations were correct, it unfortunately still doesn't load the new skin. I've attempted to reload it with no success. Yeah I suppose that would be important to explain lol. When I go into the model view, and click "Change Skin", it doesn't display a new skin. The console log confirms this, as before and after me making changes/reloading the skin, it only finds the same amount of skins.

-

I think you need to be more specific. What exactly "does not work"? Does the skin not show up in DR? Does it show up but crash DR? Does it appear but replace the wrong texture, or attach to the wrong model? From the screenshot of the skin file in Notepad it looks like you have a space in the model name, but I'm not sure if that's the cause of the problem or just a cosmetic artifact of the screenshot.
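The failure modes raised in these posts (no `model` line, a skin name that starts with a digit, a stray space in the model path) are easy to miss by eye. Below is a rough lint sketch for them. It is not DarkRadiant's parser: the function name `lint_skin_text` is hypothetical, it assumes one statement per line inside each `skin name { ... }` block, and the "name must not start with a digit" rule is only the suspicion voiced in one of the posts, not a confirmed rule.

```python
import re

# Matches "skin <name> { ... }" blocks; assumes no nested braces.
SKIN_BLOCK = re.compile(r"\bskin\s+(\S+)\s*\{(.*?)\}", re.DOTALL)


def lint_skin_text(text):
    """Return a list of human-readable warnings for a .skin file's text."""
    problems = []
    for name, body in SKIN_BLOCK.findall(text):
        # Suspected rule from the thread, not a confirmed engine rule.
        if name[0].isdigit():
            problems.append(f"{name}: skin name starts with a digit")
        model_lines = []
        for line in body.splitlines():
            tokens = line.split("//")[0].split()  # drop // comments
            if tokens and tokens[0] == "model":
                model_lines.append(tokens[1:])
        if not model_lines:
            problems.append(f"{name}: no 'model' line")
        for tokens in model_lines:
            # More than one token usually means a space inside the path.
            if len(tokens) != 1:
                problems.append(
                    f"{name}: model line should contain exactly one path, got {tokens}"
                )
    return problems
```

An empty result only means none of these three patterns were found; a wrong texture path, for example, would still go unnoticed.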

-

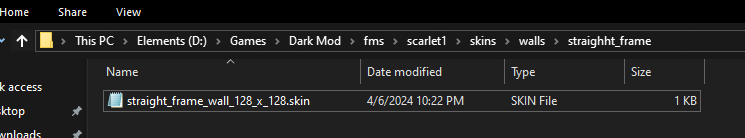

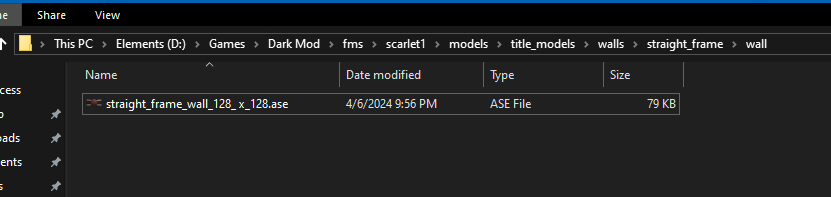

I have been attempting to create a skin for a model. No matter how closely I follow the wiki tutorial, I can't seem to get it to work. Here is a pic of the model directory, the skin directory, and the skin file I wrote. I even tried to make a standalone copy in the skins folder, in case DR doesn't like digging through folders when pulling up skins. Am I missing something? Am I running into problems because skins cannot be made for .ase files?

-

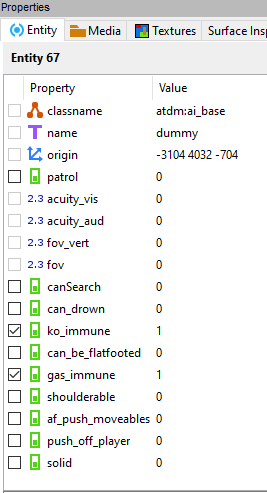

@Petike the Taffer Well, that was challenging, but I managed to get it to work with a dummy AI (but with a warning message). Using hide doesn't work on the dummy, as each time it moves it un-hides. You need to use an invisible model. I used the atdm:ai_base, which surprisingly worked with these spawn args, so it doesn't interfere with the player position and is as inert as possible. Then you just need to trigger a follow script, e.g.: void follow_dummy() { $dummy.setOrigin($player1.getOrigin()); $dummy.hide(); //not sure why this is needed, but it stopped working when I deleted it sys.wait(0.2); //needed to prevent game from hanging thread follow_dummy(); } Alternatively, I tried to just bind the dummy to the player instead, but then the follower AI wouldn't follow me.

-

I've seen fun workarounds like that in other game modding as well. Years ago, maybe even a decade, some fella who was making a mod for Mount & Blade over at the Taleworlds forums revealed that he put invisible human NPCs on the backs of regular horse NPCs, then put the horse NPCs inside a horse corral he built for one of his mod's locations/scenes and then did some minor scripting, so the horses with invisible riders would wander around the corral. The end result was that it looked like they were doing this of their own will, rather than an NPC rider being scripted to ride around the corral slowly. Necessity is the mother of invention. I don't know about the newest Mount & Blade game, but the first generation ones (2008-2022) apparently had some sort of hardcoded issue back in the earlier years, where if you left a horse NPC without a rider in its saddle, the horse would just stand around and wait and you couldn't get it to move around. Placing an invisible rider in the saddle suddenly made riderless horses wandering around viable again, at least for background scenes, for added atmosphere. First generation M&B presumed you'd mostly be seeing horses in movement with riders, and the only horses-wandering-loosely animations and scripting were done for situations when the rider was knocked off their horse or dismounted in the middle of a battle. Hence the really odd workarounds. So, an invisible NPC trick might not be out of the question in TDM, even though you could probably still bump into it, despite its invisibility.

-

If you can do this, I don't know how. But it's something I want as well and was actually going to raise as a feature request. I think speakers are spherical so that they model real sound, which radiates outwards from a source. I find this doesn't work so well in some scenarios, though:

Water. For example, you want to hear the sound of waves lapping a shoreline, or a running-water sound for a stream, river or canal. If the shoreline or stream is on the longer side, you need a speaker with a huge radius to cover it, and the sound extends too far perpendicular to the body of water. Alternatively you can use multiple speakers, but then you have to manage overlap and it becomes a pain.

Wind. Same idea, but vertical: if you have a long edge or balcony, you need a large-radius speaker to cover it, and it might extend too low, so you hear wind noises on the ground.

@Petike the Taffer If all you want is for a sound to fill a room, just use the location system ambients instead. But you can only have one sound, I think, so you couldn't have, say, your ambient music and also a weather sound at the same time without using a speaker for one of them.
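To put rough numbers on the radius trade-off for long shorelines or ledges, here is a small geometric sketch. It treats each speaker as an ideal sphere centred on a straight line and ignores everything the engine actually does with sound propagation; both function names are hypothetical.

```python
def speaker_radius_for_line(length, n_speakers):
    """Smallest radius so that n evenly spaced spheres cover a line segment.

    The same radius is also how far the sound spills sideways (or downwards)
    away from the line.
    """
    if n_speakers < 1:
        raise ValueError("need at least one speaker")
    return length / (2 * n_speakers)


def speaker_positions(length, n_speakers):
    """Centres of the speakers along the line, measured from one end."""
    step = length / n_speakers
    return [(i + 0.5) * step for i in range(n_speakers)]
```

For a 1024-unit shoreline, a single speaker needs a radius of 512 units and spills 512 units inland, while four speakers at 128, 384, 640 and 896 units need only 128 each. At that minimum radius neighbouring spheres only just touch, so in practice some overlap has to be added, which is the management pain the post describes.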

-

I was actually looking for a good pumpkin pie model while making this early on, but couldn't find anything

-

The Dark Mod 15th Anniversary Contest - Entry Thread

SeriousToni replied to nbohr1more's topic in Fan Missions

@Springheel Speaking of Springheel, I wonder if he will manage to join the contest. I really liked his first map back in the day. I am curious to see what he could do now with all his cool model asset modules.

DarkRadiant 3.9.0 is ready for download.

What's new:

- Feature: Add "Show definition" button for the "inherit" spawnarg
- Improvement: Preserve patch tesselation fixed subdivisions when creating caps
- Improvement: Add Filters for Location Entities and Player Start
- Improvement: Support saving entity key/value pairs containing double quotes
- Improvement: Allow a way to easily see all properties of attached entities
- Fixed: "Show definition" doesn't work for inherited properties
- Fixed: Incorrect mouse movement in 3D / 2D views on Plasma Wayland
- Fixed: Objective Description flumoxed by double-quotes
- Fixed: Spinboxes in Background Image panel don't work correctly
- Fixed: Skins defined on modelDefs are ignored
- Fixed: Crash on activating lighting mode in the Model Chooser
- Fixed: Can't undo deletion of atdm_conversation_info entity via conversation editor
- Fixed: 2D views revert to original ortho layout each time running DR.
- Fixed: WX assertion failure when docking windows on top of the Properties panel on Linux
- Fixed: Empty rotation when cloning an entity using editor_rotatable and an angle key
- Fixed: Three-way merge produces duplicate primitives when a func_static is moved
- Fixed: Renderer crash during three-way map merge
- Internal: Replace libxml2 with pugixml
- Internal: Update wxWidgets to 3.2.4

Windows and Mac downloads are available on Github: https://github.com/codereader/DarkRadiant/releases/tag/3.9.0 and of course linked from the website https://www.darkradiant.net

Thanks to all the awesome people who keep creating Fan Missions!

Please report any bugs or feature requests here in these forums, following these guidelines:

- Bugs (including steps for reproduction) can go directly on the tracker. When unsure about a bug/issue, feel free to ask.
- If you run into a crash, please record a crashdump: Crashdump Instructions
- Feature requests should be suggested (and possibly discussed) here in these forums before they may be added to the tracker.

The list of changes can be found on our bugtracker changelog.

Keep on mapping!

-

TDM 15th Anniversary Contest is now active! Please declare your participation: https://forums.thedarkmod.com/index.php?/topic/22413-the-dark-mod-15th-anniversary-contest-entry-thread/

-

Ah, pity I wasn't reading the forums back in February. I'm fond of that game, along with Bugbear's other early title, Rally Trophy. I was never too good at FlatOut, but it was always a hoot to play.

-

Allow broadhead arrows to break glass lamps

grodenglaive replied to MirceaKitsune's topic in The Dark Mod

Thanks. No scripts were harmed in the making of this. It's pretty simple to do because of the broken and break spawn args.

1. It does distinguish, but I don't know why. It must be a property inherent in the glass material.
2. Good question, I'll have to get back to you.
3. I don't know how to use skins. OK, I tested it on a stock model. That doesn't work. If you use the same model for "broken", but just change the skin in the properties, it also applies the skin to the unbroken model (and vice versa).

Oh, I just discovered you don't even need to put a stim/response on the arrow. It automatically breaks glass when you add the break and broken spawn args on the object (you still need to make a broken model, of course). Isn't that handy?

Allow broadhead arrows to break glass lamps

MirceaKitsune replied to MirceaKitsune's topic in The Dark Mod

Oh wow, that is amazing! It must require a custom script, I imagine? Didn't think that was possible even with one and the S/R system, that's very impressive. Definitely curious about a few things:

1. Does it distinguish between collisions with the glass and frame? If the arrow hits a metal part it shouldn't do anything, it should only break if the glass in particular was hit.
2. If the lamp is triggered by a switch, does flipping it no longer turn the light on once it's broken?
3. Can you use a broken skin rather than model? With some lamps it would be easier to only change the skin and replace the glass, of course both should be supported based on what works best for each lamp.

The weapon hack is something that IIRC forces a model to render in front of everything else. It's limited by the fact that it can only apply to .md5mesh models, currently, such as the viewmodel of the player's arm and any weapons attached to the arm.

-

I couldn't help thinking of another realism-related suggestion. I don't know if it was discussed before, but it seemed like an interesting idea. If this were to be changed on existing lights by default, it would have minor gameplay implications, but the sort that make missions easier in a fair way.

So... electric lights: like the real ones of the era, they're implied to use incandescent light bulbs, the kind that in reality will explode and shatter upon the smallest impact, and which like real lamps are encased in glass or paper. In any realistic scenario, shooting a broadhead arrow at a lamp or even throwing a rock at it would cause it to go through the glass and break the light bulb inside. Is it wrong to imagine TDM emulating this behavior as a gameplay mechanic? Just as you can shoot water arrows at flame-based lights to put them out, you'd shoot broadhead arrows at electric lights to disable them. You must however hit the glass precisely: there's no room for error and it must be a perfect shot. As a way to compensate for the benefit, AI can treat this as suspicious and become alert if seeing or hearing a lamp break, or on finding a broken lamp at any time, if that's deemed to provide better balance.

A technical look at implementing this: just as broadhead arrows can go and stick through small soft objects such as books, they should do the same if you hit the glass material on a lamp, while of course still bouncing off if you hit metal.

- Lamp glass would need a special material flag that sends a signal to the entity upon collisions but allows arrows to go through, unlike glass in other parts of the world, which is meant to act as solid; changing that everywhere would break a lot of things.
- When pierced by an arrow, the lamp should immediately turn itself off while playing a broken glass sound and spawning a few glass particles.
- The glass material should be hidden if the model is a transparent surface, or replaced with a broken glass texture in case the glass is painted on a lamp model without an interior. Obviously this would be done by defining a broken skin for the entity to switch to.

This does imply a few complexities, which should be manageable:

- Existing lamps supporting this behavior will need new skins and in a few cases new textures. The def must include a new skin variable similar to the lit / unlit skins, in this case a broken skin.
- Any electric light may be connected to a light switch, and we don't want toggling the switch to bring the light bulb back to life. As such, a flag to permanently disable triggering the light from that point on would be required.
- For special purposes such as scripted events to reset the world, we should allow an event to unbreak lamps, setting their state back to being lit / unlit while re-enabling trigger toggling.

What do you think about this idea and who else wants it? Would it be worth the trouble to try and implement? If so, should it only be done for new lights or as a separate entity definition of existing ones, or would the change be welcome retroactively for existing missions without players and FM authors alike feeling it makes them too easy?

-

I manually integrated and tested your PR. There is good news and bad news. The good news is that when running with the "GDK_BACKEND=x11" flag, using the clipper tool no longer breaks the window and forces me to restart DR afterward, and the model / entity viewer no longer experiences the issue either. The bad news is that the flag is still required: every viewport retains the problem if running Radiant in Wayland mode, so we still have a big problem that Linux users will increasingly run into as distros adopt it (KDE Plasma 6 now uses Wayland by default). So please integrate the PR if it doesn't break anything, it makes life much easier in the meantime! But this should remain open until either DR or wxWidgets or Wayland solves the core issue that exists when running in native mode.

-

I've figured it out! There are 2 parts:

1. The FreezePointer class has a mismatch: it blanks the cursor on the top-level window but does the pointer locking on whatever particular sub-window (i.e. the 2D view widget) calls for it. The cursor has to be explicitly blanked on the same widget that locks the pointer.
2. The clipper tool updates the cursor whenever the mouse moves, even if it's in the middle of dragging and should be hidden. It was easy enough to guard against changing the cursor while the mouse capture is active.

This also fixes the same issue that was happening in the 3D view of the model viewer (but not on the main 3D view). I'll submit a PR shortly; @greebo or others will need to test this change on Windows to make sure it doesn't do any harm there.

EDIT: The PR is here: https://github.com/codereader/DarkRadiant/pull/37

-

I noticed all of these only because I played TDM by doing the opposite of stealth, to practice swordfights. None of these bugs appeared as long as I played the game stealthily. I should also note that I used Snatcher's Core Essentials on and off. @snatcher, your mod does not alter the AI anyway, correct? The bugs happened both ways.

1) AI freezes in combat - https://bugs.thedarkmod.com/view.php?id=6371 It happens a lot with auto-parry disabled. It never happened with auto-parry enabled. It never happened with some guards, while it happened many times with specific guards. They unfreeze when the player dies, or when the AI is forced to re-equip the melee weapon when the player returns from a spot that usually causes them to throw rocks. I also experienced them only slowly walking towards me at the pace of the "hunched sneakily searching" mode, attacking once finally in proximity.

2) AI may collectively lose the ability to hear any of the player's noise caused by footsteps / landing. I'm still unsure what causes this, but Lockner Manor has a good layout to get to the result. Just follow the right-most path and repeat the process of winning sword-fights with the City Watch, and then jump around the next guard to see if they can hear you. If they cannot hear you, likely nobody can. If they still can, just fight them too and you will likely have deaf AI by the time you have dealt with the 3 Watch Guards outside. In my experience, when they heard the death scream well enough to run to its location or start searching, the deafening did not work.

3) Helmeted AI does not react to (nor hear) the player's overhead sword strikes made directly on the helmet, stealthily from behind. Not sure if it's the same issue as the deaf AI bug, but it happened at least once while that was active. (Lockner Manor)

4) (Moor?) AI may lose the ability to hear AND see the player after nearby swordfights and after two slashes to bloody their face. (I may have also Moss Arrowed them in the face.) Any sword strikes I make simply go through their model with no audio of impact. Collision still gets the player detected. (A Good Neighbor)

5) Crash to desktop when an archer puts the bow away to take out a melee weapon (while I may have collided with them on a staircase). (Lockner Manor)

6) Crash to desktop when I shot a shortsword-wielding charging Noble. The sound of a deflected arrow played (the same one you hear when shooting a stone wall), when I expected a flesh-hitting sound. (A Good Neighbor)

7?) Sometimes AI says "Ow that hurt" merely because I block their strikes. Is this an intended feature? Do they take actual damage too?

Anyway, I didn't find mentions of many of these issues. Reporting aside, if you want me to experiment with something or find ways to replicate, I don't mind updating this thread, as I plan to mess around with sword-fights anyway. I just posted this initial version in case anyone wants to chime in with similar experiences, and I wanted to know if some of these bugs could be mission- or mod-specific. Maybe caused by frequent or unfortunately timed quicksaving / loading?
