
Oh, there probably were a few really bad OEM vendors who pre-installed 32-bit Win7, Win8, or Win10 on 64-bit systems and sold that to folks who didn't know any better. Still, since Microsoft has moved to a "Microsoft Account" model for OS registration, impacted users can simply back up their data, make an install thumb drive, and re-install.

-

Just use 32-bit binaries plus the Large Address Aware flag! See "/LARGEADDRESSAWARE (Handle Large Addresses)" on Microsoft Learn and "Large Address Aware" on the TechPowerUp Forums.
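For anyone who wants to check whether a given 32-bit binary already carries the flag, here is a minimal sketch in Python. It only reads the `IMAGE_FILE_LARGE_ADDRESS_AWARE` bit (0x0020) from the COFF header's Characteristics field. The helper names `is_large_address_aware` and `make_fake_pe` are made up for illustration, and the synthetic header is just for demonstration, not a runnable executable.

```python
import struct

IMAGE_FILE_LARGE_ADDRESS_AWARE = 0x0020  # bit in the COFF "Characteristics" field


def is_large_address_aware(data: bytes) -> bool:
    """Return True if a PE image (given as raw bytes) has the LAA bit set."""
    if data[:2] != b"MZ":
        raise ValueError("not a PE file: missing MZ header")
    # e_lfanew, stored at offset 0x3C, points at the "PE\0\0" signature.
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
    if data[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("not a PE file: missing PE signature")
    # Characteristics is the last 2 bytes of the 20-byte COFF header
    # that follows the 4-byte signature.
    (characteristics,) = struct.unpack_from("<H", data, pe_offset + 4 + 18)
    return bool(characteristics & IMAGE_FILE_LARGE_ADDRESS_AWARE)


def make_fake_pe(characteristics: int) -> bytes:
    """Build a tiny synthetic header for demonstration only (hypothetical helper)."""
    dos = bytearray(0x40)
    dos[:2] = b"MZ"
    struct.pack_into("<I", dos, 0x3C, 0x40)  # PE signature right after the DOS stub
    coff = struct.pack("<HHIIIHH", 0x014C, 0, 0, 0, 0, 0, characteristics)
    return bytes(dos) + b"PE\0\0" + coff
```

To use it on a real file, read the bytes first, e.g. `is_large_address_aware(open(path, "rb").read())`, where `path` is whatever executable you want to inspect.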

-

I hope that is not the new TDM version. https://forums.thedarkmod.com/index.php?/topic/20784-render-bug-large-black-box-occluding-screen/

-

For free ambience tracks it's as Freky said: you look around on the internet for tracks with the appropriate license to be included in your FM. Fortunately, you likely don't even need to bother doing this as a beginner as there's an entire "Music & SFX" section of the forums full of good ambient tracks for you to use if the stock tracks do not meet your fancy. You might also be interested in Orbweaver's "Dark Ambients", which come with a sndshd file already written for you.

-

I'm working in DarkRadiant on a new map, and I'm seeing something odd I've never experienced before. There's a fire in a stove model on the second floor of a building... walls, floor, ceiling, etc. are all worldspawn brushes with appropriate textures, and neither player nor AI have any trouble traversing the entire place, so I think it's assembled as it should be. However, if I douse the stove on the second floor with a water arrow, the water splash somehow drips through the floor brush and douses a torch in the same rough area down on the first floor. I tested it at various positions in the building, and it seems to happen everywhere, not just on that one light. Water arrows that impact on the second floor fall through the floor, and if a light is present in the fall area it's extinguished. Is this expected behaviour? I would have thought a solid brush would stop water from traveling any further once released.

-

Beta Testers Wanted. The Lieutenant 3: Foreign Affairs

Frost_Salamander replied to Frost_Salamander's topic in Fan Missions

For the FM? For beta 1 it's here: https://drive.proton.me/urls/H1QBB04GA0#oBZTb1CmVFQb I've already done around 100 fixes though, so you might want to wait for beta 2 which should be ready in a couple of days hopefully. All links are in the first post of the beta thread here: https://forums.thedarkmod.com/index.php?/topic/22439-the-lieutenant-3-foreign-affairs-beta-testing/

I think this is a slippery slope fallacy. Just because the ability to customize exists does not mean most mappers will use it. On the contrary, if one considers the customizations that are already available, we see that the overwhelming majority of mappers stick to the defaults. The exceptions are interesting also. Kingsal's the only mapper that readily comes to mind who habitually deviates from presets seemingly just for the sake of being different. However, everything they make is clearly in service of cohesive visions. Hazard Pay, no matter how you feel about it, unarguably loses a great deal of its survival horror character if you take away the napalm arrows or the punishing save system. The Voltas don't need to use Thief style elemental crystals in place of TDM's arrow model, but the fact that they are there makes a definite statement about the author's awareness of their inspiration for their work in TDM from the original games, which in turn draws attention to other, subtler creative choices. I think it's also telling that some of Kingsal's modifications have been adopted by other authors. As OrbWeaver said, "If the defaults are widely disliked, they should be changed." However, how can the community come to a consensus unless there are maps to showcase the advantages of new innovations? Requesting, or worse requiring, players to go in and manually change settings in order to experience a new mechanic is never going to gain any traction. Certainly it is not worth the effort of creating an entire map built around a new paradigm.

-

"May his iron mold the current as we were molded. May his instrument expel all obstruction as we are his instruments." - Collected Sermons of Master Plumber Roto Rooter

Few would dare cross the Bridgeport Plumber's Guild. Fewer still could glimpse their secrets and live.

CC0 POLYHAVEN PLUMBER PACK BETA

Includes:
- Fully modular pipe kit with optimized shadow mesh, two skin variations and near perfect grid snapping at grid level 4
- Moveable plunger prop which is fully compatible with the AI weapon system
- Worry free CC0 license

All credit to the great polyhaven.com for the original mid poly meshes - consider supporting them on patreon. I just decimated and then rebaked the assets as well as converted the maps from pbr and made the additional model and material variations.

-

The training mission's job is not to cover the diversity of FMs across the entire platform. There is lots of stuff in Volta universe missions which is not covered: explosive barrels, ammo crystals, loot you dislodge by shooting it with an arrow, completely different undead AI with a completely different damage model, etc. Just like in Northdale missions: neutral/hostile areas, in-game shops, simplified lockpicks, etc. Why would you need to be told any of this is happening by anything other than the game itself? That's how games communicate: you play them.

-

Should we consider using detail textures?

MirceaKitsune replied to MirceaKitsune's topic in The Dark Mod

Oh, some implementations might work a little differently from what I remember the term megatexture referring to. From what I used to know, it meant turning the entire level into a single model or set that uses a single enormous texture. While the concept may have its upsides, there are two major issues that negate any benefit in my view. The first is system resources: you don't benefit from any reuse as every pixel is unique, and the only way to do it at scale is with a gigantic image, thus a huge performance drop in pretty much every department. The second issue is that level design becomes far harder and more specialized... while here in TDM we only need to draw a bunch of brushes and place some modules to make a level, an engine based on megatextures would require level designers to sculpt and paint the entire world in software like Blender, which is far more difficult, and we likely wouldn't have even half of the FM creators we do today. Even for those that know how to do it, imagine the task of manually painting every brick on every home and so on.

Should we consider using detail textures?

MirceaKitsune replied to MirceaKitsune's topic in The Dark Mod

Megatextures were a horrible idea for obvious reasons, not sure why id chose to learn that the hard way. The concept from what I remember is the whole map uses a single gigantic texture... instead of how we independently pick a couple of 1024 px brick materials for a few brushes and surfaces, the whole map acts as one model with one material and a single texture which probably needs to be 1 million x 1 million pixels even for a small level. This is ridiculous from a perspective of system resources, with hundreds of GB of storage, huge (V)RAM requirements and hours of loading time, as well as raising the skills required for level editing since you now need mappers to also be texture artists and sculpt / paint their levels instead of just placing stuff. The only thinkable benefit is there's no repetition since every pixel on every part of the world is unique, but who notices any similarity with independent texturing if it's done right anyway? Detail textures have yet another advantage there: Since you scale the pattern independently on top of the original texture, you can make every surface appear as if it has unique pixels like megatextures. Hence why I'd advise having the details be very high-res, 4k or 8k, even 16k if we can take it: Yes that's enormous, but remember we'd only have a few patterns, probably no more than 15 in total, and can store them as grayscale then use a single image to modify all of albedo / specular / normal (heightmap to normalmap). Map the detail in world space rather than the brush or model UV map, and the resulting pattern on every surface in the world will always be unique since the original and detail textures will be out of sync.
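To make the "map the detail in world space" idea a bit more concrete, here is a rough Python sketch. It is not how TDM or idTech 4 does anything; it simply assumes a dominant-axis planar projection, and the function name `detail_uv` and the default scale of 1/64 texture repeats per world unit are hypothetical values picked for illustration.

```python
def detail_uv(world_pos, normal, scale=1.0 / 64.0):
    """Return tiling (u, v) detail coordinates derived from world position.

    The surface is projected along whichever world axis its normal is most
    aligned with, so the pattern ignores the brush or model UV map entirely.
    """
    x, y, z = world_pos
    ax, ay, az = (abs(c) for c in normal)
    if az >= ax and az >= ay:   # floor or ceiling: project along Z
        u, v = x, y
    elif ax >= ay:              # wall facing +/-X: project along X
        u, v = y, z
    else:                       # wall facing +/-Y: project along Y
        u, v = x, z
    # Keep only the fractional part so the detail pattern repeats.
    return ((u * scale) % 1.0, (v * scale) % 1.0)
```

Because the coordinates depend only on where the surface sits in the world, two brushes sharing the same base material end up with different detail offsets, which is the "out of sync" effect described above.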

Indeed and try to wait until you are sure the model is finished before you go through the trouble of aligning textures. I couldn't tell you how much time I spent realigning textures after adjusting walls, windows, etc., or changing my mind about the materials (especially when bevels are involved).

-

If it's supposed to be an octagon, I don't think the corners need to be bevelled. Certainly it looks much better to bevel square corners, but the angles of an octagon don't look bad imo. If you want a smooth cylinder though, the default cylinder patch has 16 sides, which looks smooth enough in game. That would be easier than bevelling 8 corners. Speaking of smoothing: I was pleasantly surprised to find that if you make a model in Blender with smooth-shading ticked, then export an ASE file, it will retain the smooth shading when imported to DarkRadiant.

-

Interesting idea. Not sure about my upcoming time availability to help. A couple of concerns here:
- I assume the popup words use the "Informative Texts" slot, e.g., where you might see "Acquired 80 in Jewels", so it likely wouldn't interfere with that or with already-higher subtitles.
- There are indications that #str is becoming unviable in FMs; see my just-posted: https://forums.thedarkmod.com/index.php?/topic/22434-western-language-support-in-2024/

-

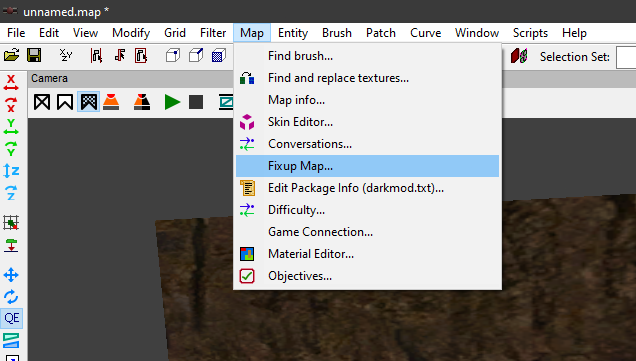

In post https://forums.thedarkmod.com/index.php?/profile/254-orbweaver/&status=3994&type=status @nbohr1more found out what the Fixup Map functionality is for. But what does it actually do? Does it search for def references (to core?) that don't exist anymore and then link them to defs with the same name elsewhere? Also, I would recommend changing the name to something that makes its purpose clearer. "Fixup map" could mean anything. And it should be documented in the wiki.

-

yeah it's a jungle out there, but a 12 gb card on a 128 bit bus would only be viable with dlss, and then only just. but there will probably be plenty of options as you mention, though i'd still go for something that would at least be able to drag the amount of vram without workarounds. strangely the old amd R9 390 had a 512 bit bus and could probably have accepted 32 gb vram, but the card is too slow to do 4k in modern titles. even so the 8 gb model actually ran quite nicely in games such as the first horizon in 4K, but would probably choke and die on forbidden west. minor wtf moment is crysis remastered: it runs it at 4k in "can it run crysis" mode with a gazillion fps, looks quite purty too, but i suspect this is a bug.

-

Should we consider using detail textures?

The Black Arrow replied to MirceaKitsune's topic in The Dark Mod

Alright, so, I've been a Texture Artist myself for more than 20 years, which means I know what I'm talking about, but my word isn't law at all, remember that. I've worked (mostly on mods; I am a professional but I much prefer being a freelancer) with old DX8 games up to DX12. When it comes to Detail Textures, for my workflow, I never ever use them except on the rare occasions when they're actually good (and I emphasize "rare"). This is one reason I thought mentioning that I worked with DX8 was logical. One of the few times they're good is when you make a game that can't have textures higher than what would be average today, such as World Textures at 1024x1024. Making detail textures for ANY (World, Model) textures that are lower than 128x128 is generally appealing. Another is when the game has no other, much better options for texturing, such as Normal Maps and Parallax Mapping. Personally, I think having Detail Textures for The Dark Mod is arguably pointless. I know TDM hasn't had a model and texture update since 2010 or so, but most textures do seem to at least be 1024x1024; if there's any world texture that's lower than 256x256, I might understand the need for Detail Textures. Now, if this was a game meant to be made in 2024 with 2020+ standards, I would say that we should not care about the "strain" high resolution textures add; however, I do have a better proposition: Mipmaps. There are many games, mostly older rather than newer ones, that use mipmaps not just for their general purpose but also to act as a "downscaler". With that in mind, you boys can add a "Texture Resolution" option that goes from Low to High, or even Lowest to Highest. As an example, we can add a 2048x2048 (or even 4096x4096) world texture that, if set to Lowest, would use the smallest Mipmap the texture was made with, which depends on how the artist did it; it could be a multiple of 1x1 or 4x4.
One problem with this is that, while it will help in the game for people who have less VRAM than is usual these days, it won't help with the size. 4096x4096 is 4096x4096, that's about 32mb compressed with DXT1 (which is not something TDM can use, DXT is for DirectX, sadly I do not know how OpenGL compresses its textures). I would much prefer the option to have better, baked Normal Maps as well as Parallax Mapping for the World Textures. I'm still okay with Detail Textures; I doubt this will add anything negative to the game or engine, and I'm very sure the code will also be simple enough that it will probably only add 0.001ms to the loading times, or even none at all. But I would also like it as an option, just like how Half-Life has it, so I'm glad you mentioned that. But yet again, I much prefer better Normal Maps and Parallax Mapping to any Detail Textures. On another note... Wasn't Doom 3, also, one of the first games that started using Baked Normal Maps?
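The mipmap-as-downscaler idea and the size concern can be sanity-checked with a little arithmetic. The Python sketch below assumes plain DXT1 (8 bytes per 4x4 pixel block) and a full mip chain down to 1x1; the function names and the `skip_levels` parameter are hypothetical stand-ins for a "Texture Resolution" setting, not anything that exists in TDM. Under that assumption the top 4096x4096 level alone works out to 8 MiB, which is lower than the figure quoted in the post, so that figure may assume a different format.

```python
def mip_chain(width, height):
    """List every mip level size from the full image down to 1x1."""
    sizes = [(width, height)]
    while width > 1 or height > 1:
        width, height = max(1, width // 2), max(1, height // 2)
        sizes.append((width, height))
    return sizes


def dxt1_bytes(width, height):
    """Size of one DXT1 level: each 4x4 block takes 8 bytes."""
    blocks_w = max(1, (width + 3) // 4)
    blocks_h = max(1, (height + 3) // 4)
    return blocks_w * blocks_h * 8


def texture_bytes(width, height, skip_levels=0):
    """Memory for the mip chain when the largest `skip_levels` levels are dropped."""
    levels = mip_chain(width, height)[skip_levels:]
    return sum(dxt1_bytes(w, h) for w, h in levels)


if __name__ == "__main__":
    for skip in range(4):
        mib = texture_bytes(4096, 4096, skip) / (1024 * 1024)
        print(f"skip {skip} level(s): {mib:.2f} MiB")
```

Skipping the top mip level cuts the loaded size to roughly a quarter each time, which is where the VRAM saving comes from; the file on disk still contains every level, which matches the point that this does not help with download size.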

No, the 192-bit RTX 3060 12 GB came first. The cut down 8 GB model came over a year and a half later, and probably in small numbers because nobody talks about it much other than "don't get it, it's 20-30% slower". 3060 Ti had 8 GB from the start, and always has, although it looks like they made a GDDR6X version. They would have to change the bus width to accommodate 12 GB. There were rumors of products like 3070 16 GB, 3080 20 GB and so on, but they never materialized outside of engineering samples. If you think things are confusing now, just wait until 3 GB GDDR7 chips materialize within a couple of years. We could see 12 GB cards on a 128-bit bus, 9 GB on 96-bit, and so on.
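The bus width and capacity pairings mentioned here follow from simple arithmetic. The sketch below assumes each memory chip occupies one 32-bit slice of the bus and that there is exactly one chip per slice (no clamshell configurations); `vram_gb` is a hypothetical helper name.

```python
def vram_gb(bus_width_bits, gb_per_chip, chip_bus_bits=32):
    """Total VRAM when every 32-bit slice of the bus carries one memory chip."""
    if bus_width_bits % chip_bus_bits != 0:
        raise ValueError("bus width must be a multiple of the per-chip width")
    chips = bus_width_bits // chip_bus_bits
    return chips * gb_per_chip


if __name__ == "__main__":
    print(vram_gb(192, 2))  # 192-bit bus with 2 GB chips
    print(vram_gb(128, 3))  # 128-bit bus with 3 GB chips
    print(vram_gb(96, 3))   # 96-bit bus with 3 GB chips
```

This is why 3 GB chips would open up capacities such as 12 GB on 128-bit or 9 GB on 96-bit that 2 GB chips cannot reach without changing the bus width.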

-

3060 has 192-bit bus (cut to 128-bit for the maligned 8 GB model), and the gimped cards (like 7600 XT 16 GB 128-bit) can definitely use the extra VRAM in some scenarios. https://en.wikipedia.org/wiki/GeForce_30_series#Desktop

-

aye the rtx 3060 was another weird one, it only has a 128 bit bus which is too low to effectively handle 12 gb, so the extra vram did not really help in higher resolutions. sadly they decided to continue with the same eh "mistake" with the rtx 4060 16 gb model. i'd call that deception to make users pay more for a card which is not even rated for 4K... sadly. the 16 gb 3070 model was scrapped by nvidia because it would be a contender for the much higher priced 3080 non ti i guess, as it has a 256 bit bus and hence would be a capable 4k card. the 3060 ti 8 gb was a much better card sadly. https://www.techradar.com/reviews/evga-geforce-rtx-3060-black-xc

-

There's been talk over the years on how we could improve texture quality, often to no avail as it requires new high-resolution replacements that need to be created and will look different and add a strain on system resources. The sharpness post-process filter was supposed to improve that, but even with it you see ugly blurry pixels on any nearby surface. Yet there is a way, a highly efficient technique used by some engines in the 90's, notably the first Unreal engine, and as it did wonders then it can still do so today: Detail textures. Base concept: You have a grayscale pattern for various surfaces, such as metal scratches or the waves of polished wood or the stucco of a rough rock; usually only a few highly generic patterns are needed. Each pattern is overlaid on top of corresponding textures several times, every iteration at a smaller... as with model LOD, smaller iterations fade with camera distance so as to not waste resources; the closer you get the more detail you see. This does wonders in making any texture look much sharper without changing the resolution of the original image, and because the final mixture is unique you don't perceive any repetitiveness! Here's a good resource from UE5 which seems to support them to this day: https://dev.epicgames.com/documentation/en-us/unreal-engine/adding-detail-textures-to-unreal-engine-materials Who else agrees this is something we can use and would greatly improve graphical fidelity? No one's ever going to replace every texture with a higher resolution version in vanilla TDM; without this technique we'll always be stuck with early 2000's graphics, with it we have a magic way of making it look close to AAA games today! Imagine being able to see all those fine scratches on a guard's helmet as light shines on it, the thousands of little holes on a brick, the waves of wood as you lean into a table... all without losing much performance or considerably increasing the size of game data.
It's like the best deal one could hope for! The idTech 4 material system should already have what we need, namely the ability to mix any textures at independent sizes. Unlike the old days when only a diffuse texture was used, the pattern would now need to be applied to all of the albedo / specular / normal maps; to my knowledge there are shader keywords to combine each. Needless to say it would require editing every single material to specify its detail texture with a base scale and rotation: It would be painful but doable with a text injection script... I made a bash script to add cubemap reflections once, if it were worth it I could try adapting it to inject the base notation for details. A few changes will be needed of course: Details must be controlled by a main menu setting activating this system and specifying the level of detail, since material properties can't be controlled by cvars. Ultimately we may need to overlay them in realtime, rather than permanently modifying every material at load time, which may have a bigger performance impact. We want each iteration to fade with distance and only appear within a certain length from the camera; the effect will cause per-pixel lighting to have to render more detail per light - surface interaction, so we'll need to control the pixel density.
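As a rough illustration of the base concept (a grayscale pattern layered at several scales, with finer layers fading out at distance), here is a small Python sketch working on single brightness values. It assumes the common convention that a detail value of 0.5 is neutral and that the blend is a simple modulation; the function names, the 512-unit fade distance and the halving of the fade range per layer are hypothetical choices, not anything taken from idTech 4 or Unreal.

```python
def fade_weight(distance, fade_start, fade_end):
    """1.0 up close, 0.0 beyond fade_end, linear in between."""
    if distance <= fade_start:
        return 1.0
    if distance >= fade_end:
        return 0.0
    return (fade_end - distance) / (fade_end - fade_start)


def apply_detail(base, detail, weight):
    """Modulate a base value by a grayscale detail sample (0.5 leaves it unchanged)."""
    value = base * (1.0 + weight * (2.0 * detail - 1.0))
    return min(1.0, max(0.0, value))


def shade(base, detail_samples, distance, first_fade_end=512.0):
    """Apply detail layers, coarsest first; each finer layer fades at half the distance."""
    value = base
    fade_end = first_fade_end
    for detail in detail_samples:
        weight = fade_weight(distance, fade_end * 0.5, fade_end)
        value = apply_detail(value, detail, weight)
        fade_end *= 0.5
    return value
```

For example, `shade(0.4, [0.75, 0.75], 1000.0)` leaves the base value untouched because every layer has faded out, while the same call at a distance of 50.0 brightens it with both layers. A real implementation would do this per pixel in a shader and would also have to perturb specular and normal maps, which this sketch does not attempt.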

-

Hm, after testing: This does actually work fine. Both in DR and in TDM. I guess there's no point in specifying the model path, because then it only works on one specific func_static.

-

Skins don't require a model path, that's just a convenience feature to allow the skins to be associated with the model(s) in the editor. However I have no idea if an unassociated skin can be used on a func_static. I suppose there's no reason why it couldn't work, but it's not something I've ever tested and I wouldn't be surprised if it fails to do anything (either in the editor or the game).