Search the Community


-

Thanks so much. I'll review the spreadsheet starting tomorrow and after any tweaks, export to .subs as inline. Then convert any to srt that need it, and do QA. The usual process, tho possibly a bit slow due to travel/holidays. I've already built a testSubtitlesDrunk to house the subtitles.

-

Looking at the code, the originals were "pm_mantle_pull 750" and "pm_mantle_pullFast 450". The new "pm_mantle_pull" value is "400". A "pm_mantle_pullFast" value of "450" would be slower than regular pull, not faster. With both being set to "400", they are at least similar. Other than that, it's subjective and the feedback from playtesters was positive. Also, referenced internally here: https://forums.thedarkmod.com/index.php?/topic/22256-movementcontrols-settings-in-main-menu/&do=findComment&comment=489158

-

Hi, this mission is crashing on my system and I cannot go on. Playing "Hidden Hands - The lost citadel" Version 6 on Fedora Linux Version 38. I paste the whole output of the console below. Perhaps someone can find the cause for the crash, that would be very nice [stefan@fedora darkmod]$ ./thedarkmod.x64 TDM 2.11/64 #10264 (1435:10264) linux-x86_64 Jan 30 2023 02:02:43 /proc/cpuinfo CPU frequency: 899.998 MHz 900 MHz Intel CPU with SSE & SSE2 & SSE3 & SSSE3 & SSE41 & AVX found interface lo - loopback Found Intel CPU, features: SSE SSE2 SSE3 SSSE3 SSE41 AVX TDM using AVX for SIMD processing. Found 0 new missions and 0 packages. ------ Initializing File System ------ Current search path: [M] /home/stefan/darkmod/fms/hhtlc [M] /home/stefan/darkmod/fms/hhtlc/hhtlc_1b4187d3b30d65cf.pk4 (665 files - 0xf04d8d7e) [C] /home/stefan/darkmod/ [C] /home/stefan/darkmod/tdm_textures_wood01.pk4 (382 files - 0x54c704d0) [C] /home/stefan/darkmod/tdm_textures_window01.pk4 (399 files - 0x50a48869) [C] /home/stefan/darkmod/tdm_textures_stone_sculpted01.pk4 (464 files - 0x3bd63c7c) [C] /home/stefan/darkmod/tdm_textures_stone_natural01.pk4 (141 files - 0x4d0836ff) [C] /home/stefan/darkmod/tdm_textures_stone_flat01.pk4 (302 files - 0x671a22d2) [C] /home/stefan/darkmod/tdm_textures_stone_cobblestones01.pk4 (271 files - 0xc46ab14f) [C] /home/stefan/darkmod/tdm_textures_stone_brick01.pk4 (527 files - 0x1d087cf8) [C] /home/stefan/darkmod/tdm_textures_sfx01.pk4 (69 files - 0x2c673886) [C] /home/stefan/darkmod/tdm_textures_roof01.pk4 (69 files - 0x24547b7) [C] /home/stefan/darkmod/tdm_textures_plaster01.pk4 (142 files - 0x9747529e) [C] /home/stefan/darkmod/tdm_textures_paint_paper01.pk4 (67 files - 0xa4a95a09) [C] /home/stefan/darkmod/tdm_textures_other01.pk4 (127 files - 0x36932451) [C] /home/stefan/darkmod/tdm_textures_nature01.pk4 (286 files - 0x19240606) [C] /home/stefan/darkmod/tdm_textures_metal01.pk4 (509 files - 0x441d098f) [C] /home/stefan/darkmod/tdm_textures_glass01.pk4 (51 files - 
0x3f3721e) [C] /home/stefan/darkmod/tdm_textures_fabric01.pk4 (43 files - 0x649daf73) [C] /home/stefan/darkmod/tdm_textures_door01.pk4 (177 files - 0xb0130166) [C] /home/stefan/darkmod/tdm_textures_decals01.pk4 (474 files - 0xe2ff12c6) [C] /home/stefan/darkmod/tdm_textures_carpet01.pk4 (130 files - 0x79bc3d7c) [C] /home/stefan/darkmod/tdm_textures_base01.pk4 (435 files - 0xc07a324) [C] /home/stefan/darkmod/tdm_standalone.pk4 (4 files - 0xb3f36d20) [C] /home/stefan/darkmod/tdm_sound_vocals_decls01.pk4 (32 files - 0x53cda0aa) [C] /home/stefan/darkmod/tdm_sound_vocals07.pk4 (1111 files - 0xa13ec4c2) [C] /home/stefan/darkmod/tdm_sound_vocals06.pk4 (696 files - 0x44c85e78) [C] /home/stefan/darkmod/tdm_sound_vocals05.pk4 (119 files - 0x6cf23214) [C] /home/stefan/darkmod/tdm_sound_vocals04.pk4 (2869 files - 0xd7ec1256) [C] /home/stefan/darkmod/tdm_sound_vocals03.pk4 (743 files - 0xb3f2e0f1) [C] /home/stefan/darkmod/tdm_sound_vocals02.pk4 (1299 files - 0x5092940e) [C] /home/stefan/darkmod/tdm_sound_vocals01.pk4 (82 files - 0xf4d326b2) [C] /home/stefan/darkmod/tdm_sound_sfx02.pk4 (605 files - 0x31673482) [C] /home/stefan/darkmod/tdm_sound_sfx01.pk4 (987 files - 0x97451b7a) [C] /home/stefan/darkmod/tdm_sound_ambient_decls01.pk4 (8 files - 0x9404877c) [C] /home/stefan/darkmod/tdm_sound_ambient03.pk4 (24 files - 0xd28ca9ec) [C] /home/stefan/darkmod/tdm_sound_ambient02.pk4 (163 files - 0x84efad22) [C] /home/stefan/darkmod/tdm_sound_ambient01.pk4 (220 files - 0xee228c81) [C] /home/stefan/darkmod/tdm_prefabs01.pk4 (1017 files - 0x506baa0b) [C] /home/stefan/darkmod/tdm_player01.pk4 (127 files - 0xd983fc45) [C] /home/stefan/darkmod/tdm_models_decls01.pk4 (101 files - 0x146c787) [C] /home/stefan/darkmod/tdm_models02.pk4 (2241 files - 0x42cdbf62) [C] /home/stefan/darkmod/tdm_models01.pk4 (3326 files - 0x829270f2) [C] /home/stefan/darkmod/tdm_gui_credits01.pk4 (49 files - 0xbff51863) [C] /home/stefan/darkmod/tdm_gui01.pk4 (758 files - 0xcbf4fd2d) [C] 
/home/stefan/darkmod/tdm_fonts01.pk4 (696 files - 0x7c5027bf) [C] /home/stefan/darkmod/tdm_env01.pk4 (176 files - 0x8bd4045b) [C] /home/stefan/darkmod/tdm_defs01.pk4 (194 files - 0xe5f440dc) [C] /home/stefan/darkmod/tdm_base01.pk4 (223 files - 0x9704b43c) [C] /home/stefan/darkmod/tdm_ai_steambots01.pk4 (31 files - 0x26416485) [C] /home/stefan/darkmod/tdm_ai_monsters_spiders01.pk4 (80 files - 0x15c3ef89) [C] /home/stefan/darkmod/tdm_ai_humanoid_undead01.pk4 (55 files - 0x25e463ad) [C] /home/stefan/darkmod/tdm_ai_humanoid_townsfolk01.pk4 (104 files - 0xa6f7c573) [C] /home/stefan/darkmod/tdm_ai_humanoid_pagans01.pk4 (10 files - 0x566fb35a) [C] /home/stefan/darkmod/tdm_ai_humanoid_nobles01.pk4 (51 files - 0x5ca54cab) [C] /home/stefan/darkmod/tdm_ai_humanoid_mages01.pk4 (8 files - 0x5e7a666b) [C] /home/stefan/darkmod/tdm_ai_humanoid_heads01.pk4 (100 files - 0x45ec787e) [C] /home/stefan/darkmod/tdm_ai_humanoid_guards01.pk4 (379 files - 0x9801be8d) [C] /home/stefan/darkmod/tdm_ai_humanoid_females01.pk4 (172 files - 0xc7de4598) [C] /home/stefan/darkmod/tdm_ai_humanoid_builders01.pk4 (91 files - 0x6dea9b57) [C] /home/stefan/darkmod/tdm_ai_humanoid_beasts02.pk4 (229 files - 0x886c9a98) [C] /home/stefan/darkmod/tdm_ai_humanoid_beasts01.pk4 (23 files - 0xba9da54c) [C] /home/stefan/darkmod/tdm_ai_base01.pk4 (9 files - 0x1de319e8) [C] /home/stefan/darkmod/tdm_ai_animals01.pk4 (82 files - 0x6c0fda50) File System Initialized. 
-------------------------------------- Couldn't open journal files /proc/cpuinfo CPU processors: 2 /proc/cpuinfo CPU logical cores: 4 ----- Initializing Decls ----- WARNING:file materials/puzzle_paintings.mtr, line 228: material 'textures/puzzle/flower' previously defined at materials/puzzle_paintings.mtr:14 WARNING:file sound/ambient.sndshd, line 79: sound 'firstfloor' previously defined at sound/ambient.sndshd:51 WARNING:file sound/soul.sndshd, line 129: sound 'builder_tim_1' previously defined at sound/soul.sndshd:102 WARNING:file sound/video.sndshd, line 12: sound 'main' previously defined at sound/ambient.sndshd:1 ------------------------------ I18N: SetLanguage: 'english'. I18N: Found no character remapping for english. I18N: 1321 strings read from strings/english.lang I18N: 'strings/fm/english.lang' not found. Couldn't exec editor.cfg - file does not exist. execing default.cfg Gamepad modifier button assigned to 6 execing Darkmod.cfg execing DarkmodKeybinds.cfg execing DarkmodPadbinds.cfg Gamepad modifier button assigned to 6 Couldn't exec autoexec.cfg - file does not exist. I18N: SetLanguage: 'german'. I18N: Found no character remapping for german. I18N: 1321 strings read from strings/german.lang I18N: 'strings/fm/german.lang' not found. I18NLocal: 'strings/fm/english.lang' not found, skipping it. 
----- Initializing OpenAL ----- Setup OpenAL device and context OpenAL: found device 'ALSA Default' [ACTIVE] OpenAL: found device 'HDA Intel PCH, CS4208 Analog (CARD=PCH,DEV=0)' OpenAL: found device 'HDA Intel PCH, HDMI 0 (CARD=PCH,DEV=3)' OpenAL: found device 'HDA Intel PCH, HDMI 1 (CARD=PCH,DEV=7)' OpenAL: found device 'HDA Intel PCH, HDMI 2 (CARD=PCH,DEV=8)' OpenAL: device 'ALSA Default' opened successfully OpenAL: HRTF is available [ALSOFT] (EE) Failed to set real-time priority for thread: Operation not permitted (1) OpenAL vendor: OpenAL Community OpenAL renderer: OpenAL Soft OpenAL version: 1.1 ALSOFT 1.21.1 OpenAL: found EFX extension OpenAL: HRTF is disabled (reason: 0 = ALC_HRTF_DISABLED_SOFT) OpenAL: found 256 hardware voices ----- Initializing OpenGL ----- Initializing OpenGL display ...initializing QGL ------- Input Initialization ------- ------------------------------------ OpenGL vendor: Intel OpenGL renderer: Mesa Intel(R) HD Graphics 615 (KBL GT2) OpenGL version: 4.6 (Core Profile) Mesa 23.1.9 core Checking required OpenGL features... v - using GL_VERSION_3_3 v - using GL_EXT_texture_compression_s3tc Checking optional OpenGL extensions... v - using GL_EXT_texture_filter_anisotropic maxTextureAnisotropy: 16.000000 v - using GL_ARB_stencil_texturing X - GL_EXT_depth_bounds_test not found v - using GL_ARB_buffer_storage v - using GL_ARB_texture_storage v - using GL_ARB_multi_draw_indirect v - using GL_ARB_vertex_attrib_binding X - GL_ARB_compatibility not found v - using GL_KHR_debug Max active texture units in fragment shader: 32 Max combined texture units: 192 Max anti-aliasing samples: 16 Max geometry output vertices: 256 Max geometry output components: 1024 Max vertex attribs: 16 ---------- R_ReloadGLSLPrograms_f ----------- Linking GLSL program cubeMap ... Linking GLSL program bumpyEnvironment ... Linking GLSL program depthAlpha ... Linking GLSL program fog ... Linking GLSL program oldStage ... Linking GLSL program blend ... 
Linking GLSL program stencilshadow ... Linking GLSL program shadowMapA ... Linking GLSL program ambientInteraction ... Linking GLSL program interactionStencil ... Linking GLSL program interactionShadowMaps ... Linking GLSL program interactionMultiLight ... Linking GLSL program frob ... Linking GLSL program soft_particle ... Linking GLSL program tonemap ... Linking GLSL program gaussian_blur ... Linking GLSL program testImageCube ... --------------------------------- Font fonts/english/stone in size 12 not found, using size 24 instead. --------- Initializing Game ---------- The Dark Mod 2.11/64, linux-x86_64, code revision 10264 Build date: Jan 30 2023 Initializing event system ...873 event definitions Initializing class hierarchy ...172 classes, 1732032 bytes for event callbacks Initializing scripts ---------- Compile stats ---------- Memory usage: Strings: 56, 9048 bytes Statements: 23155, 926200 bytes Functions: 1358, 177432 bytes Variables: 107720 bytes Mem used: 2149432 bytes Static data: 408 bytes Allocated: 1152120 bytes Thread size: 7928 bytes Maximum object size: 816 Largest object type name: speaker_zone_ambient ...6 aas types game initialized. -------------------------------------- Parsing material files Found 0 new missions and 0 packages. Found 42 mods in the FM folder. Parsed 46 mission declarations. No 'tdm_mapsequence.txt' file found for the current mod: hhtlc -------- Initializing Session -------- session initialized -------------------------------------- Font fonts/english/mason_glow in size 12 not found, using size 48 instead. Font fonts/english/mason_glow in size 24 not found, using size 48 instead. Font fonts/english/mason in size 12 not found, using size 48 instead. Font fonts/english/mason in size 24 not found, using size 48 instead. 
WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'QuitGameDialogAskEverytimeOption' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'ColorPrecision' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'OpenDoorsOnUnlock' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'InvPickupMessages' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'HideLightgem' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'BowAimer' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'FrobHelper' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'showTooltips' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'MeleeInvertAttack' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'MeleeInvertParry' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'SCGeneralBind5' has value count unequal to choices count --- Common Initialization Complete --- ------------- Warnings --------------- during The Dark Mod initialization... 
WARNING:file materials/puzzle_paintings.mtr, line 228: material 'textures/puzzle/flower' previously defined at materials/puzzle_paintings.mtr:14 WARNING:file sound/ambient.sndshd, line 79: sound 'firstfloor' previously defined at sound/ambient.sndshd:51 WARNING:file sound/soul.sndshd, line 129: sound 'builder_tim_1' previously defined at sound/soul.sndshd:102 WARNING:file sound/video.sndshd, line 12: sound 'main' previously defined at sound/ambient.sndshd:1 WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'BowAimer' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'ColorPrecision' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'FrobHelper' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'HideLightgem' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'InvPickupMessages' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'MeleeInvertAttack' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'MeleeInvertParry' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'OpenDoorsOnUnlock' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'QuitGameDialogAskEverytimeOption' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'SCGeneralBind5' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'showTooltips' has value count unequal to choices count 15 warnings WARNING: terminal type 'xterm-256color' is unknown. terminal support may not work correctly terminal support enabled ( use +set in_tty 0 to disabled ) pid: 4247 Async thread started Couldn't exec autocommands.cfg - file does not exist. 
Found 0 new missions and 0 packages. Found 42 mods in the FM folder. reloading guis/msg.gui. WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'QuitGameDialogAskEverytimeOption' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'ColorPrecision' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'OpenDoorsOnUnlock' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'InvPickupMessages' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'HideLightgem' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'BowAimer' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'FrobHelper' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'showTooltips' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'MeleeInvertAttack' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'MeleeInvertParry' has value count unequal to choices count WARNING:idChoiceWindow:: gui 'guis/mainmenu.gui' window 'SCGeneralBind5' has value count unequal to choices count reloading guis/mainmenu.gui. 
WARNING:unknown destination 'FlareBox::rect' of set command at /fms/hhtlc/hhtlc_1b4187d3b30d65cf.pk4/guis/map/hhtlc.gui:48 WARNING:unknown destination 'FlareBox::rect' of set command at /fms/hhtlc/hhtlc_1b4187d3b30d65cf.pk4/guis/map/hhtlc.gui:53 WARNING:unknown destination 'FlareBox::rect' of set command at /fms/hhtlc/hhtlc_1b4187d3b30d65cf.pk4/guis/map/hhtlc.gui:58 WARNING:unknown destination 'FlareBox::rect' of set command at /fms/hhtlc/hhtlc_1b4187d3b30d65cf.pk4/guis/map/hhtlc.gui:63 WARNING:unknown destination 'FlareBox::rect' of set command at /fms/hhtlc/hhtlc_1b4187d3b30d65cf.pk4/guis/map/hhtlc.gui:68 WARNING:unknown destination 'FlareBox::rect' of set command at /fms/hhtlc/hhtlc_1b4187d3b30d65cf.pk4/guis/map/hhtlc.gui:73 --------- Map Initialization --------- Map: hhtlc ------- Game Map Init SaveGame ------- ---------- Compile stats ---------- Memory usage: Strings: 57, 9144 bytes Statements: 23506, 940240 bytes Functions: 1379, 179716 bytes Variables: 108332 bytes Mem used: 2188540 bytes Static data: 408 bytes Allocated: 1176676 bytes Thread size: 7928 bytes collision data: 1373 models 163204 vertices (5100 KB) 273893 edges (12838 KB) 110189 polygons (8170 KB) 15680 brushes (2322 KB) 138243 nodes (6480 KB) 243924 polygon refs (3811 KB) 58507 brush refs (914 KB) 85771 internal edges 9795 sharp edges 0 contained polygons removed 0 polygons merged 39637 KB total memory used 2123 msec to load collision data. map bounds are (22831.0, 23151.4, 9093.0) 79 KB passage memory used to build PVS 52 msec to calculate PVS 252 areas 598 portals 14 areas visible on average 7 KB PVS data [Load AAS] missing maps/hhtlc.aas48 [Load AAS] loading maps/hhtlc.aas96 done. [Load AAS] loading maps/hhtlc.aas32 done. [Load AAS] missing maps/hhtlc.aas100 [Load AAS] loading maps/hhtlc.aas_rat done. [Load AAS] loading maps/hhtlc.aas_elemental done. 
WARNING:Couldn't load gui: 'guis/map_of.gui' WARNING:idCollisionModelManagerLocal::LoadModel: collision file for 'models/ritual_hammer2.lwo' contains different model WARNING:idCollisionModelManagerLocal::LoadModel: collision file for 'models/ritual_hammer3.lwo' contains different model WARNING:idCollisionModelManagerLocal::LoadModel: collision file for 'models/ritual_hammer4.lwo' contains different model WARNING:Couldn't load sound 'explosion_all_clear.wav' using default [map entity: atdm_trigger_voice_12] [decl: explosion_all_clear in <implicit file>] [sound: explosion_all_clear.wav] No running thread for RestoreScriptObject(), creating new one. -------------------------------------- ----- idRenderModelManagerLocal::EndLevelLoad ----- 0 models purged from previous level, 2786 models kept. --------------------------------------------------- ----- idImageManager::EndLevelLoad ----- WARNING:Couldn't load image: lights/qc_comj [map entity: light_159] [decl: lights/qc_comj in <implicit file>] [image: lights/qc_comj] WARNING:Couldn't load image: guis/assets/game_maps/map_of_icon [map entity: MapMansion1] [decl: atdm:map_of in def/tdm_shopitems.def] [decl: guis/assets/game_maps/map_of_icon in <implicit file>] [image: guis/assets/game_maps/map_of_icon] 0 purged from previous 219 kept from previous 2070 new loaded all images loaded in 41.8 seconds ---------------------------------------- Linking GLSL program cubeMap ... Linking GLSL program bumpyEnvironment ... Linking GLSL program depthAlpha ... Linking GLSL program fog ... Linking GLSL program oldStage ... Linking GLSL program blend ... Linking GLSL program stencilshadow ... Linking GLSL program shadowMapA ... Linking GLSL program ambientInteraction ... Linking GLSL program interactionStencil ... Linking GLSL program interactionShadowMaps ... Linking GLSL program interactionMultiLight ... Linking GLSL program frob ... Linking GLSL program soft_particle ... Linking GLSL program tonemap ... 
Linking GLSL program gaussian_blur ... Linking GLSL program testImageCube ... Linking GLSL program depth ... Linking GLSL program interaction_ambient ... Linking GLSL program interaction_stencil ... Linking GLSL program interaction_shadowmap ... Linking GLSL program stencil_shadow ... Linking GLSL program shadow_map ... Linking GLSL program frob_silhouette ... Linking GLSL program frob_highlight ... Linking GLSL program frob_extrude ... Linking GLSL program frob_apply ... Linking GLSL program heatHazeWithDepth ... Linking GLSL program HeatHazeWithMaskAndDepth ... Linking GLSL program heatHaze ... Linking GLSL program heatHazeWithMaskAndBlur ... Linking GLSL program fresnel ... Linking GLSL program ambientEnvironment ... Linking GLSL program heatHazeWithMaskAndDepth ... ---------------------------------------- ----- idSoundCache::EndLevelLoad ----- 394497k referenced 125k purged ---------------------------------------- ----------------------------------- 77079 msec to load hhtlc Interaction table generated: size = 0/512 Initial counts: 6903 entities 665 lightDefs 5265 entityDefs ------------- Warnings --------------- during hhtlc... 
WARNING:Couldn't load gui: 'guis/map_of.gui' WARNING:Couldn't load image: guis/assets/game_maps/map_of_icon WARNING:Couldn't load image: lights/qc_comj WARNING:Couldn't load sound 'explosion_all_clear.wav' using default WARNING:idCollisionModelManagerLocal::LoadModel: collision file for 'models/ritual_hammer2.lwo' contains different model WARNING:idCollisionModelManagerLocal::LoadModel: collision file for 'models/ritual_hammer3.lwo' contains different model WARNING:idCollisionModelManagerLocal::LoadModel: collision file for 'models/ritual_hammer4.lwo' contains different model 7 warnings Interaction table generated: size = 0/512 Initial counts: 6903 entities 665 lightDefs 5265 entityDefs WARNING:Restarted sound to avoid offset overflow: sound/ambient/environmental/water_pool02.ogg WARNING:Restarted sound to avoid offset overflow: sound/ambient/ambience/silence.ogg WARNING:Restarted sound to avoid offset overflow: sound/ambient/ambience/alien05.ogg Linking GLSL program environment ... The ambient volume is now -1.885291 decibels (range: -60..0), i.e., 87.749992% of full volume. Restarting ambient sound snd_ct_babtistery'(derelict03) with volume -1.885291 signal caught: Segmentation fault si_code 128 Trying to exit gracefully.. ----- idRenderModelManagerLocal::EndLevelLoad ----- 0 models purged from previous level, 2786 models kept. --------------------------------------------------- Regenerated world, staticAllocCount = 0. Getting threadname failed, reason: No such file or directory (2) --------- Game Map Shutdown ---------- ModelGenerator memory: 67 LOD entries with 0 users using 1072 bytes. --------- Game Map Shutdown done ----- Shutting down sound hardware idRenderSystem::Shutdown() ...shutting down QGL I18NLocal: Shutdown. ------------ Game Shutdown ----------- ModelGenerator memory: No LOD entries. Shutdown event system -------------------------------------- Sys_Error: ERROR: pthread_join Frontend failed shutdown terminal support About to exit with code 1
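For anyone triaging a pasted log like the one above, here is a minimal Python sketch that pulls out the text surrounding the crash markers. The two markers ("signal caught:" and "Sys_Error:") are taken from this log; treating them as the interesting ones is an assumption, and `crash_context` is a hypothetical helper, not part of TDM. It searches by substring rather than by line because the forum flattens the console output into a few very long lines.

```python
def crash_context(log_text, markers=("signal caught:", "Sys_Error:"),
                  before=120, after=60):
    """Return (marker, snippet) pairs showing the text around each marker.

    `before` and `after` are character counts, not line counts, because
    the pasted log has lost its original line breaks.
    """
    hits = []
    for marker in markers:
        start = log_text.find(marker)
        while start != -1:
            lo = max(0, start - before)
            hi = min(len(log_text), start + len(marker) + after)
            hits.append((marker, log_text[lo:hi].strip()))
            # Keep scanning in case the same marker appears again later.
            start = log_text.find(marker, start + 1)
    return hits


if __name__ == "__main__":
    # Shortened sample assembled from phrases in the log above.
    sample = ("Restarting ambient sound foo signal caught: Segmentation fault "
              "si_code 128 Trying to exit gracefully.. "
              "Sys_Error: ERROR: pthread_join Frontend failed")
    for marker, snippet in crash_context(sample):
        print(marker, "->", snippet)
```

The snippet before the marker shows the last thing the engine reported before the fault, which here is the ambient sound restart. That is only a hint about where to look, not a diagnosis.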

-

That sort of tone doesn't fly in our forums.

-

I would use this massive list for any fan missions; it includes campaigns too: https://www.ttlg.com/forums/showthread.php?t=148090 There are a lot of Fan Missions for the picking. I myself go for the lesser-known ones and the short variety, because sometimes they hide a gem or two. Just like jaxa, I'm a bit outdated after the temporary retirement, but I do remember some amazing campaigns like "The Black Frog". If you intend to play The Black Frog, you should play the first two of the L'Arsene series missions; it's how I did it myself. Also, yes, L'Arsene is a fantastic series. The first mission of L'Arsene is a "rough draft", as the author was a bit new to Thief level making, but it's still great either way; after the 3rd you will see how his skill increased by a massive amount.

-

Unfortunately, the TDM forum deletes the separator between the numbers, so I have to guess where one number ends and the next starts. Also, a user can rename the screenshot manually, or push it through something that would rename it automatically. Then we won't see coordinates in the screenshot address on the forums.
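To illustrate why the missing separator forces guessing, here is a minimal Python sketch that lists every way a run of digits could be cut into a given number of integers. The digit string and the "no leading zeros" rule are assumptions for illustration only; the real screenshot name format (signs, decimals, number of fields) is not given here, and `possible_splits` is a hypothetical helper.

```python
def possible_splits(digits, parts):
    """Yield every way to cut `digits` into `parts` non-empty integers.

    Assumption: a number never has a leading zero unless it is "0" itself.
    """
    if parts == 1:
        if digits and (len(digits) == 1 or digits[0] != "0"):
            yield (int(digits),)
        return
    # Leave at least one digit for each of the remaining parts.
    for cut in range(1, len(digits) - parts + 2):
        head = digits[:cut]
        if len(head) > 1 and head[0] == "0":
            continue
        for rest in possible_splits(digits[cut:], parts - 1):
            yield (int(head),) + rest


if __name__ == "__main__":
    for candidate in possible_splits("1234", 2):
        print(candidate)
```

Even four digits split into two numbers give three candidates, (1, 234), (12, 34) and (123, 4), and the count grows quickly with longer strings and more fields, so only a plausibility check against the map can narrow it down.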

-

tdm_show_viewpos cvar and screenshot_viewpos command: https://forums.thedarkmod.com/index.php?/topic/22310-212-viewpos-on-player-hud-and-screenshots/

-

Relax @Näkki, it's great to hear you enjoyed the game. My personal expectations were just a bit different when I read the Steam page, although the various trailers should have been a warning that stealth maybe wasn't the biggest priority of the devs. From the Steam page: "Weird west legends meet eldritch horror in BLOOD WEST, an immersive stealth FPS." Also "Blood West is a stealth FPS inspired by the genre classics such as the Thief series (whose fans will be happy to hear the voice of Stephen Russell, the actor voicing the master-thief Garrett, returning here as the protagonist), S.T.A.L.K.E.R. games, or - from the contemporary catalog - Hunt: Showdown. The gameplay rewards the careful approach: scouting the area, stalking your enemies, and striking from the shadows. Can you figure out a way to clear a fort full of ghouls and monsters without raising an alarm?" From my personal experience I think the game is predominantly Hunt: Showdown, a bit of S.T.A.L.K.E.R. and a very small portion Thief. Stealth is very unforgiving and makes it almost impossible early game when there are various enemies around, but hey maybe I just suck at it. I don't see Deus Ex in it, unless the skill leveling is the Deus Ex part for you and then I have to disagree with you, as that seems like the trait system in Hunt: Showdown. Edit: What I also understood from the Steam forums is that the original VA was dropped shortly before the release of the full version and replaced by Russell with no real explanation from the devs why this was done.

-

The devs didn't title this thread, and @datiswous said they're attempting to mislead people by using Russell's name and a retro style to make it resemble Thief, which is cynical. I grew up on forums like I'm sure anyone who likes a game from '98 did. I actually left the Discord immediately after joining it because it was more off-topic doom-posting than anything relevant to the mod. I thought the forums might be better, but it's mostly just grown men yelling at clouds and telling strangers how mature they are, and a few brave souls actually developing anything. Depressing place, I'll just stick to enjoying new missions every 6 months without an account.

-

True, but, 1. this thread is called "Western stealth FPS with Stephen Russell", and, 2. nothing you said changes anything for me. The gameplay still doesn't look like something I'd enjoy. And, if you really think this forum is cynical, then you don't visit forums much. Actually, the majority of the users are pretty mature, unlike in other forums.

-

So, if I understand you, no Thief Gold FM does sound and text notifications of completed objectives? The missions in The Black Parade surely did. I'm completely confused now. I was sure that original Thief Gold had those objective complete notifications (at least the sound). Reading this thread suggests otherwise though: https://www.ttlg.com/forums/showthread.php?t=132977

-

A Problem Arises

I've paused subtitling of the Lady02 vocal set, because of a problem with the voice clips described here: https://forums.thedarkmod.com/index.php?/topic/21741-subtitles-possibilities-beyond-211/&do=findComment&comment=490151 While a way forward is being determined, I'll work on a different vocal set. Maybe manbeast, for which Kingsal just provided me the voice script.

-

Changelog of 2.12 development:

release212 (rev 16989-10651)
* Training Mission reverted to 2.11 state, except for text changes about new controls.

beta212-07 (rev 16982-10651)
* Fixed save/load of turrets.
* Fixed some more cases of camera clipping during force-crouched mantle (6425).
* Fixed crash if player wins twice in quick succession (6489).
* Added angRotate script event.
* Fixed church_altar prefab (6285).

beta212-06 (rev 16970-10644)
* Fixed light leaks workaround dropped after save + load (post).
* Force doors which connect visportal to cast shadows regardless of light flow (post).
* Improved candle vs junk detection for new frob controls (6316).
* Fixed player getting stuck at start of "One Step Too Far" (post).
* Fixed warning on spawning atdm:env_ragdoll_tdm_spider.
* Fixed wrong skin in mechanical/switches/switch_rotate_lever prefab (6479).
* Fixed double slash in lady02 subtitle decls (post).
* Fixed rotated versions of safe03_wall prefab (6268).
* Tweaked fogging of health potion.
* Fixed overbright skins for nature bushes (6478).
* Fixed Grandfather_clock_victorian_01 model (6383).
* Removed pause from looping sound machinery/machines/m3_loop (6384).
* Fixed broken func_portals in Training Mission (4352).
* Minor improvements in Saint Lucia mission.
* Doubled game scripts memory limit (post).
* Improved normal map of long banners (6355).

beta212-05 (rev 16950-10635)
* Fixed player seeing through ceiling when mantling into crouched state (6425).
* Improved how frobbing works on junk items (6316).
* Toggled states of player movement are saved and restored properly (6458).
* Fixed back image loading optimization.
* Added canCloseDoors spawnarg on AI, which allows to block closing only (6460).
* Rats and spiders are non-shoulderable by default (6456).
* Increased wait in screenshot_viewpos macro command (6331).
* Added forest models from The Valley abandoned mission.
* Fixed frobstage on sign models (6457).
* Added vine arrows to training mission (4352).
* Improved Merry Chest prefabs (6459).
* Fixed normal map of dirt_packed_muddy (4668).
* Fixed nails in door_boarded_up01 model.
* Fixed attachments of atdm:fireplace_place_base (6474).
* Fixed editor image of blocks_large_sandstone, rough_grey_dirty_sepia_grey_trim (6281, 6464).
* Added editor image for grey_dirty_trim material.
* Adjusted tooltip for auto-search bodies.

beta212-04 (rev 16932-10626)
* Added massive package of subtitles for AI sounds (6240, thread).
* Fixes in envshot command (5796).
* Added nature/dirt/ash_and_coals texture (6441).

beta212-03 (rev 16902-10623)
* Improved subtitles layout and location ring picture (p1 p2).
* Fixed broken remote render with soft stencil shadows.
* Added color buffer clears to fix remote render breaking skybox (6424).
* Fixed warning generated by remote render (6424).
* Fixed min_lod_bias being ignored if no other LOD settings is specified (6359).
* Now changing LOD settings effects objects with min_lod_bias immediately (6359).
* Fixed text & background alignment in mission lists (6337).
* Fixed gaps in chandelier models (6433).
* Added missing editor texture for carpet/runners/ornate_red_black03_end (6435).
* Further expansion of listRenderLightDefs and listLightEntityDefs commands.

beta212-02 (rev 16889-10613)
* Fixed underwater rendering due to missing doublevision shader (post).
* Exclude more lights from the new light portal flow optimizations (5172, 6321).
* black_matt is now fully black, no tiny green bias (post).
* Fixed lockpick interruption when mouse cursor switches between door and handle.
* Extended listRenderLightDefs and listLightEntityDefs commands.
* Fixed church_altar.pfb (6285).
* Added window01_curtains01.lwo in separate parts (6356).
* tdm_open_doors now opens locked doors too.
* Fixed rare case of getting NaN in spline mover.
* Added r_skipEntities cvar, similar to "filter entities" in DR.
* Added editor spawnargs for volumetric light properties (6322).
* Fixed radius override and added position override for script-based stims.
* Fixed warnings with wrong virtual function overrides.

beta212-01 (rev 16879-10584)
* Fixed player falling through elevator when shouldering a body (6259).
* Rebalanced volume of all player footsteps (6348).
* Fixed weird animation when mixing drawn bow and main menu (2758).
* Fixed all kind of issues with bc_teatray material.
* Added alternative frob controls mode tdm_holdfrob_drag_all_entities for dragging on hold.
* Fixed non-actor entities always getting full splash damage.
* Hide console before screenshot with screenshot_viewport command (6331).
* Added tdm_subtitles_ring cvar to disable subtitles location ring.
* Added mission.cfg as a temporary way for mission to override non-archived cvars (5453).
* Cvars "pm_lean_*" are no longer archived (6320).
* Removed some cvar overrides from atdm:player_base.
* CFrobLock now supports script events: Lock, Unlock, ToggleLock, IsLocked, IsPickable (6329).
* Simplified flee script event, supported fleeing from non-actor entity and fleeFromPoint.
* Fixed crash on some non-standard cases of flinderize.
* Can set spawnarg "douse 0" on damageDef to not extinguish lights from splash damage.
* Added setFrobMaster script event.
* Added script-based stim type, which triggers only when stimEmit script event is called.
* Added on/off script events to func_emitter entity.
* Added setSmoke script event to change particle decl for a func_smoke.
* Added hasInvItem script event to check if player has some item.
* Added launchGuided script event to start guided projectiles.
* Added getInterceptTime script event for shooting projectile and running target.
* Added "bounce_sound_min|max_velocity" spawnargs to control projectile bounce sounds.
* Added "postbounce_*" spawnargs to change projectile properties after bounce.
* Fixes to moor guard ragdoll (6345).
* Fixed wench AI sounds (6284).
* Added new experimental entityDef for an automatic turret. * Official missions no longer pretend to be part of 3-mission campaign (6338). * Removed AI PAIN messages console spam. * Removed excessive "s_volume 0" from base loot entityDefs (6346). * Replaced symbol on the proguard's belt. * Default value of com_maxfps increased from 144 to 300. * Improved idEntityPtr, fixed some warnings. dev16854-10518 * High mantle animation has become much faster (6343). * Crouching while on ladder/rope now causes player to slide instantly. * Added "forceShadowBehindOpaque" hack to workaround shadow leaks in old missions (5172). * Fixed and revised underwater "double vision" effect (6300). * Add scratch images have alpha = 1, which fixes some mirror materials (6300). * Added warning if material output color depends on input alpha, fixed it in core assets (6340). * Support several independent user addon scripts (6336). * Fixed missing headbob and footsteps at very high FPS (4696). * Fixed player hanging mid-air in a jump at very high FPS (6333). * Don't crash if player's head does not exist (6326). * Added "fade in fast" options for frobhelper (6342). * Removed "show tooltips" option, now it is always on (6344). * Added default spawnarg values to "text" debug entityDef (6325). * Fixed some uninitialized values, float overflows and NaNs across the code. * Reorganized covered furniture models from Seeking Lady Leicester (6289). dev16842-10488 * Major changes in frob/use controls: holding frob does different thing now (6316, thread). * Fixed some electric lights not spawning. * It is no longer necessary to specify extension to reference PNG image. * Added cvar tdm_show_viewpos and command screenshot_viewpos (6331). * Fixed hanging when light is moved through a plane with many visportals (3815, thread). * Fixed multipage readables stuck on empty page, improved page flipping (6313). * Fixed WAV sounds playing in main menu, all sounds are streaming now (6330). 
* Fixed light leaks along scissor rectangle boundary with soft stencil shadows (thread). * Better subtitles location visualization (6264). * Changed position of subtitle blocks and subtitle font (6264). * Internal refactoring of idImage class (6300). * Fixed rare bug in renderworld raycasting... might happen with particle collisions. * Fixed warnings in newspaper_bridgeport0X core readable GUI (6245). * Added vec4 GUI keyword (6164). * Renamed pm_lean_toggle cvar to tdm_toggle_lean. * Improved "head bob" and "mantle roll" settings in main menu. * Updated FFmpeg to 4.4.4 (6314). Known issues: * Various problems after image refactoring: underwater, mirrors, etc. dev16829-10455 * Allowed to mantle while carrying/manipulating an object (5892, thread). * Allowed to change weapon while mantling or on rope/ladder (6319). * Several leaning improvements (6320, thread). * Parallel shadow-casting lights are deprecated, use parallelSky instead (6306). * Added many menu settings for autoloot body, blackjack helper, and other (6311). * Deleted option for autolooting bodies with one item per frob, added menu setting (6257). * Added cvar to modify all head bobbing settings (6310). * Fixed some corner cases with multiloot (6270). * Fixed frob helper's "always visible" mode (6318). * New&fixed versions of atdm:lamp_electric_square_3_lit_unattached (6315). * Fixed UV map on Stove models (6312). * Reworked r_showPrimitives + deleted code for rendering from CPU buffers. * Shortened name of end-mission autosaves (6294). * Consistent names of various arrows. Known issues: * Some electric lights don't spawn. dev16818-10434 * Fixed projectiles flying through player and enemies sometimes (6292). * Lights with noshadows spawnarg pass through walls again (5172). * Disabled portal flow culling optimization for parallel lights (5172, 6306). * Faster light-entity interactions matching if light is noshadows due to spawnarg (6296). * Compression of images to DXT1/3/5 is done in software (6300). 
* Cleaned up rounding math routines (6300). * r_showportals 2 is easier to understand now * Changed rules for getting start areas of parallelSky light (6306). dev16814-10408 * Optimized portal flow culling for shadowing lights (5172). * Extended dmap diagnostics to info_portalSettings, improved editor descriptions (6224). * Added test commands: tdm_open_doors and tdm_close_doors. * Minor adjustments to ear-cutting algorithm in dmap. * Minor refactoring in image compression code (6300). dev16809-10394 * r_shadowMapSinglePass is enabled by default now. * Fixed lack of shadows in volumetric lights under r_shadowMapSinglePass (6271). * Fixed interaction rendering on materials with polygonOffset (5868). * Optimized code for finding light-entity interactions on large maps (6296). * Optimized moving shadowing lights: don't create interactions in unreachable areas (5172). * More refactoring in backend: tonemap shader (6271). * Added more covered furniture models (6289). * Added wall models from Seeking Lady Leicester (6293). Known issues: * Some noshadows lights no longer pass through walls. dev16801-10370 * Supported -durationExtend for inline subtitles (6262). * Added blue noise dithering to tonemap shader, which fixed color banding of fog (6271). * Optimized away unnecessary render copy under "useNewRenderPasses 1" (6271). * Refactored blend and fog lights into new backend architecture. For troubleshooting, reduce cvar useNewRenderPasses to 1 or 0 (6271). * Added 30 case to max FPS selection in settings menu. dev16792-10357 * Fixed particles bound to animated joints (6099). * idVec3 is no longer initialized to zero by default (6280). * Integrated Address Sanitizer tool and fixed a few found bugs (6280). dev16789-10349 * Deleted old backend completely + some cleaning (6271). * Fixed map icon wrong name (thread). * Now light entities support noPortalFog spawnarg (6282). * Support fonts aspect ratio correction (6283). * Fixed playerstart customization (thread). 
* Refactored "render pass" part into new backend architecture. For troubleshooting, try cvar "useNewRenderPasses 0". Also "textures/particles/blacksmokepuff" now works (6271) * Now arithmetic expressions in materials support min/max functions (6271). * Minor initialization cleanup (6280). dev16785-10319 * "r_shadowMapSinglePass 1" now respects noselfshadows flag (6271). * Continued refactoring in shadow maps and render-pass shaders (6271). dev16783-10307 * Backported new rendering backend to uniforms, should work like the old one now (6271). * "Auto" lockpicking difficulty now unlocks pin from after one cycle (6256). * Added "auto-search bodies" feature under tdm_autosearch_bodies cvar (6257). * Added r_shadowMapAlphaTested cvar for single-pass shadow maps (6271). dev16781-10289 * Added first version of direction and volume cues to subtitles (6264). * Allow subtitles to extend duration of sound sample (6262). * Improved slot allocation algorithm for subtitles, a subtitle no longer changes slot (6264). * Fixed bug that stereo sample plays for 2x duration due to length confusion. * Upgraded libpng and rebuilt third-party packages. * Internal fixes of depth bounds test asserts. dev16778-10275 * Allow limited mantling with a shouldered body (5892). * Fixed toggle creep and improved settings layout in the menu (6242). * Fixed bounding boxes of animated entities and particles, enabled r_useEntityScissors by default (6099). * Trigger call_on_exit before call_on_entry when switching locations. * Don't expand bounds of surfaces with turbulent deform (5990). * Removed "gui" spawnarg on GUI message to avoid first frame (6117). Known issues: * Particles bounds to animated joints broken. Changelog of earlier versions can be found here.

-

Black Parade is released! https://www.ttlg.com/forums/showthread.php?t=152429

-

Body awareness please. https://forums.thedarkmod.com/index.php?/topic/20013-are-you-gonna-add-this/

-

If the "mission fails as soon as stealth score turns non-zero," that would not be good for ghost players. They might need to find out "how" they failed and experiment to avoid alerting guards. They might need to take those score points as a "bust". They might need to take those score points to complete an objective. Then, mission authors would need to encode exceptions into their missions, which would be a lot of work (if they decide to do it at all). However, part of what makes ghosting challenging and fun is when mission authors do not create their missions with ghosting in mind. Please see: Official Ghosting Rules: https://www.ttlg.com/forums/showthread.php?t=148523 Writing code for these rules would be a huge undertaking. Ghost Rules Discussion: https://www.ttlg.com/forums/showthread.php?t=148487 Creating an official mode could alienate these dedicated ghost players, because it would clash with what is considered ghosting in the community. Including the Stealth Stat Tool mod in the official release would be more useful. Or, making the audible alert states of guards quick and easy to recognize could help as well. For these reasons, I don't agree with an official "Ghost" mode. If the dev team were to do it, we should consult with @Klatremus so we get it 100% correct or not pursue it at all. (This ghosting bit should probably be in its own thread.)

-

I loved it. Awesome game. I facepalmed at the people who asked for quest markers in the Steam forums there... Lord, let it rain brains. The game is so great, and so true to the original, because it doesn't hold your hand. When is the new breed of gamers gonna learn?

-

A couple more: https://forums.thedarkmod.com/index.php?/topic/21739-resolved-allow-mantling-while-carrying-a-body/ https://forums.thedarkmod.com/index.php?/topic/22211-feature-proposal-new-lean-for-tdm-212/ https://forums.thedarkmod.com/index.php?/topic/22198-feature-proposal-frob-to-use-world-item/ https://forums.thedarkmod.com/index.php?/topic/22249-212-auto-search-bodies/

-

Try it, it's FOSS like Blender, nothing to lose.

-

Interesting, didn't know this yet. The problem is that Blender tutorials won't work that well with it, so although it may be easier to use, I'm not sure it's a better choice.

-

GIMP and Krita are a killer combo for image creation and editing. If you also use Blender or Bforartists, perfect.

-

I'm happy to present my first FM, The Spider and the Finch. There may be a spider, but no ghosts or undead. It should run a couple of hours. It's now available on the Missions page or the in-game downloader. Many thanks to the beta testers Acolytesix, Cambridge Spy, datiswous, madtaffer, Shadow, and wesp5 for helping me improve the mission and make it to the best of my abilities. This would not have been possible without Fidcal's excellent DarkRadiant tutorial. Thanks also to the many people who answered my questions in the TDM forums. Cheers!

2023-12-13 Mission updated to version 3. Fixed a bug where the optional loot option objective was not actually optional. Updated the animations for Astrid. Added a hallway door so the guards are less likely to be aggroed en masse.

2024-05-31 Mission updated to version 4. Adjusted tower guards. Added voices for conversations. Improvements to objectives.

2024-07-12 Updated to version 5. Added dialog subtitles. Small bug fix.

-

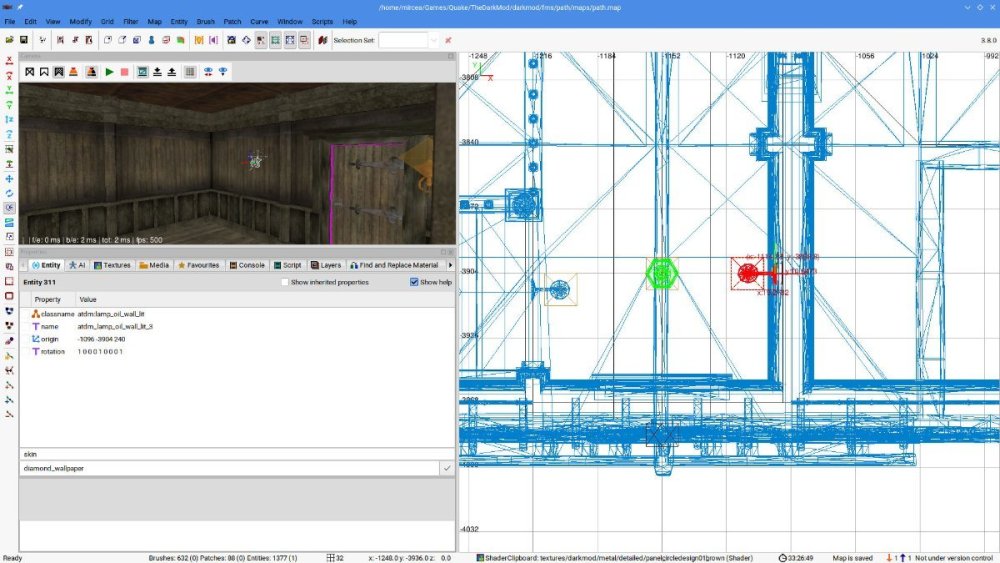

It's not that no: I didn't modify the properties of the default entity or its flame, in this case it's the standard atdm:lamp_oil_wall_lit entity... also I have player shadows enabled, the player as well as other architecture elements cast shadows fine. Walls are the building modules, eg: model models/darkmod/architecture/modules/interior_set01_corner.lwo with skin diamond_wallpaper as a test. This is the closest to the setup I still have: The origin of the light is well beyond the face of the module surface for shadow casting. Though this shouldn't even matter since the light is in the other room and the caulk brush should itself mark this. I think I noticed this on other maps too while playing, but only now saw it obviously enough to realize there's likely an issue somewhere. I remember seeing the glow of a light from another room shining on the floor / ceiling when it shouldn't, though I didn't document it at the time.

-

Public release v1.7.6 (with Dark Mod support) is out. Improvements since the final beta 14 are: Fixed a few remaining bugs with zip/pk4 support. Game Versions window now properly displays TDM version. Import window no longer has a vestigial off-screen TDM field (because TDM doesn't need or support importing). Web search option is now disabled if an unknown/unsupported FM is selected. If an FM with an unknown or unsupported game type is selected, the messages in the tab area now no longer refer to Thief 3 ("Mod management is not supported for Thief: Deadly Shadows"). The full changelog can be viewed at the release link. The de facto official AngelLoader thread is here: https://www.ttlg.com/forums/showthread.php?t=149706 Bug reports, feature requests etc. are usually posted there. I'll continue following this thread though. Thanks everyone and enjoy!

-

Good work! I enjoy short missions because things are nice and focused - you get in, you get out. Also I tend to do better with the loot amounts and I was able to get all the loot without too much trouble, which is rare for me. If I were to make a suggestion though - I found the intro briefing sequence a bit distracting because it was so obvious the narration was pitch-shifted to make a deeper voice. If you felt the original voice wasn't deep enough for your needs, I would either get someone on the forums to record it for you or just leave as is. That's my only real complaint and it's not even about the mission itself, so pretty good first start!