Search the Community


Desired Results File with All Languages

What we want to end up with from our AI (with any iterative fixup and manual integration) is an FM-only version of TDM’s UTF-8 all.lang. That file has 17 language sections in a particular order, which we will adopt too (in the prompt further below), although, other than English being first, the order doesn’t really matter. Once we have our FM all.lang, we can easily generate all the required ISO-encoded *.lang files, e.g., french.lang.

Strategy of Feeding the AI

One approach would be to feed the entire #str list in one gulp, with prompt engineering that covers all aspects. This would minimize the post-translate integration time. But the concern is that prompt engineering becomes more difficult. The AI might get confused about which restrictions and hints apply to which strings. While shared context across strings can sometimes be helpful, too much shared context could lead to overly creative translations (e.g., hallucinations). [BTW, if accessing the AI through an API, there’s often a "temperature" value you can specify, from 0.0 to 1.0, from most-predictable to most-creative. We can use a few words in the prompt to approximately achieve similar ends.]

So, to maintain more control, I’m going to batch-feed. The assumption is that translating #str_ strings in batches of related input groups will allow more focused guidance from prompt engineering, leading to better results. I’ll start with inventory items, which have the shortest strings and the most dictionary-like lookup.

An alternative/additional batching (particularly needed with large FMs) would be by "scene". In the case of Air Pocket, it could be thought of broadly as 4 scenes, based on timeline and location. The story, as driven by objectives, is fairly linear; larger FMs would typically have some randomization in scene order. Would batching by scene be useful (i.e., give better AI results) for some of Air Pocket’s #str_ strings? Thinking this over.
But for now, treat inventory items independently of scene.

Prompt Engineering for Inventory Items

A stab at a reusable template follows in blue. It describes the overall translation task, the desired tone, and input and output formats. Text specific to inventory items is shown in bold. Text that is specific to this FM, to clarify the context and the meaning of particular words, is in italics, with spoilers hidden.

You are an expert translator between English and other European languages, including Russian. You wish to translate a list of inventory items, all inanimate objects, from English to these other languages. Each line of the list begins with a tab, then a word beginning with #str_ within double quotes, then another tab, then a phrase within double quotes. When you translate a line, in the output keep the tabs and the #str_ word unchanged, and only change the phrase to the other target language in UTF-8, keeping it in double quotes. Make the translated phrase reasonably short while preserving the formal meaning. Avoid modern slang. Old-fashioned wording is fine. At the end of each line, add another tab, and then add a back-translation of the previous phrase into English again. When back-translating, ignore the original English phrase. Most of the inventory items are keys, and the associated phrases describe locked doors to particular locations aboard a ship, or locked trunks or safes on a ship. The "Master Key" opens all locks. Following the input list of inventory items, append output lists in these target languages: 1. German 2. French 3. Polish 4. Italian 5. Spanish 6. Portuguese 7. Russian 8. Czech 9. Hungarian 10. Dutch 11. Slovak 12. Danish 13. Swedish 14. Romanian 15. Turkish 16. Catalan. List of inventory items:

[... skipping 1 potential spoiler]
	"#str_fm_map_inv_key_captains_cabin"	"Captain's Cabin"
	"#str_fm_map_inv_key_captains_safe"	"Captain's Safe"
	"#str_fm_map_inv_key_galley"	"Galley"
	"#str_fm_map_inv_key_master_key"	"Master Key"
	"#str_fm_map_inv_key_mess"	"Mess"
	"#str_fm_map_inv_key_sea_trunk"	"Sea Trunk"

Using ChatGPT

As discussed at the outset, you can use this without signing in (it will nag you). Also, if you’d like it not to retain your input for training purposes, click on the circled question mark and change it under "Settings".

As of this post, if you ask ChatGPT what model it’s using, it responds "You're currently chatting with GPT-4o, the latest model from OpenAI as of 2025. The "o" stands for "omni" — it's designed to handle text, images, and more, all in one model." Following up by enquiring about usage limitations, it says "Free users can access GPT‑4o, but with strict usage caps, which vary based on demand and time of day". More specifically, "usage falls in the range of 5–16 messages per 3–5 hours, after which you'll be limited or switched to GPT‑4o‑mini." The latter is a faster but lower-accuracy model. "We’ll notify you once you’ve reached the limit and invite you to continue your conversation using GPT-4o mini or to upgrade to [paid] ChatGPT Plus."

Because I’m doing this at a leisurely rate (and reporting it to you in posts), the usage restrictions should not bite.

About Input and Output Formats

As you can see above, the input is the AI prompt, followed by content from english.lang, namely, the lines between the "{" and "}" brackets. For those lines, the tab-separation is left unchanged. The output has the same format, but with an English back-translation added to each line.

When ChatGPT generates the response, each requested language is enclosed in its own HTML response frame, with a separate "copy" link. So you have to copy each frame separately, pasting them successively into your FM-specific all.lang file while adding headers, e.g., [French].
Also, the frame margin contains the word "vbnet". When I asked, ChatGPT indicates that’s the style of syntax highlighting applied to the results, based on source material, but it may be inappropriate and so ignorable. Which explains why the word "key" was always colored green. In the next post, I’ll discuss the specific results.
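The line format described above (tab, quoted #str_ key, tab, quoted phrase, and in the output another tab plus a quoted back-translation) lends itself to a mechanical check before pasting a response into all.lang. What follows is only a minimal sketch under assumptions: the function names are hypothetical, phrases are assumed not to contain escaped double quotes, and the sample French line in the usage note is made up for illustration rather than taken from actual ChatGPT output.

```python
import re

# One string-table line: tab, quoted #str_ key, tab, quoted phrase,
# optionally followed by another tab and a quoted back-translation.
# Assumption: phrases contain no escaped double quotes.
LINE_RE = re.compile(r'^\t"(#str_[^"]+)"\t"([^"]*)"(?:\t"([^"]*)")?\s*$')


def parse_lang_lines(text):
    """Return {key: (phrase, back_translation_or_None)} for well-formed lines."""
    entries = {}
    for line in text.splitlines():
        match = LINE_RE.match(line)
        if match:
            key, phrase, back = match.groups()
            entries[key] = (phrase, back)
    return entries


def check_translation(source_text, translated_text):
    """Compare one translated batch against the English input and list problems."""
    source = parse_lang_lines(source_text)
    output = parse_lang_lines(translated_text)
    problems = []
    for key in source:
        if key not in output:
            problems.append(f"missing: {key}")
        elif output[key][1] is None:
            problems.append(f"no back-translation: {key}")
    for key in output:
        if key not in source:
            problems.append(f"unexpected: {key}")
    return problems
```

For example, feeding it the two English lines for "Galley" and "Mess" plus a response that only contains `"#str_fm_map_inv_key_galley"` with a phrase and back-translation would report the "Mess" key as missing. A check like this only catches structural slips (dropped lines, altered keys, missing back-translations); judging translation quality still needs a human reading the back-translations.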

-

==============
-= IRIS =-
==============

WELLINGTONCRAB
TDM v 2.11 REQ
Ver. 1.3

*For Maureen*

-=-

"Carry the light of the Builder, Brother. Unto its end."
-Valediction of the Devoted

"What year is this? Am I dreaming?"
-Plea of the Thief

Dear Iris,

I am old and broken. When we were young it felt like the words came easily. Now I find the ink has long dried on the pen and I'm as wanting for words as coin in my purse. I can tell we are nearing the end of the tale; time enough for one more job before the curtain call...

==============

-Installation-

Requires minimum version of TDM 2.11

**Dev build dev17056-10800 (2.13) fixes several visual effects which have been broken in the mission since the release of 2.11. For that reason playing with that version or later is currently recommended**

-Iris does not support mods or the Unofficial Patch-

Download and place the following .pk4 into your FMs directory:

Iris Download

==============

*Thank you for playing. Iris is a large mission which can take as little or as much time as you are compelled to play. I hope someone out there enjoys it and this initial release is not completely busted - I tried the best I could!*

*Iris both is and isn't what it seems. If commenting please use spoiler tags where appropriate. If you are not certain whether it would be appropriate, a good assumption would be to use a spoiler tag*

*Support TDM by rating missions on Thief Guild: https://www.thiefguild.com/*

==============

WITH LASTING GRATITUDE:

OBSTTORTE - Whose gameplay scripts from his thread laid the foundation which made the mission seem like something I could even pull off at all. Also fantastic tutorial videos!

DRAGOFER - Who built upon that foundation and made it shine even brighter! And who also provided immeasurable quantities of help and encouragement the past couple years on the TDM discord.

ORBWEAVER & GIGAGOOGA - For generously offering their ambient music up for use.

EPIFIRE - Who lent me his fine trash and trash receptacle models.

AMADEUS - Who was the first person who wasn't me to play the damn thing and provided his excellent editorial services to improving the reader's experience playing

TESTERS AND TROUBLESHOOTERS:

AMADEUS * DATISWOUS * SPOOKS * ALUMINUMHASTE * JAXA * JACKFARMER * WESP5 * ATE0ATE * MADTAFFER * STGATILOV * DRAGOFER * KINGSAL * KLATREMUS - What can I possibly say? Playing this thing over and over again could not have been easy. Deepest thanks and all apologies.

-=THANKS TO ALL ON THE TDM DISCORD AND FORUM=-

==SEE README.TXT FOR ADDITIONAL ATTRIBUTIONS & INFORMATION==

HONORABLE MENTION:

GOLDWELL - If I hadn't by chance stumbled into Northdale back in 2018/2019 I would probably still be trying to get this thing to work in TDS, which means it probably would not exist - though more details on that in readme.

==============

Boring Technical Information:

*Iris is a performance intensive mission and I recommend a GTX 1060 or equivalent. I find the performance similar to other demanding TDM missions on my machine, but mileage may vary and my apologies if this prevents anyone from enjoying the mission.*

*Iris heavily modifies the behavior of AI in the game, how they relate/respond to each other and the player. So they may act even stranger than they do typically in TDM. Feedback on this is useful - as it can potentially be improved and expanded upon in future patches.*

-=-

This is my first release and it has been a long time coming! If I forgot anything please let me know! God Speed.

2.10 Features Used:

- 420 replies

-

Away 0: Stolen Heart by Geep & _Atti_ (2021/11/12)

covert_caedes replied to Geep's topic in Fan Missions

Hrm, I wasn't able to type *after* the spoiler and accidentally submitted the reply already. (Does this forum have a raw mode somewhere, where you type text with tags around it, instead of this broken WYSIWYG editor?) Anyway, what I was going to add: this was a great mission and I already played Away1. I'm really looking forward to Away2 and want to see how the story goes on.

In order to thank the team (and other mappers) for their relentless efforts in contributing, I hereby give the community.... Ulysses: Genesis A FM By Sotha Story: Read & listen it in game. Link: https://drive.google.com/file/d/0BwR0ORZU5sraTlJ6ZHlYZ1pJRVE/edit?usp=sharing http://www4.zippysha...95436/file.html Other: Spoilers: When discussing, please use spoiler tags, like this: [spoiler] Hidden text. [/spoiler] Mirrors: Could someone put this on TDM ingame downloader? Thanks!

- 132 replies

-

Ulysses 2: Protecting the Flock By Sotha The mission starts some time after the events of Ulysses: Genesis, and continues the story of Ulysses. It is a medium sized mission with a focus on stealthy assassinations and hostage liberation. BUILD TIME: 12/2014 - 05/2015 CREDITS The TDM Community is thanked for steady supply of excellent mapping advice. Thanks goes also to everyone contributing to TDM! Voice Actors: Goldwell (as Goubert and Ulysses), Goldwell's Girlfriend (as Alis) Betatesters: Airship Ballet, Ryan101. Special Thanks to: Springheel and Melan (for proofreading). Story: Read & listen it in game. Link: https://drive.google.com/file/d/0BwR0ORZU5sraRGduUWlVRmtsX3c/view?usp=sharing Other: Spoilers: When discussing, please use spoiler tags, like this: [spoiler] Hidden text. [/spoiler] Mirrors: Could someone put this on TDM ingame downloader? Thanks!

- 74 replies

-

This post differentiates between "gratis" ("at no monetary cost") and "libre" ("with little or no restriction") per https://en.wikipedia.org/wiki/Gratis_versus_libre

* A libre version of TDM could:
** Qualify TDM for an article on the LibreGameWiki
*** TDM is currently listed as rejected https://libregamewiki.org/Libregamewiki:Rejected_games_list because "Media is non-commercial (under CC-BY-NC-SA 3.0). The engine is free though (modified Doom 3) (2013-10-19)"
** Qualify for software repositories like Debian
*** TDM is currently listed as unsuitable https://wiki.debian.org/Games/Unsuitable#The_Dark_Mod because 1) "The gamedata is very large (2.3 GB)", and 2) "The license of the gamedata (otherwise it must go into non-free with the engine into contrib)" and links to https://svn.thedarkmod.com/publicsvn/darkmod_src/trunk/LICENSE.txt

Questions:

1) tdm_installer.linux64 is 4.2 MB (unzipped), which is far from the 2.3 GB which is said to be too large. Yes, the user can use it to download data that is non-libre, but so can any web browser. If the installer itself is completely libre, does anyone know the reason why it cannot be accepted into the Debian repository?

2) If adding the installer to the repository is not a viable solution, would it be possible to package the engine with a small and beginner-friendly mission built only from libre media/gamedata into a "TDM-libre" release, and add user-friendly functionality to download the 2.3 GB media/gamedata using "TDM-libre" (similar to mission downloading)?

3) Would such a "TDM-libre" release be acceptable for the Debian repository?

4) Would such a "TDM-libre" release be acceptable for LibreGameWiki?

5) Would the work be worth it?
* Pros: Exposure in channels covering libre software (e.g. the LibreGameWiki). Distribution in channels allowing only libre software (e.g. the Debian repository).
* Cons: The work required for the modifications and release of "TDM-libre". Possible maintenance of "TDM-libre".
I'm thinking that the wider reach may attract more volunteers to work on TDM, which may eventually make up for this work and hopefully be net positive.

6) Are there any TDM missions that are libre already today? If not, would anyone be willing to work on one to fulfill this? I'll contribute in any way I can.

7) I found the following related topics on the forum:
* https://forums.thedarkmod.com/index.php?/topic/16226-graphical-installers-for-tdm/ (installing only the updater)
* https://forums.thedarkmod.com/index.php?/topic/16640-problems-i-had-with-tdm-installation-on-linux-w-solutions/ (problems with installation on Linux)
* https://forums.thedarkmod.com/index.php?/topic/17743-building-tdm-on-debian-8-steamos-tdm-203/ (Building TDM on Debian 8 / SteamOS)
* https://forums.thedarkmod.com/index.php?/topic/18592-debian-packaging/ (Dark Radiant)
... but if there are other related previous discussions, I'd appreciate any links to them.

Any thoughts or comments?

-

That's correct but many people just use the in-game mission downloader to check for new stuff without even looking at the forums, which is what I did. In the past a lot of missions that required a newer version of the game executable and assets would tell you this when you tried to start them up. I was just reporting on this and that you don't need to reinstall, just update via tdm_updater if you find this issue.

-

Just to complicate your life, there are 3 additional aspects to consider about the circa-2014 Mason files, and subsequent circa-2017 improvements to the 'english' version perhaps applicable to your work. (These issues are covered in the wiki "Mason Font" article, with a bit more in my "Analysis of 2.12 TDM Fonts", https://forums.thedarkmod.com/index.php?/topic/22427-analysis-of-212-tdm-fonts/. The 2017 changes can be seen in the *current* 2.13 TDM English Mason files.)

1) Need for custom DAT-scaling on certain Mason characters

The source TTF had upper-case and lower-case characters that were early on considered too similar in size. So (before 2014) in the DAT, selective per-character scaling was used to differentiate them. See https://wiki.thedarkmod.com/index.php?title=Font_Metrics_%26_DAT_File_Format#Per-Character_Font_Scaling for details. As you add new characters, you should do likewise (relatively easy with refont).

2) Creating the "glow" of mason_glow

How Tels created the glow (for 'english' carleton & mason) is discussed in reasonable detail here: https://forums.thedarkmod.com/index.php?/topic/12863-translating-the-tdm-gui/page/5/#findComment-262661 That could be done for Russian too, which I recall currently fakes a glow, and possibly would require a minor GUI or engine code change to use.

Note: To best accommodate glow and retain GIMP-visualization-alignment between base and glow characters, Tels moved some base characters within their bitmap, to keep their glyphs 2-3 pixels away from any bitmap edge. You should consider this when placing new base glyphs.

Note: For the 3 mason bitmaps doubled in size circa-2017 as discussed next, the mason_glow bitmaps were also doubled.

3) Extensive bitmap editing to solve main menu character jaggedness

On Oct. 5, 2017, @Springheel in https://forums.thedarkmod.com/index.php?/topic/19129-menu-update/#findComment-412921 said: "Looking at the Mason fonts, it looks like they were super low res to begin with, and were then just resized [presumably referring to per-character scaling], making them even worse. I'll see what I can do." [Further on, referring to fonts in the TDM menu system:] "It appears that resizing the dds file to make it higher res is possible, so I'll proceed." Later, on Oct 13, 2017, he concluded, within a "More detailed list of changes": "Updated the menu fonts, which were surprisingly bad before".

Unfortunately, I couldn't find details on how this work was actually done. I assume the bitmap editing was all done in GIMP. It started with doubling the size of certain bitmaps from 256x256 to 512x512. This was done for the first 3 bitmaps (i.e., those with ASCII, some Latin-1). Then characters were made more crisp and smooth-edged. How? Dunno. Also, some odd but harmless artifacts happened within GIMP (noted in https://forums.thedarkmod.com/index.php?/topic/22427-analysis-of-212-tdm-fonts/page/3/#findComment-499660)
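The advice in point 2 about keeping base glyphs 2-3 pixels away from any bitmap edge can be checked mechanically once you know where each glyph sits. The sketch below is only an illustration under assumptions: the glyph boxes are a hypothetical dictionary of pixel rectangles (left, top, right, bottom, with right and bottom exclusive) that you would have to fill in yourself; it does not read the DAT format or any TDM file.

```python
def glyphs_too_close_to_edge(glyph_boxes, bitmap_size=256, margin=2):
    """Return the names of glyphs lying within `margin` pixels of a bitmap edge.

    glyph_boxes: hypothetical mapping of glyph name -> (left, top, right, bottom)
    in pixels, with right/bottom exclusive. bitmap_size is the width and height
    of the square bitmap (256, or 512 for the doubled bitmaps).
    """
    flagged = []
    for name, (left, top, right, bottom) in glyph_boxes.items():
        if (left < margin or top < margin
                or right > bitmap_size - margin
                or bottom > bitmap_size - margin):
            flagged.append(name)
    return flagged
```

With `margin=2`, a glyph whose box starts at column 0 would be flagged, while one starting at column 5 and ending well inside the bitmap would pass. Pass `margin=3` for the stricter end of the range, and `bitmap_size=512` for the bitmaps that were doubled.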

-

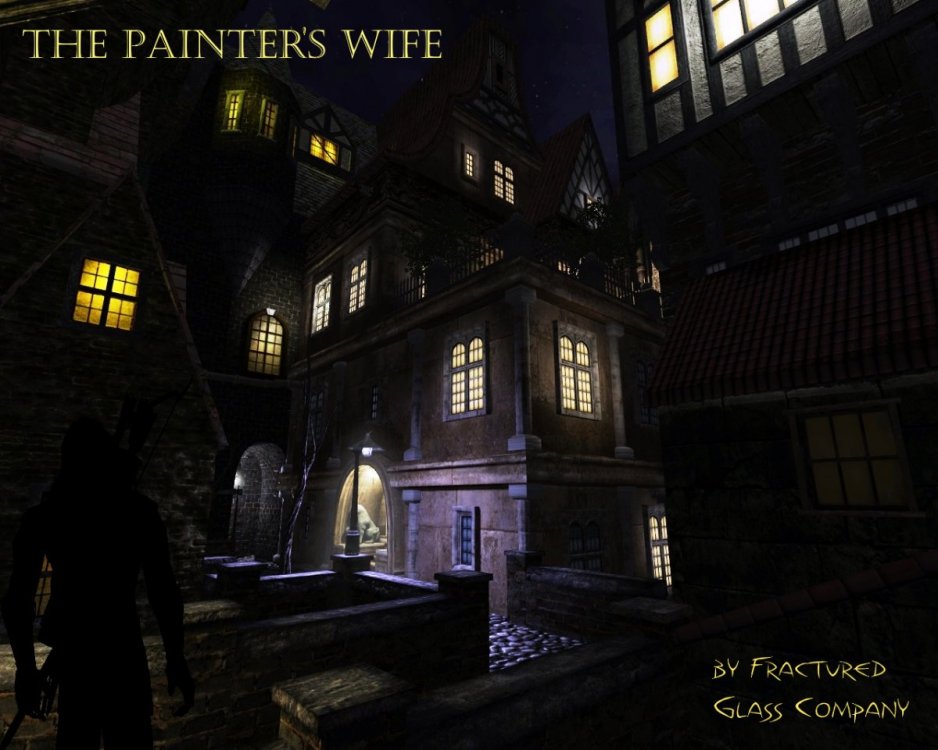

My old friend Andreas urgently needs my help. He asked if I could meet him at the Lion's Head Inn, our favourite retreat in a quaint part of the city called Mirkway Quarter. He’s got a small apartment nearby where he makes a modest living off paintings he sells to pompous nobles and the odd merchant. Not long ago, his wife Lily was hired as a servant at the manor of the local alderman, one Lord Marlow. Now she hasn't been home for days. Andreas went to the manor looking for her, but the guards shoved him into the gutter and warned him not to return. Andreas is certain that something bad has happened, and I don’t think he’s wrong.

Gallery

Authors’ Notes

It all started many years ago when Shadowhide laid the foundation for a sprawling and convoluted city and worked with MoroseTroll and Clearing to create a macabre storyline to befit this medieval metropolis. At some point, however, the beast grew too large to handle, so he handed the keys to the City to Bikerdude and Melan. Together, the two worked tirelessly, passing the map back and forth, each playing to their respective strengths. Notably, Melan reworked the story concept, toning down many of its darker, R-rated elements.

Eventually Melan, too, moved on in 2017, but by then large swathes of the community had become involved in this map’s development. Mapping work was contributed by Baal, Grayman, Fidcal, UberMann, Skacky, and Flanders, while Destined, nbohr1more, and Obsttorte wrote story texts. Several scripts were provided by Grayman, Baal and Obsttorte, such as an elevator with scissor gates, a TDM first.

Even after all this input, the daunting task still remained to transform what had grown into the largest TDM map ever made into a playable mission. Bikerdude hammered away at this for some more years still, on and off between other projects, until in early 2020 he deemed it ready for public viewing. It was then that Dragofer and Amadeus joined in.
In the months that followed, the trio reworked, finished, and polished the mission in nearly every aspect, fully writing out and editing the story as well as adding countless scripted effects and (with help from Bienie) many new readables. The good working atmosphere and pooled creativity brought forth several new secrets, of which the largest likely hasn’t been done before in TDM (hint: check the libraries).

In the very end, the name “Fractured Glass Company” was drawn up to refer to everybody who was involved in creating this very special mission. Without the hard work of all these people, most of all Bikerdude and Shadowhide, this mission would likely never have seen the light of day, let alone become what you see here before you. The mission is, as Bikerdude puts it, a homage to Thief 1 & 2, and it’s our hope that you catch these vibes as you explore and enjoy this mission.

Update 1.2 (released 04/04/2021)

Update 1.1 (released 11/11/2020)

Credits

- Mapping: Shadowhide, Bikerdude, Amadeus, Baal, Dragofer, Fidcal, Flanders, Melan, Skacky, UberMann
- Original Story Concept: Clearing, MoroseTroll, Shadowhide
- Story & Readables: Amadeus, Bienie, Bikerdude, Dragofer, Destined, Melan, nbohr1more, Obsttorte, Shadowhide
- Editor: Amadeus
- Scripting: Dragofer, Baal, Grayman, Obsttorte
- Voice Acting: AndrosTheOxen (Andreas), Joe Noelker (Player)
- Video Editing: Bikerdude (briefing), Goldwell (briefing intro)
- Custom Models: Bikerdude, Dragofer, Dram, Epifire, Grayman, Obsttorte
- Custom Textures: Airship Ballet, Dmv88, Hugo Lobo
- Custom Sounds: GigaGooga, Sephy, Shadow Sneaker, alanmcki, andre_onate, Deathscyp, dl-sounds.com, Dmv88, dwoboyle, eugensid90, gzmo, lucasduff, mistersherlock, qubodup, randommynd, richerlandtv, sfx4animation, Speedenza
- Betatesting: Amadeus, ate0ate, Bienie, Bluerat, CambridgeSpy, Cardia, Dragofer, Garrett(Monolyth-42), JoeBarnin, Kingsal, Krilmar, ManzanitaCrow, MikeA, Noodles, S1lverwolf, s.urfer

Download

Note: this mission requires TDM 2.08, which is now available for download. Please be aware that old saved games will no longer work after you upgrade to 2.08's release build.

Note: it’s highly recommended to run this mission using the 64-bit client (TheDarkModx64.exe), since there've been frequent reports the mission won't load on the 32-bit client (TheDarkMod.exe). Both are found in the same folder.

The mission is available from the ingame downloader. In addition to that, here are some more mirrors, as well as the official screenshots for anybody uploading this mission to a FM database:

Mission: Google Drive / OneDrive
Mission (v1.1, slimmed down version for 32-bit clients): Google Drive / OneDrive
Official Screenshots: Google Drive / OneDrive
Hi-Res Map: Imgur

Links

Secret loot & areas walkthrough by @Lzocast

- 292 replies

-

I switched from a 60 Hz display to a 144 Hz display some time ago. I absolutely love the smoother movement and animations in games. It really makes the games more realistic. Regarding the input lag: I don't think I would notice that. I don't notice a difference in input lag between Vsync on and off either.

-

I'm beginning to wonder if you creeps fuckin hear yourselves when you speak. To give you the benefit of the doubt and assume you just choose language poorly and mean you hope she's not a Mary Sue, doesn't her suffering and competency depend on player input?

-

Might this not be better suited to https://forums.thedarkmod.com/index.php?/forum/58-tdm-tech-support/ ? Failing that, make the text collapsible.

-

When talking about a possible libre version of TDM (https://forums.thedarkmod.com/index.php?/topic/22346-libre-version-of-tdm/) it seems we believe all media/gamedata included in TDM is licensed CC-BY-NC-SA. I am not familiar with how the process of adding new media/gamedata works today; I have seen files uploaded to the bugtracker which developers then commit to SVN, but I don't know if there are other ways.

It may be a good idea to implement a process so that when new components (media/gamedata included in TDM) are added, the contributor is asked to be explicit about the license (a choice which may default to their previous preference, for usability). It won't fix the past, but it may help in the future. This will make it easy for contributors to add future data under a more permissive license if they choose. Libre media can be added and its license can be tracked, rather than assumed to be CC-BY-NC-SA.

I suggest looking at how Wikimedia Commons has implemented this: the contributor states the source and license at the time the data is uploaded. This can be done either by providing URLs or by saying "It's my work and I choose this license".

The first step could be to add a way to keep track of each filepath in SVN, author, license, sources. Start by setting the value for each file's license to "(default/legacy CC-BY-NC-SA)".

Possible implementations for a user interface for new additions are:
* Use our own wiki, which runs Mediawiki (same as Wikimedia Commons). I see several benefits of this, but we also need a way to accept uploads of batches, not just single files.
* Look at how other open source projects have solved this. There may be more appropriate solutions available.
... but I'll leave the implementation open. Suggestions are very welcome!

If the author of each file already in SVN can be tracked, then it may be possible that the author is willing to give a blanket permission for all their past files in one statement, and all their files in SVN can be updated in one commit. A productive contributor willing to release some of their work under a more permissive license could make a big change.

If Dark Radiant supported letting mappers search media/gamedata by license (does it already?), it would be easier for mappers to create a completely libre mission, which would help facilitate a TDM-libre release. If I understand things correctly.

This post does not address all details and it may contain misunderstandings or assumptions, but it's a start.

Also relevant:
* Is there a compiled and maintained list of recommended or deprecated resources for mappers to use?
* https://forums.thedarkmod.com/index.php?/topic/20311-external-art-assets-licensing/
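To make the tracking idea concrete, here is a minimal sketch of what a per-file record, a blanket relicensing by author, and a search by license might look like. Everything in it is hypothetical: the record fields simply mirror the four items named above (filepath, author, license, sources), the function names are invented for illustration, and nothing here reflects how TDM's SVN or DarkRadiant actually store metadata.

```python
from dataclasses import dataclass, field

# Default value proposed in the post for files whose license was never stated.
DEFAULT_LICENSE = "(default/legacy CC-BY-NC-SA)"


@dataclass
class AssetRecord:
    """One tracked file: path in the repository, author, license, sources."""
    path: str
    author: str
    license: str = DEFAULT_LICENSE
    sources: list = field(default_factory=list)


def relicense_by_author(records, author, new_license):
    """Apply one blanket statement to all of an author's files; return the count changed."""
    changed = 0
    for record in records:
        if record.author == author:
            record.license = new_license
            changed += 1
    return changed


def find_by_license(records, license_name):
    """Return the paths of all files under the given license."""
    return [record.path for record in records if record.license == license_name]
```

In use, every existing file would start out as an `AssetRecord` with the default license; one call to `relicense_by_author` would then correspond to a contributor's single blanket statement, and `find_by_license` is the kind of query a mapper would need when assembling a fully libre mission.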

-

Regarding the existing Russian version of TDM's MasonAlternative font, this had a different origin than those Russian fonts processed by Keeper_Riff. Tels created this in 2012. He started from bitmaps of an ASCII Mason font, then used his Perl patch program to copy selected ASCII glyphs (those that in some way resemble Cyrillic) to the new font "MasonAlternative". See https://forums.thedarkmod.com/index.php?/topic/12863-translating-the-tdm-gui/page/15/#findComment-274617 In GIMP, he flipped or otherwise hand-edited them to make them Cyrillic. He said, "There are still a few dozen missing, but this is enough to render the two headlines we have (New Mission and Setting)" https://forums.thedarkmod.com/index.php?/topic/12863-translating-the-tdm-gui/page/15/#findComment-274623 This accounts for the incomplete coverage. Speculatively, he took this approach because he couldn't find a Mason-style TTF font with both Russian characters and an acceptable license (e.g., public domain, or at the least freely redistributable for non-commercial use). @kalinovka, I wonder what the licensing is for your masonchronicles3.ttf.

-

Evidently a significant portion of the Cyrillic work was done by Keeper_Riff (in conjunction with Tels) back in 2011. These folks are not active in TDM these days. Keeper_Riff outlined a workflow, starting with FontLab to edit TTF files... https://forums.thedarkmod.com/index.php?/topic/12863-translating-the-tdm-gui/page/12/#findComment-271548 Specifically Carleton: https://forums.thedarkmod.com/index.php?/topic/12863-translating-the-tdm-gui/page/4/#findComment-262135

-

@kalinovka, probably no quick solution. I imagine, with a font editor that reads/writes TTFs, you could relocate the Cyrillic down to the 0-255 range in a custom TTF, which could then be processed by ExportFont3All256. Or, if you know C++, you could make a variant version of ExportFontDoom3All256 with a different input range (both start and end) in the loop.* The wiki page contains a link to the source code for a Visual Studio build. In either case, you'd want to order the glyphs (or glyph processing) as Win-1251 (and TDM) expects, so the generated .DAT files would require minimal fixup.

* Specifically, you'd start with FontExporter.cpp, and in function FontExporter::export_, change the loop indices of:

    // Export all characters.
    for (int characterCode = 0; characterCode < Font::numCharactersToExport; characterCode++)
    ...

But if that was all it took, I'd be very surprised.
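To see what "ordering the glyphs as Win-1251 expects" means in practice, the byte-to-character table can be printed from Python's built-in cp1251 codec. This is only a sketch of the ordering itself, using a hypothetical helper name; it does not touch TTF files or the exporter, and whether TDM's Russian character map matches Windows-1251 at every position is taken from the remark above rather than verified here.

```python
def win1251_glyph_order():
    """Map each byte value 0-255 to the character Windows-1251 assigns to it.

    Byte values that the codec leaves unassigned map to None.
    """
    order = []
    for code in range(256):
        try:
            order.append(bytes([code]).decode("cp1251"))
        except UnicodeDecodeError:
            order.append(None)
    return order


def describe_slot(code):
    """Return a readable line such as '0xC0 -> U+0410' for one byte value."""
    char = win1251_glyph_order()[code]
    if char is None:
        return f"0x{code:02X} -> (unassigned)"
    return f"0x{code:02X} -> U+{ord(char):04X}"
```

For instance, `describe_slot(0xC0)` shows that byte 0xC0 holds U+0410 (Cyrillic capital A), so a relocated TTF or a modified export loop would need to place that glyph at index 0xC0 for the generated .DAT to line up.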

-

A Winter's Tale
By: Bikerdude

"One of the few pleasures I have as a man of the thieving profession is to return to my old home town for the holidays and relax in the company of friends and relatives. But a local lord has been going out of his way to make the lives of the locals miserable."

Notes:
- TDM 2.12 or later is REQUIRED to play this mission.
- This is my entry for the speedbuilding jam.
- This FM should play on the vast majority of systems. I have perf-tweaked this map with low-end players in mind, and have moved the global fog/moon lights to the 'better' LOD level.
- min recommended spec (as per beta testing): Intel Core i7 (3rd gen), nvidia 1030 4gb (GDDR5), will get you 60fps inside and 45fps outside.
- Various areas will look better with shadow maps enabled (SoftShadows set to medium/high, Shadows Softness set to zero and LOD set to 'better'), at the possible expense of performance depending on your system specs.
- this mission continues the imperial theme, with this being a border town slightly further out from the main imperial lands than Brouften.
- build time roughly 130hrs.

Download Link:
- (v1.4) - https://discord.com/channels/679083115519410186/1310012992867405855/1310022631415746632

v1.4 changes:

I have been tweaking the LOD levels throughout the map -
- Snow fall has been moved to LOD low/normal.
- world fog moved to LOD better.
- fireplace grills to LOD better.
- world moonlight moved to LOD high.

Other tweaks -
- reduced light counts in all the fireplaces.
- reduced the length of the looping menu video background.
- Some new assets

Gameplay:
- added more ways for the player to get around.
- tweaked existing routes to make some easier or more of a challenge.
- and added an additional optional objective.

Credits:

Special thanks go to -
- nbohr1more, fleshing out the briefing and creation of readables.
- Amadeus, help w/custom objectives, script work, proof reading, mission design & testing.
- Dragofer, for custom scripts and script work for the main objective.
- Baal, for additional tweaking of the main objective and script work.
- Beta testers: Amadeus, nbohr1more, Mat99, DavyJones, S1lverwolf, Baal & Dragofer.
- Freesound.org, for ambient tracks, further details in sndshd file.

Speed Jam Thread:
- https://www.ttlg.com/forums/showthread.php?t=152747

- 33 replies

-

Quite recently, I switched to a laptop with a 240 Hz display. While I don't see that much improvement beyond the 120 Hz/FPS threshold (maybe it's the age and reaction time thing), I do like the improved latency quite a bit. I know it's not that relevant in a stealth game with slower-paced gameplay, but it's just nice how smoothly the game responds to input.

-

help [SOLVED] No sound on the native linux version

Nachzehrer replied to Nachzehrer's topic in TDM Tech Support

aplay -L

null
    Discard all samples (playback) or generate zero samples (capture)
sysdefault
    Default Audio Device
speexrate
    Rate Converter Plugin Using Speex Resampler
pipewire
    PipeWire Sound Server
upmix
    Plugin for channel upmix (4,6,8)
default
    Default ALSA Output (currently PipeWire Media Server)
sysdefault:CARD=Controller
    DualSense Wireless Controller, USB Audio
    Default Audio Device
front:CARD=Controller,DEV=0
    DualSense Wireless Controller, USB Audio
    Front output / input
surround21:CARD=Controller,DEV=0
    DualSense Wireless Controller, USB Audio
    2.1 Surround output to Front and Subwoofer speakers
surround40:CARD=Controller,DEV=0
    DualSense Wireless Controller, USB Audio
    4.0 Surround output to Front and Rear speakers
surround41:CARD=Controller,DEV=0
    DualSense Wireless Controller, USB Audio
    4.1 Surround output to Front, Rear and Subwoofer speakers
surround50:CARD=Controller,DEV=0
    DualSense Wireless Controller, USB Audio
    5.0 Surround output to Front, Center and Rear speakers
surround51:CARD=Controller,DEV=0
    DualSense Wireless Controller, USB Audio
    5.1 Surround output to Front, Center, Rear and Subwoofer speakers
surround71:CARD=Controller,DEV=0
    DualSense Wireless Controller, USB Audio
    7.1 Surround output to Front, Center, Side, Rear and Woofer speakers
iec958:CARD=Controller,DEV=0
    DualSense Wireless Controller, USB Audio
    IEC958 (S/PDIF) Digital Audio Output
hdmi:CARD=HDMI,DEV=0
    HDA ATI HDMI, HDMI 0
    HDMI Audio Output
hdmi:CARD=HDMI,DEV=1
    HDA ATI HDMI, HDMI 1
    HDMI Audio Output
hdmi:CARD=HDMI,DEV=2
    HDA ATI HDMI, HDMI 2
    HDMI Audio Output
hdmi:CARD=HDMI,DEV=3
    HDA ATI HDMI, HDMI 3
    HDMI Audio Output
hdmi:CARD=HDMI,DEV=4
    HDA ATI HDMI, HDMI 4
    HDMI Audio Output
hdmi:CARD=HDMI,DEV=5
    HDA ATI HDMI, HDMI 5
    HDMI Audio Output
sysdefault:CARD=Generic
    HD-Audio Generic, ALC887-VD Analog
    Default Audio Device
front:CARD=Generic,DEV=0
    HD-Audio Generic, ALC887-VD Analog
    Front output / input
surround21:CARD=Generic,DEV=0
    HD-Audio Generic, ALC887-VD Analog
    2.1 Surround output to Front and Subwoofer speakers
surround40:CARD=Generic,DEV=0
    HD-Audio Generic, ALC887-VD Analog
    4.0 Surround output to Front and Rear speakers
surround41:CARD=Generic,DEV=0
    HD-Audio Generic, ALC887-VD Analog
    4.1 Surround output to Front, Rear and Subwoofer speakers
surround50:CARD=Generic,DEV=0
    HD-Audio Generic, ALC887-VD Analog
    5.0 Surround output to Front, Center and Rear speakers
surround51:CARD=Generic,DEV=0
    HD-Audio Generic, ALC887-VD Analog
    5.1 Surround output to Front, Center, Rear and Subwoofer speakers
surround71:CARD=Generic,DEV=0
    HD-Audio Generic, ALC887-VD Analog
    7.1 Surround output to Front, Center, Side, Rear and Woofer speakers
iec958:CARD=Generic,DEV=0
    HD-Audio Generic, ALC887-VD Digital
    IEC958 (S/PDIF) Digital Audio Output
sysdefault:CARD=Wireless
    Arctis Pro Wireless, USB Audio
    Default Audio Device
front:CARD=Wireless,DEV=0
    Arctis Pro Wireless, USB Audio
    Front output / input
surround21:CARD=Wireless,DEV=0
    Arctis Pro Wireless, USB Audio
    2.1 Surround output to Front and Subwoofer speakers
surround40:CARD=Wireless,DEV=0
    Arctis Pro Wireless, USB Audio
    4.0 Surround output to Front and Rear speakers
surround41:CARD=Wireless,DEV=0
    Arctis Pro Wireless, USB Audio
    4.1 Surround output to Front, Rear and Subwoofer speakers
surround50:CARD=Wireless,DEV=0
    Arctis Pro Wireless, USB Audio
    5.0 Surround output to Front, Center and Rear speakers
surround51:CARD=Wireless,DEV=0
    Arctis Pro Wireless, USB Audio
    5.1 Surround output to Front, Center, Rear and Subwoofer speakers
surround71:CARD=Wireless,DEV=0
    Arctis Pro Wireless, USB Audio
    7.1 Surround output to Front, Center, Side, Rear and Woofer speakers
iec958:CARD=Wireless,DEV=0
    Arctis Pro Wireless, USB Audio
    IEC958 (S/PDIF) Digital Audio Output
iec958:CARD=Wireless,DEV=1
    Arctis Pro Wireless, USB Audio #1
    IEC958 (S/PDIF) Digital Audio Output

Double topic. See also topic: https://forums.thedarkmod.com/index.php?/topic/22754-thief-vr-legacy-of-shadow-announcement/

-

I am having a hard time searching for a specific keyword in the bug tracker, so I decided to wade into Google search and found a similar thread with a similar issue: https://forums.thedarkmod.com/index.php?/topic/13723-key-drop-melee-animation-glitch/

-

Websites prove their identity via certificates, which are valid for a set time period. The certificate for forums.thedarkmod.com expired on 10/18/2024. Error code: SEC_ERROR_EXPIRED_CERTIFICATE

-

Or, just get in contact with the owners of the IP and ask them, since just because an IP lawyer in one jurisdiction says it would be okay doesn't mean it would be okay globally. There are several packs out there of scripts/definition files which are licensed under free licences (CC0 and WTFPL mostly) and claim their freedom on the grounds of having been recreated in a "clean-room way". In fact, I used them in getting TDM running with mostly free-licensed files, by selectively choosing which had clearly been written from scratch, albeit with reference to the originals, and which were just direct copy-and-paste with claimed free licences (I didn't use those). Then it was a case of finding/replacing the core textures, sounds, etc. that the engine required to launch with free alternatives (again checking the packs, since some were just copied directly from the game files while claiming to be free). In the end, the only non-free-licensed files that were still required were those from TDM itself. The result of this TDM version is the screenshot I posted some posts ago here: https://forums.thedarkmod.com/index.php?/topic/22346-libre-version-of-tdm/page/3/#findComment-500642 However, you will notice the giant cursor on the screen in the screenshot. Why? Because the only reference to hiding the cursor is within the UI files, which come under the game EULA, so I didn't add in the command to hide it in game. In the case of the script/def files, this "clean-room" approach has stood up in a court of law, as far as I could find when I looked online; however, you wouldn't really want to end up in court defending yourself in the first place. The flaw in the defs/scripts that were recreated is that their authors all wrote their files using the originals as reference. So if the originals are under the game EULA, and if the information contained is in some way protected, then all these "clean-room" files revert to the original game EULA, as the authors didn't have the right to change the licence.
I believe (with a pinch of salt) that if the core scripts/files were made GPL by id Software/Microsoft, then all of the TDM scripts and def files, being based on them or using them as parents (basically all TDM scripts/defs, as far as I can make out), would automatically become GPL too, as their authors could no longer claim their work was NC-BY since it would then be based on GPL work.

-

Mandrasola is a small-sized map in which aspiring thief Thomas Porter steals some herbal products from a smuggler. The mission was created by me, Sotha, and I wish to thank Bikerdude, BrokenArts and Ocn for playtesting and voice acting. Thanks naturally go to everyone contributing and making TDM possible. This mission occurs chronologically before The Knighton's Manor, making it the first mission in the Thomas Porter series. Events in chronological order are: Mandrasola, The Knighton's Manor, The Beleaguered Fence, The Glenham Tower and The Transaction. The winter came early and suddenly this year. Weeks of strong blizzards and extremely harsh cold weather hit Bridgeport hard. With the seas completely frozen, a rare occurrence indeed, most of the City harbor commerce has stopped completely. Vessels are stuck in the ice and no ship can leave or enter the City, resulting in the availability of imported goods declining and their prices skyrocketing. One of these imported items is Mandrasola, a rare herbal product, which is imported overseas from the far southern continents. Mandrasola has its uses in alchemical cures and poisons, but mostly this substance is used for its narcotic qualities by commoners and even the nobility. The problem with Mandrasola is that excessive use is extremely addicting and the withdrawal effects are most grievous. Many are utterly incapable of stopping using Mandrasola and are transformed into quivering human ruins if they do not get their daily dose. And now this expensive and rare substance is running out from the whole City. Me and my fence, Lark Butternose, would love to grab this monopoly to ourselves: selling the last few doses in the City would probably be worth a fortune. According to Lark's sources, there remains only one smuggling lord who still has Mandrasola in stock. The problem is that this individual maintains an exclusive clandestine operation and only supplies a few nobles. 
Despite our best information gathering efforts we couldn't learn who the smuggler is and where he or she operates. Luckily we have an alternate plan. While searching for Mandrasola related information, we learned that a noblewoman called Lady Ludmilla is addicted to the substance and has paid high prices for small amounts of it. We also know that she has frequently visited someone in the Tanner's Ward waterfront, and since she goes to the area personally we believe she is visiting the smuggler. The plan is simple: I must monitor Ludmilla's most likely entryway to the Waterfront and then follow her to the smuggler's hideout. I'd better be very careful around Ludmilla. She must not realise I'm following her or she probably won't lead me to her dealer. Hurting her is also out of the question. After she leads me to the smuggler's hideout, I can take my time to break in carefully and steal all the Mandrasola I can find. While I'm there it wouldn't be a bad idea to grab some loose valuables as well. I've now waited in the blistering cold for a few hours already. Looks like there are a few city watch patrols in the area to complicate matters... I think I heard a woman's voice beyond the north gate. That must be Lady Ludmilla, I haven't seen many ladies in these parts. I'd better get ready.. Links: Use the ingame downloader to get it. WARNING! Someone always fails to use spoiler tags. I do not recommend reading any further until you've played the mission.

-

Hi folks, and thanks so much to the devs & mappers for such a great game. After playing a bunch over Christmas week after a gap of many years, I got curious about how it all went together, and decided to learn by picking a challenge - specifically, when I looked at scripting, I wondered how hard it would be to add library calls, for functionality that would never be in core, in a not-completely-hacky way. Attached are a few rough example scripts - one which runs a pluggable webserver, one which logs anything you pick up to a webpage, and one which does text-to-speech and has a Phi2 LLM chatbot ("Borland, the angry archery instructor"). The last is gimmicky, and takes 20-90s to generate responses on my i7 CPU while TDM runs, but if you really wanted something like this, you could host it and just do API calls from the process. The Piper text-to-speech is much more potentially useful IMO. Thanks to snatcher, whose Forward Lantern and Smart Objects mods helped me pull example scripts together. I had a few other ideas in mind, like custom AI path-finding algorithms that could not be fitted into scripts, math/data algorithms, statistical models, or video generation/processing, etc., but I'm really interested if anyone has ideas for use-cases.

TL;DR: the upshot was a proof-of-concept, where PK4s can load new DLLs at runtime, scripts can call them within and across PK4s using "header files", and TDM scripting was patched with some syntax to support discovery and making matching calls, with proper script-compile-time checking.

Why? Mostly curiosity, but also because I wanted to see what would happen if scripts could use text-to-speech and dynamically-defined sound shaders. I also could see that simply hard-coding it into a fork would not be very constructive or enlightening, so I tried to pick a paradigm that fits (mostly) with what is there. 
In short, I added a Library idClass (that definitely needs work) that will instantiate a child Library for each PK4-defined external lib, each holding an eventCallbacks function table of callbacks defined in the .so file. This almost follows the idClass::ProcessEventArgsPtr flow normally. As such, the so/DLL extensions mostly behave as sys event calls in scripting. Critically, while I have tried to limit function reference jumps and var copies to almost the same count as the comparable sys event calls, this is not intended for performance critical code - more things like text-to-speech that use third-party libraries and are slow enough to need their own (OS) thread. Why Rust? While I have coded for many years, I am not a gamedev or modder, so I am learning as I go on the subject in general - my assumption was that this is not already a supported approach due to stability and security. It seems clear that you could mod TDM in C++ by loading a DLL alongside and reaching into the vtable, and pulling strings, or do something like https://github.com/dhewm/dhewm3-sdk/ . However, while you can certainly kill a game with a script, it seems harder to compile something that will do bad things with pointers or accidentally shove a gigabyte of data into a string, corrupt disks, run bitcoin miners, etc. and if you want to do this in a modular way to load a bunch of such mods then that doesn't seem so great. 
So, I thought "what provides a lot of flexibility, but some protection against subtle memory bugs", and decided that a very basic Rust SDK would make it easy to define a library extension as something like:

    #[therustymod_lib(daemon=true)]
    mod mod_web_browser {
        use crate::http::launch;

        async fn __run() {
            print!("Launching rocket...\n");
            launch().await
        }

        fn init_mod_web_browser() -> bool {
            log::add_to_log("init".to_string(), MODULE_NAME.to_string()).is_ok()
        }

        fn register_module(name: *const c_char, author: *const c_char, tags: *const c_char, link: *const c_char, description: *const c_char) -> c_int {
            ...

and then Rust macros can handle mapping return types to ReturnFloat(...) calls, etc. at compile-time rather than having to add layers of function call indirection. Ironically, I did not take it as far as building in the unsafe wrapping/unwrapping of C/C++ types via the macro, so the addon writer then has to write unsafe calls to take *const c_char to string and v.v. However, once that's done, the events can then call out to methods on a singleton and do actual work in safe Rust. While these functions correspond to dynamically-generated TDM events, I do not let the idClass get explicitly leaked to Rust, to avoid overexposing the C++ side, so they are class methods in the vtable only to fool the compiler and not break Callback.cpp.

For the examples in Rust, I was moving fast to do a PoC, so they are not idiomatic Rust and there is little error handling, but like a script, when it fails, it fails explicitly, rather than (normally) in subtle user-defined C++ buffer overflow ways. Having an always-running async executor (tokio) lets actual computation get shipped off fast to a real system thread, and the TDM event calls return immediately, with the caller able to poll for results by calling a second Rust TDM event from an idThread. 
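To make the two points above concrete (unwrapping `*const c_char` by hand, and returning at once while a thread does the work), here is a minimal sketch. It is not the SDK's code: the names `submit_job`, `poll_job` and `free_result` are hypothetical, plain `std::thread` stands in for the tokio executor the post describes, and the upper-casing is a placeholder for slow work such as text-to-speech.

```rust
use std::collections::HashMap;
use std::ffi::{c_char, c_int, CStr, CString};
use std::sync::atomic::{AtomicI32, Ordering};
use std::sync::{Mutex, OnceLock};
use std::thread;

// Hypothetical job table: job id -> result (None while the job is still running).
static JOBS: OnceLock<Mutex<HashMap<c_int, Option<String>>>> = OnceLock::new();
static NEXT_ID: AtomicI32 = AtomicI32::new(1);

fn jobs() -> &'static Mutex<HashMap<c_int, Option<String>>> {
    JOBS.get_or_init(|| Mutex::new(HashMap::new()))
}

/// Hypothetical "start" event. Copies the C string into an owned String,
/// hands the work to an OS thread and returns a job id immediately.
/// Returns -1 for a null pointer or invalid UTF-8.
/// (A real shared library would also export this under an unmangled name.)
pub unsafe extern "C" fn submit_job(text: *const c_char) -> c_int {
    if text.is_null() {
        return -1;
    }
    // SAFETY: the caller promises `text` points at a valid NUL-terminated string.
    let owned = match unsafe { CStr::from_ptr(text) }.to_str() {
        Ok(s) => s.to_owned(),
        Err(_) => return -1,
    };
    let id = NEXT_ID.fetch_add(1, Ordering::SeqCst);
    jobs().lock().unwrap().insert(id, None);
    thread::spawn(move || {
        let result = owned.to_uppercase(); // placeholder for the slow work
        jobs().lock().unwrap().insert(id, Some(result));
    });
    id
}

/// Hypothetical "poll" event. Returns null while the job is running (or the
/// id is unknown); otherwise a C string the caller must pass to `free_result`.
pub extern "C" fn poll_job(id: c_int) -> *mut c_char {
    let mut table = jobs().lock().unwrap();
    if !matches!(table.get(&id), Some(Some(_))) {
        return std::ptr::null_mut();
    }
    let text = table.remove(&id).flatten().unwrap();
    CString::new(text)
        .map(CString::into_raw)
        .unwrap_or(std::ptr::null_mut())
}

/// Gives a string returned by `poll_job` back to Rust so it can be freed.
pub unsafe extern "C" fn free_result(ptr: *mut c_char) {
    if !ptr.is_null() {
        // SAFETY: `ptr` came from `CString::into_raw` in `poll_job`.
        drop(unsafe { CString::from_raw(ptr) });
    }
}
```

The only unsafe parts are the pointer conversions at the boundary; everything between them is ordinary safe Rust, which is the split the post is aiming for.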
As an example of a (synchronous) Rust call in a script:

    extern mod_web_browser {
        void init_mod_web_browser();
        boolean do_log_to_web_browser(int module_num, string log_line);
        int register_module(string name, string author, string tags, string link, string description);
        void register_page(int module_num, bytes page);
        void update_status(int module_num, string status_data);
    }

    void mod_grab_log_init() {
        boolean grabbed_check = false;
        entity grabbed_entity = $null_entity;
        float web_module_id = mod_web_browser::register_module(
            "mod_grab_log",
            "philtweir based on snatcher's work",
            "Event,Grab",
            "https://github.com/philtweir/therustymod/",
            "Logs to web every time the player grabs something."
        );

On the verifiability point, both as there are transpiled TDM headers and to mandate source code checkability, the SDK is AGPL.

What state is it in? The code goes from early-stage but kinda (hopefully) logical - e.g. what's in my TDM fork - through to basic - what's in the SDK - through to rough - what's in the first couple of examples - through to hacky - what's in the fun stretch-goal example, with an AI chatbot talking on a dynamically-loaded sound shader (see below). The important bit is the first, the TDM approach, but I did not see much point in refining it too far without feedback or a proper demonstration of what this could enable. Note that the TDM approach does not assume Rust, I wanted that as a baseline neutral thing - it passes out a short set of allowed callbacks according to a .h file, so any language that can produce dynamically-linkable objects should be able to hook in.

What functionality would be essential but is missing?

- support for anything other than Linux x86 (but I use TDM's dlsym wrappers, so it should not be a huge issue if the type sizes, etc. match up)
- ability to conditionally call an external library function (the dependencies can be loaded out of order and used from any script, but now every referenced callback needs to be in place with matching signatures by the time the main load sequence finishes or it will complain)
- packaging a .so+DLL into the PK4, with verification of source and checksum
- tidying up the Rust SDK to be less brittle and (optionally) transparently manage pre-Rustified input/output types
- some way of semantic-versioning the headers and (easily) maintaining backwards compatibility in the external libraries
- right now, a dedicated .script file has to be written to define the interface for each .so/DLL - this could be dynamic via an autogenerated SDK callback to avoid mistakes
- maintaining any non-disposable state in the library seems like an inherently bad idea, but perhaps Rust-side Save/Restore hooks
- any way to pass entities from a script, although I'm skeptical that this is desirable at all

One of the most obvious architectural issues is that I added a bytes type (for uncopied char* pointers) in the scripting to be useful - not for the script to interact with directly but so that, for instance, a lib can pass back a Decl definition that can be held in a variable until the script calls a subsequent (sys) event call to parse it straight from memory. That breaks a bunch of assumptions about event arguments, I think, and likely save/restore. Keen for suggestions - making indexed entries in a global event arg pointer lookup table, say, that the script can safely pass about? Adding CreateNewDeclFromMemory to the exposed ABI instead?

While I know that there is no network play at the moment, I also saw somebody had experimented and did not want to make that harder, so I'm also conscious that it would need thinking about. 
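As one possible reading of the "indexed entries in a lookup table" idea floated above for the bytes type, the library side could own the buffers and hand the script nothing but an integer. This is only a sketch under that assumption: `BytesTable` and its methods are hypothetical names, not part of the fork or the SDK, and it says nothing about how save/restore should treat outstanding handles.

```rust
use std::collections::HashMap;

/// Hypothetical table that owns byte buffers and hands out integer handles,
/// so a script only ever passes a plain number around instead of a pointer.
pub struct BytesTable {
    next: u32,
    entries: HashMap<u32, Vec<u8>>,
}

impl BytesTable {
    pub fn new() -> Self {
        // Handle 0 is never issued, so it can serve as the "invalid" value.
        Self { next: 1, entries: HashMap::new() }
    }

    /// Stores a buffer and returns the handle that refers to it.
    pub fn insert(&mut self, data: Vec<u8>) -> u32 {
        let handle = self.next;
        self.next += 1;
        self.entries.insert(handle, data);
        handle
    }

    /// Borrows the buffer behind a handle; None if the handle is stale or unknown.
    pub fn get(&self, handle: u32) -> Option<&[u8]> {
        self.entries.get(&handle).map(Vec::as_slice)
    }

    /// Removes the buffer and returns ownership, e.g. once it has been parsed.
    pub fn take(&mut self, handle: u32) -> Option<Vec<u8>> {
        self.entries.remove(&handle)
    }
}
```

A stale or made-up handle then fails with `None` rather than dereferencing freed memory, which is the property a raw char* argument cannot give.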
One maybe interesting idea for a two-player stealth mode could be a player-capturable companion to take across the map, like a capture-the-AI-flag, and pluggable libs might help with adding statistical models for logic and behaviour more easily than scripts, so I can see ways dynamic libraries and multiplayer would be complementary if the technical friction could be resolved. Why am I telling anybody? I know this would not remotely be mergeable, and everyone has bigger priorities, but I did wonder if the general direction was sensible. Then I thought, "hey, maybe I can get feedback from the core team if this concept is even desirable and, if so, see how long that journey would be". And here I am. [EDITED: for some reason I said "speech-to-text" instead of "text-to-speech" everywhere the first time, although tbh I thought both would be interesting]

- 25 replies

-

- 3

-