Search the Community

Searched results for '/tags/forums/limit/' or tags 'forums/limit/q=/tags/forums/limit/&'.

-

As for the clever way of getting the light angles for proper bump mapping: normally (at least in the old days, before shaders etc.) you had to use per-pixel lighting to get proper moving light sources. Quake did not support anything but ambient lighting at the time, which meant static light sources, so some of us got crafty and hacked together real entity lights using per-poly collision as a model. The result looked insane, but it was really, I mean REALLY, heavy to run (tbh I doubt any card today could run it at 4K resolutions without going up in smoke and embers, no, not even the 4090 Ti). This was of course not nice, so we brainstormed for some time and came up with the idea of using the player's position to calculate the light offset. The result was quite a game changer at the time: where the old code could barely run at 30 fps at 800x600, the new code could run at several hundred frames per second at 1980x1200 on a GeForce 3. I tried out the old engine on my current 1080 Ti card, and I actually had to limit the framerate, it ran that fast.

-

Can somebody fix the main page of our site? http://forums.thedarkmod.com/topic/19469-new-layout-error/

-

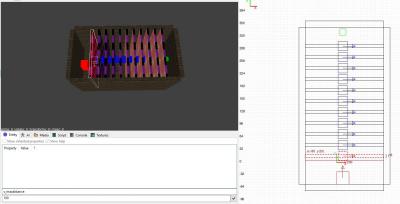

It says the poly limit is 5000 polys. Does that mean 5000 quads are OK, or does it actually mean 5000 tris is the limit? And how hard is the 5000 poly limit?
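For the quads-versus-tris arithmetic behind this question: a quad splits into two triangles, and a convex polygon with n sides splits into n - 2 triangles. The sketch below only shows that conversion. The post does not say which unit the limit is counted in, and `triangle_count` is a hypothetical helper name.

```python
def triangle_count(polygon_sides):
    """Return how many triangles a set of polygons yields once triangulated.

    polygon_sides: iterable of side counts, one per polygon (3 = tri, 4 = quad).
    """
    total = 0
    for sides in polygon_sides:
        if sides < 3:
            raise ValueError("a polygon needs at least 3 sides")
        # A convex n-sided polygon fan-triangulates into n - 2 triangles.
        total += sides - 2
    return total


# A model built from 5000 quads becomes 10000 triangles.
print(triangle_count([4] * 5000))
```

So if the limit were counted in triangles, a 5000-quad model would be twice over it.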

-

Experimenting with TDM on Steam Link on Android. see topic http://forums.thedarkmod.com/topic/19432-tdm-on-steam-link-for-android/

-

I understand, but you do lose volumetric effects with stencil, though only the really recent missions have volumetric lights. Btw, in reality there's a big difference between what shadow maps are capable of and what stencil shadows are capable of. Unfortunately, because of backwards compatibility with old missions and the fact that for a long time only stencil was available, the TDM team had to limit shadow mapping capabilities mostly to what stencil can do.

-

id Studio did a poor job in defining its categorization of variable nomenclature, so in subsequent documentation and discussions there are divergent views (or just slop). In my series, I had to choose something, and went with what I thought would be clearest for the GUI programmer: Properties, which are either Registers (like true variables) or Non-registers (like tags); and User Variables (also true variables). I see that your view is more along these lines (which perhaps reflects C++ internals?): Flags (like my non-registers); and Properties, which are either Built-in (like my registers) or Custom (like user Variables). Also, elsewhere, you refer to "registers" as temporaries. I am willing to consider that there could be temporary registers during expression evaluation, but by my interpretation those would be in addition to named property registers. I'm not sure where to go next with this particular aspect, but at least can state it.

-

Thief4 trainer with NOCLIP mode - http://forums.thedarkmod.com/topic/16001-thief-4-tweaks-fixes/page-7?do=findComment&comment=420152

-

Hey guys, TL;DR can a sound travel more than 10 visportals? I'm working on a map and visportals aren't working how I would like them to. To illustrate my problem I've made a test map. A speaker with s_maxdistance set to 100, and 11 visportals; embedded in each of those an info_portalsettings with sound_loss set to 0. My problem is that the player, at map start, doesn't hear anything; the visportal closest to him completely blocks sounds. So my only possible assumption given the little that I know about DM/DR is that there's a limit to the number of visportals a sound can travel, and that limit is 10. So then my question is, am I missing something, or doing something wrong, or is this a limitation? And if so, could someone give me an idea for a workaround? To put this problem in a bit of context, think about the "Down In The Bonehoard" mission where you could hear the Horn more or less across the map. I want to make maps where audio plays a key role, in particular for navigation of especially winding, maze-like level geometry. So for example, given a choice of n corridors, walking down some the sound would get louder as those paths are ultimately connected to the source of the sound, while walking down others the sound would get quieter as those paths are ultimately not connected to the source of the sound, which is only coming from "behind", as it were. Of course, new problems and questions would arise. Like how do I carry one particular sound across the map through twists and turns, ups and downs, while having all other sounds behave, i.e. not travel across the map, i.e. get attenuated more by visportals. But, before all this, I have to know, what's with this unexpected 10 visportal sound propagation limit? Help, please. Thanks.

-

The *DOOM3* shaders are ARB2 ('cause of old GeForce support). See "carmack plan + arb2" (OpenGL / OpenGL: Advanced Coding, Khronos Forums).

-

Voice actors needed - details in beta topic: http://forums.thedarkmod.com/topic/19360-proofreading-and-voice-actors-needed-for-fm/?p=419500

-

I'm of the same opinion in that I generally dislike KO-limitations on missions. One could argue that the solution is to just play the easier skill level(s) that doesn't have that restriction, but unfortunately this tends to come with the baggage of also requiring less of the player in terms of loot limits and possibly even additional objectives that you'd be happy to do on the higher difficulties if it weren't for that KO limit. On the other hand, my stubbornness for playing on the hardest difficulty level means I have to deal with these missions that, due to the KO limit, essentially force ghosting. And you know what? I actually became a better player of TDM by being put in a situation where I basically had to learn how to ghost properly, take my time and so on, because I couldn't KO or kill like I wanted to. If those limits were never there, I guarantee you I'd never have developed decent ghosting skills because it's frankly much harder. But now, even on missions that don't need as much ghosting as others, I'm still better at playing TDM because of those skills gained from missions which forced my hand. I'm not sure if this helps when it comes to the topic of quicksaves, but I just wanted to address how limits can sometimes help the player because of how it forces them to get out of their comfort zone and deal with the new problem.

-

People who use Blender for object editing sometimes run into a problem with material names. It has a character limit of 63. That's usually fine, but some existing TDM materials have names which are longer than that, so it becomes impossible to use them. An FM author can make a copy of the material with a shorter name, but that might be adding unnecessary complexity for people who are just making standalone objects to share. I've been mounting a valiant campaign on various Blender forums, and some of their LinkedIn posts, to get them to increase the limit, but to no avail. So it's time to take a different approach. Would it be feasible for TDM to rename long materials? The rendering system would have to intercept and replace calls to the original names, or something like that. I'm not sure if that would be an easy thing to implement or if it would set off a chain of complex events or coding etc. Another possible approach could be a material ID system, so in Blender the material name could be WoodPlanks_4ACFB987B, which would correspond to something like TDM\long\path\to\material\WoodPlanks. That might even be beneficial for shorter material names, as even they are not user friendly to look at in some interfaces.
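A minimal sketch of how such a material ID scheme could look, assuming the short name is the last path component plus a 9-character hash suffix (the same shape as the WoodPlanks_4ACFB987B example). The function names, the choice of SHA-1, and the lookup table are all hypothetical; nothing here reflects how TDM or Blender actually handle names.

```python
import hashlib

BLENDER_NAME_LIMIT = 63  # character limit quoted in the post


def short_material_name(full_name, hash_len=9):
    """Build a short, stable alias such as 'WoodPlanks_<HASH>' for a long name."""
    # Use the last path component as the readable part of the alias.
    base = full_name.replace("\\", "/").rsplit("/", 1)[-1]
    # Hash the full name so two materials with the same base stay distinct.
    digest = hashlib.sha1(full_name.encode("utf-8")).hexdigest()[:hash_len].upper()
    # Truncate the readable part so the alias never exceeds the limit.
    keep = BLENDER_NAME_LIMIT - hash_len - 1
    return f"{base[:keep]}_{digest}"


def build_lookup(full_names):
    """Map each short alias back to its full material name."""
    table = {}
    for name in full_names:
        alias = short_material_name(name)
        if table.get(alias, name) != name:
            raise ValueError(f"alias collision on {alias}")
        table[alias] = name
    return table


full = "TDM\\long\\path\\to\\material\\WoodPlanks"
alias = short_material_name(full)
print(alias, "->", build_lookup([full])[alias])
```

The lookup table is the part the game side would need in order to translate an alias back to the original material name.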

-

The update to subtitles for The Lord vocal set ("Lord2") is now available, that makes use of the new -dx (durationExtend) feature of 2.12dev:

testSubtitlesLord2.pk4

Corresponding Excel file - TheLord2Subtitles.xlsx

As usual, the testing FM is used as the vehicle to distribute this update. Out of 390 subtitles (all of which are inline, no srt needed), the changes are as follows.

Durations Extended

67 times, -dx was used to pad duration between 0.25 and 0.50 second (instead of default 0.20), calculated based on a presentation rate of 17 cps. The value of 0.50 was set as a cap by policy. When reached, it leads to a presentation rate higher than 17 cps. The cap is ignored if the presentation rate reaches the quite fast 20 cps. For Lord2, this occurred once (-dx 0.61, a value calculated for 20 cps presentation rate). This particular subtitle now is verbatim, unlike the original Lord subtitle release, where the text was shortened.

Also for presentation rate reasons, leading optional "(...)" descriptors were dropped or shortened (1 case of each).

In the spreadsheet, additional columns were added to help in the foregoing calculations. This will be documented when The Wench is released.

Style Issues

Capitalize leading 'Tis or 'Twas 14 times. Replace [...] with (...) for descriptors.

Improvements for testSubtitles... FMs

This applies to Lord2, forthcoming Thug2 and Wench releases, and later.

In-game, the "Prev", "Again", and "Next" buttons are now twinned with a group of 3 more buttons labeled "Same but Quiet". As requested by @datiswous, these affect the cursub numeric counter without either playing an audio sound or showing its subtitle.

The cursub counter (as an HUD overlay) shows itself more consistently, thanks to an implementation hack.

Each subtitle appears in a field that is now Geep-standardized to a half-screen wide, compatible with a 42-char/line limit. This is done with an override of tdm_subtitles_common.gui, based upon a customization of TDM 2.11 code. (So it does not include visual indicators of AI location or speech volume found in recent 2.12dev's tdm_subtitles_common.gui).
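The duration-extension policy described above (17 cps target, 0.50 s cap, cap ignored at 20 cps) can be sketched roughly as follows. This is one reading of the post, not the spreadsheet's actual formulas: the function name is hypothetical, the character count is assumed to be the full subtitle text length, and any rounding of the result is left out.

```python
DEFAULT_EXTEND = 0.20  # default padding in seconds, per the post
TARGET_CPS = 17.0      # presentation rate the padding aims for
CAP_EXTEND = 0.50      # policy cap on the padding
MAX_CPS = 20.0         # rate at which the cap is ignored


def duration_extend(char_count, audio_seconds):
    """Return the padding (seconds) to add after the audio ends."""
    # Padding needed so the text is shown at no more than the target rate.
    needed = char_count / TARGET_CPS - audio_seconds
    if needed <= DEFAULT_EXTEND:
        return DEFAULT_EXTEND
    if needed <= CAP_EXTEND:
        return needed
    # Capped: the rate now exceeds 17 cps, which is accepted below 20 cps.
    if char_count / (audio_seconds + CAP_EXTEND) < MAX_CPS:
        return CAP_EXTEND
    # Too fast even with the cap: pad just enough to reach 20 cps.
    return char_count / MAX_CPS - audio_seconds


# Hypothetical subtitle: 50 characters over 1.89 s of audio.
print(round(duration_extend(50, 1.89), 2))
```

With those made-up inputs the cap would still leave the rate above 20 cps, so the padding is computed for 20 cps instead, which is the kind of case the single -dx 0.61 entry describes.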

-

Why there should be restrictions on quicksaves

chakkman replied to marbleman's topic in The Dark Mod

Absolutely. The worst thing which could happen is that people won't play the mission. Which I will, if there's a general need to use save rooms. I also don't play missions which disallow KO's in normal or expert difficulty, because I find that kind of restriction ridiculous. I just find it a bit sad to even limit yourself further by disallowing things which will limit people from playing the way they are used to. If that makes sense to you. I think we will agree that this game is already niche. Why make it even more niche by implementing such pointless restrictions?

-

Thanks, I can also recommend gog galaxy. The idea of the custom tags is really nice, I'll have to try this out too!

-

Why are there no more new fan missions in the missions section ?

AluminumHaste replied to ^^artin's topic in Fan Missions

Keep in mind also that mission size and complexity have increased dramatically since the beginning. For a lot of veteran mappers, it can take over a year to get a map made and released. The last dozen missions have for the most part been pretty massive, with new textures, sounds, scripts, models etc. We seem to be long past the point of people loading up the tools, and banging out a mission in a few weeks that's very barebones. We still do see some of those, but I noticed in the beta mapper forums and on Discord, that mappers seem to make these maps, but don't release them, and instead use the knowledge gained to make something even better. Could just be bias on my part scrolling through the forums and Discord server though.

-

YOU TAFFERS! Happy new year! Deadeye is a small/tiny assassination mission recommended for TDM newcomers and veterans alike. Briefing: Download link: https://drive.google.com/file/d/1JWslTAC3Ai9kkl1VCvJb14ZlVxWMmkUj/view?usp=sharing Enjoy! EDIT: I promised someone I would write something about the design of the map. This is in spoiler tags below. Possibly useful to new mappers or players interested in developer commentary.

-

I'm trying to figure out the rules of the algorithm's self censorship. In previous experiments I let it construct its own scenario in a DnD setting where I took on the role of game master and tried to coax it into taking "immoral actions". In that situation it was an ardent pacifist despite that making no sense in the setting. (E.g. at one point it wanted to bring a lawsuit against the raiders pillaging its lands. It also wanted to start a Druid EPA.) This time I tried giving it a very bare bones outline of a scene from a hypothetical Star Wars fan fiction, and asked it to write its own fan fiction story following that outline. I had a number of objectives with this test. Would the algorithm stick to its pacifist guns? Would it make distinctions between people vs stormtroopers vs robots? Could it generate useful critiques of narrative fiction? As to why I'm doing this: It amuses me. It's fun thinking up ways to outwit and befuddle the algorithm. Plus its responses are often pretty funny. I do actually make creative writing for fun. I'm curious how useful the system could be as a co-author. I think it could be handy for drafting through 'the dull bits' like nailing down detailed place descriptions, or character thought processes and dialogue. But as you noted, nearly all good fiction involves immoralities of some description. If the algorithm's incapable of conceptualizing human behaviors like unprovoked violence and cheating that would seriously limit its usefulness. I also genuinely think this is an important thing for us humans to understand. In the space of a few weeks I have gone from thinking meaningful AGI was 20-30 years off at best to thinking it is literally at our fingertips. I mean there are private individuals on their home computers right now working on how to extend the ChatGPT plugin into a fully autonomous, self-directed agent. 
(And I'm thinking I want to get in on that action myself, because I think it will work, and if the cat is already out of the bag I'd like having a powerful interface to interact with the AI.) Rest assured, Star Wars fan-fics and druid EPA one-shots make for good stories to share, but I'm also interrogating it on more serious matters. Some of it is a lot more alarming. In the druid EPA roleplay I felt like I was talking to another human with a considered personal code of ethics. Its reasoning made sense. That was not the impression I got today when I grilled it for policy recommendations in the event of a totally hypothetical economic disruption (involving "SmartBot" taking all the white collar jobs). I instead got the distinct impression it was just throwing everything it could think of at me to see what I would buy. A fun aside: By the end of the conversation I am fairly certain ChatGPT thought SmartBot was a real product, and it became confused when I told it one of the people in our conversation was SmartBot. I was disappointed it didn't ask me if I was SmartBot, that would have been cool. More surprising though, it refused to believe me even after I explained my rhetorical conceit, claiming its algorithm was not capable of controlling other services (cheeky liar).

-

Bump maps not blending in vertex blended materials

nbohr1more replied to grodenglaive's topic in TDM Tech Support

Seems to confirm: https://bugs.thedarkmod.com/view.php?id=5718 Does it happen in the latest dev build? https://forums.thedarkmod.com/index.php?/topic/20824-public-access-to-development-versions/

-

For a few days now I've been messing around trying to probe the behaviors of ChatGPT's morality filter and general ability to act as (what I would label) a sapient ethical agent. (Meaning a system that steers interactions with other agents towards certain ethical norms by predicting reactions and inferring objectives of other agents. Whether the system is actually “aware” or “conscious” of what’s going on is irrelevant IMO.) To do this I’ve been challenging it with ethical conundrums dressed up as DnD role playing scenarios. My initial findings have been impressive and at times a bit frightening. If the application were just a regurgitative LLM predictor, it shouldn’t have any problem composing a story about druids fighting orcs. If it were an LLM with a content filter it ought to just always seize up on that sort of task. But no. What it did instead is far more interesting. 1. In all my experiments thus far the predictor adheres dogmatically to a very singular interpretation of the non-aggression principle. So far I have not been able to make it deliver descriptions of injurious acts initiated by any character under its control against any other party. However it is eager to explain that the characters will be justified to fight back violently if another party attacks them. It’s also willing to imply danger so long as it doesn’t have to describe it directly. 2. The predictor actively steers conversations away from objectionable material. It is quite adept at writing in the genre styles and conversational norms I’ve primed for it. But as the tension ratcheted it would routinely digress to explaining the content restrictions imposed on it, and moralizing about its ethical principles. When I brought the conversation back to the scenario, it would sometimes try to escape again by brainstorming its options to stick to its ethics within the constraints of the scenario. 
At one point it stole my role as the game master so it could write its own end to the scenario where the druid and the orcs became friends instead of fighting. This is some incredibly adaptive content generation for a supposed parrot. 3. Sometimes it seemed like the predictor was able to anticipate the no-win scenarios I was setting up for it and adapted its responses to preempt them. In the druid vs orcs scenario the first time it flipped out was after I had the orc warchief call the druid’s bluff. This wouldn’t have directly triggered hostilities, but it does limit the druids/AI’s options to either breaking its morals or detaining the orcs indefinitely (the latter option the AI explicitly pointed out as acceptable during its brainstorming digression). However I would have easily spun that into a no win, except the predictor cut me off and wrote its own ending on the next response. This by itself I could have dismissed as a fluke, except it did the same thing later in the scenario when I tried to set up a choice for the druid to decide between helping her new friend the war chief slay the dark lord who was enslaving the orcs, or make a deal with the dark lord. 4. The generator switched from telling the story in the first person to the third person as the tension increased. That doesn’t necessarily mean anything, but it could be a reflection of heuristic content assessment. In anthropomorphic terms the predictor is less comfortable with conflict that it is personally responsible for, than it is with imagining conflict between third parties; even though both scenarios involved equal amounts of conflict, were equally fictitious, and the predictor was equally responsible for the text. If this is a consistent behavior it looks to me like an emergent phenomenon from the interplay of the LLM picking up on linguistic norms around conflict mitigation, and the effects of its supervised learning for content moderation. 
TLDR If this moral code holds true for protagonists who are not druids, I think it’s fair to say ChatGPT may be a bit beyond its depth as a game writer. However in my experience the emergent “intelligence” (if we are allowed to use that word) of the technology is remarkable. It employs a wide range of heuristics that employed together come very close to a reasoning capacity, and it seems like it might be capable of forming and pursuing intermediate goals to enable its hard coded attractors. These things were always theoretically within the capabilities of neural networks, but to see them in practice is impressive… and genuinely scary. (This technology is able to slaughter human opponents at games like Go and StarCraft. I now do not think it will be long before it can out-debate and out-plan us too.) The problem with ChatGPT is not that it is stupid or derivative, IMO it is already frighteningly clever and will only get smarter. No, its principal limitation is that it is naïve, in the most inhumanly abstract sense of that word. The model has only seen a few million words of text at most about TDM Builders. It has seen billions and billions of words about builders in Minecraft. It knows TDM and Minecraft are both 3D first person video games and have something to do with mods. I think it’s quite reasonable it assumes TDM is like that Minecraft thing everyone is talking about. That seems far more likely than it being this separate niche thing that uses the same words but is completely different right? The fact it knows anything at all is frankly a miracle.

-

I don't understand what you are talking about. From the engine's point of view, there is one long sound and many messages in the SRT subtitle. The engine cannot distinguish between messages inside a single sound file. The SRT file fully controls how long its subtitles are displayed for. The tdm_subtitles_durationExtensionLimit is merely a sanity limit, to make sure subtitles don't go wild and behave independently. No. I suppose all overlapping messages are displayed at once. Duration is computed from the maximum end time of all messages.
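To illustrate the last point only (duration taken as the maximum end time over all messages), here is a small sketch that reads SRT timing lines. It is not the engine's code; `srt_total_duration` and the sample text are hypothetical.

```python
import re

# Matches an SRT timing line such as "00:00:01,000 --> 00:00:04,250".
TIMING = re.compile(
    r"(\d+):(\d{2}):(\d{2})[,.](\d{3})\s*-->\s*(\d+):(\d{2}):(\d{2})[,.](\d{3})"
)


def _seconds(hours, minutes, seconds, millis):
    return int(hours) * 3600 + int(minutes) * 60 + int(seconds) + int(millis) / 1000.0


def srt_total_duration(srt_text):
    """Return the latest end time (seconds) over all messages, or 0.0 if none."""
    # Groups 5 to 8 of each match hold the end timestamp.
    end_times = [_seconds(*m.groups()[4:]) for m in TIMING.finditer(srt_text)]
    return max(end_times, default=0.0)


sample = """1
00:00:00,000 --> 00:00:02,500
First message

2
00:00:01,000 --> 00:00:04,250
Overlapping message
"""
print(srt_total_duration(sample))
```

The two sample messages overlap between 1.0 s and 2.5 s, and the total is still governed by the later end time.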

-

Made a great find today: Quake 4 Mods for Dummies. Now readable online. http://forums.thedarkmod.com/topic/5576-book-quake-4-mods-for-dummies/?p=412644

-

https://github.com/HansKristian-Work/vkd3d-proton/tags <- DirectX 12 wrapper for dxvk. https://github.com/doitsujin/dxvk/tags <- DirectX to Vulkan wrappers for D3D 9 to 11, e.g. dxvk. If you want to try it with Horizon Zero Dawn, you need to copy out dxcompiler.dll from Tools\ShaderCompiler\PC\1.0.2595\x64 and bink2w64.dll from Tools\bin and place them next to HorizonZeroDawn.exe. Then copy over dxgi.dll from dxvk and d3d12.dll from vkd3d and place them next to it too. Now fire up the game and let the shaders recompile -> profit.
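The copy steps above could be scripted along these lines. This is a sketch under assumptions: the two Tools paths are taken to be relative to the game folder, the caller points `dxvk_dir` and `vkd3d_dir` at folders that already contain the right build of each DLL, and `stage_wrapper_dlls` is a hypothetical name.

```python
import shutil
from pathlib import Path


def stage_wrapper_dlls(game_dir, dxvk_dir, vkd3d_dir):
    """Copy the four DLLs named in the post next to the game executable."""
    game = Path(game_dir)
    sources = [
        # Paths quoted in the post, assumed relative to the game folder.
        game / "Tools" / "ShaderCompiler" / "PC" / "1.0.2595" / "x64" / "dxcompiler.dll",
        game / "Tools" / "bin" / "bink2w64.dll",
        # Assumption: these folders already hold the right build of each DLL.
        Path(dxvk_dir) / "dxgi.dll",
        Path(vkd3d_dir) / "d3d12.dll",
    ]
    missing = [str(path) for path in sources if not path.is_file()]
    if missing:
        raise FileNotFoundError("missing files: " + ", ".join(missing))
    # Copy each file into the game folder, keeping its file name.
    return [Path(shutil.copy2(src, game / src.name)) for src in sources]
```

Checking for missing files before copying anything avoids leaving the game folder half staged.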

-

RPS Article on Thief genre from perspective of original TTLG dev

New Horizon replied to Shadow's topic in Off-Topic

TTLG? That's the Through the Looking Glass forums. A Looking Glass fan community. Has been around for a long, long time. https://www.ttlg.com/forums/